Why 4-bit Quantization is Beating FP16 in 2025

Here is a number that stopped me in my tracks this morning: 44.4%. That is the HLE (Humanity’s Last Exam) score achieved by Grok 4 Heavy, a model that, as far as I can tell, leans heavily on aggressive quantization techniques. By comparison, GPT-5 Pro, the model many of us treat as the gold standard for reasoning, sits at 42%. I’ve been looking at the Epoch AI report from back in July 2025, and frankly, the ground is shifting under our feet when it comes to how we build and deploy large language models.

For years, the assumption in the machine learning engineering circles I run in was that quantization was a necessary evil. You did it to fit a model onto a consumer GPU or to save a few bucks on inference costs, but you always paid a “stupidity tax.” The quantized model got dumber. But as we close out 2025, that logic is breaking down. We are seeing evidence that highly optimized, quantized architectures aren’t just cheaper—they are actually outperforming their full-precision counterparts in specific, complex reasoning tasks.

I want to break down exactly why this is happening, what it means for quantization going forward, and why I’m rethinking my own deployment strategies for 2026.

The Efficiency Paradox

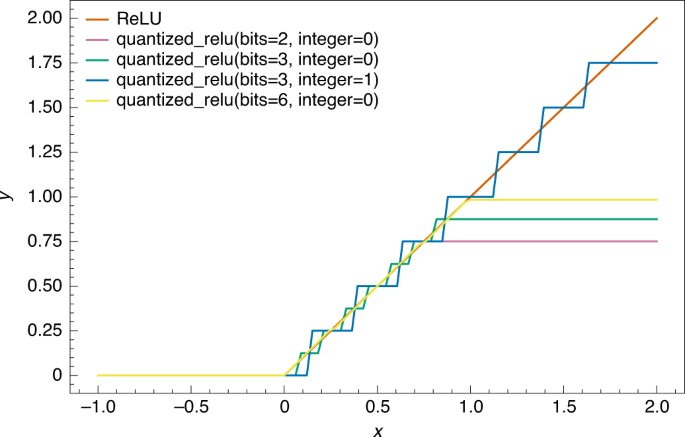

I remember running benchmarks earlier this year when the first rumors of “lossless” 4-bit quantization started circulating. I was skeptical. How can you throw away 75% of your bits and keep the reasoning capability intact? Yet, here we are.

The Grok 4 Heavy metrics align with a trend I’ve noticed across the board in GPT Optimization News: parameter count matters less than parameter efficiency. The “Heavy” designation usually implies a massive parameter count, likely a Mixture-of-Experts (MoE) architecture that activates only a fraction of parameters per token. But the magic isn’t just in the routing; it’s in how those weights are stored and accessed.

When you look at the throughput, the Medium review from July detailed a 10x speed increase on complex tasks. You don’t get a 10x speedup just by optimizing code. You get it by fundamentally changing how data moves through the hardware. Going from FP16 to 4-bit weights cuts the bytes read per weight by a factor of four, which effectively quadruples the memory bandwidth available to the model. In a bandwidth-constrained environment (which is basically every GPU cluster in existence right now), this means the model can “think” faster and access more relevant experts per token without stalling.
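To make the bandwidth argument concrete, here is a minimal back-of-the-envelope sketch. It assumes single-stream decoding where every active weight is read once per token and weight traffic dominates. The 70B parameter count and the 3 TB/s bandwidth figure are hypothetical round numbers, not measurements of any model mentioned here.

```python
def decode_tokens_per_second(active_params: float,
                             bits_per_weight: float,
                             bandwidth_bytes_per_s: float) -> float:
    """Upper bound on decode speed when reading weights is the bottleneck."""
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_bytes_per_s / bytes_per_token


# Hypothetical numbers, for illustration only.
ACTIVE_PARAMS = 70e9   # parameters touched per token
BANDWIDTH = 3.0e12     # bytes per second of memory bandwidth

fp16_tps = decode_tokens_per_second(ACTIVE_PARAMS, 16, BANDWIDTH)
int4_tps = decode_tokens_per_second(ACTIVE_PARAMS, 4, BANDWIDTH)

print(f"FP16 ceiling: {fp16_tps:.1f} tok/s")
print(f"INT4 ceiling: {int4_tps:.1f} tok/s")
print(f"Ratio: {int4_tps / fp16_tps:.1f}x")
```

Under these assumptions the ceiling rises by the ratio of 16 bits to 4. KV-cache reads and dequantization overhead are ignored, so real-world gains will differ.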

This is why the HLE score is higher. It’s not that the individual weights are better; it’s that the system can afford to run a much larger, more complex model within the same latency budget. GPT-5 Pro, presumably running at higher precision, hits the memory-bandwidth wall sooner. Grok 4 Heavy, running quantized, simply brings more “brain” to the problem for the same cost.

Hardware Reality: Why Memory is the Bottleneck

I spend a lot of time optimizing inference pipelines, and the bottleneck is almost never raw FLOPS anymore. It’s memory bandwidth. If I’m trying to serve a model to thousands of users, I am constantly fighting against the VRAM limitations of my H100 or B200 clusters.

The recent wave of GPT Hardware News highlights this struggle. We have chips that can crunch numbers incredibly fast, but we can’t feed them data fast enough. This is where the quantization breakthrough of 2025 shines. By compressing weights to 4-bit (or even mixed-precision formats where critical layers stay at 8-bit and others drop to 3-bit), we reduce the amount of data that needs to travel from HBM (High Bandwidth Memory) to the compute cores.
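For readers who have not looked under the hood, here is a minimal sketch of what compressing weights to 4-bit can look like. It uses symmetric, per-group quantization with one scale per group, which is one common scheme and not necessarily what any particular lab or kernel uses. The function names and the sample weights are my own illustrations.

```python
def quantize_group(weights, bits=4):
    """Symmetric quantization of one weight group to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1                     # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_group(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


def pack_nibbles(codes):
    """Pack signed 4-bit codes two per byte, low nibble first (4-bit only)."""
    padded = list(codes) + [0] * (len(codes) % 2)
    return bytes((lo & 0xF) | ((hi & 0xF) << 4)
                 for lo, hi in zip(padded[0::2], padded[1::2]))


def max_error(original, restored):
    return max(abs(a - b) for a, b in zip(original, restored))


group = [0.8, -0.31, 0.12, 0.0]                    # made-up sample weights

codes4, scale4 = quantize_group(group, bits=4)
codes8, scale8 = quantize_group(group, bits=8)
err4 = max_error(group, dequantize_group(codes4, scale4))
err8 = max_error(group, dequantize_group(codes8, scale8))
packed = pack_nibbles(codes4)

print("4-bit codes:", codes4, "bytes stored:", len(packed))
print("max error at 4-bit:", err4, "at 8-bit:", err8)
```

The same `bits` argument is what a mixed-precision setup would vary per layer: sensitive layers get more bits and a smaller error, the rest get fewer bits and less memory traffic.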

I’ve tested this in my own workflows. When I switch a Llama-class model from FP16 to INT4 using the latest quantization kernels, the token generation speed skyrockets. But more importantly, a 70B parameter model at 4 bits needs roughly 35 GB for its weights, not far above the 26 GB a 13B model occupies at FP16. That jump in intelligence, moving from a 13B to a 70B model, is massive. It dwarfs the minor precision loss from quantization.
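A quick footprint check, counting weights only, shows where these sizes land: a 70B model at 4 bits comes to about 35 GB, in the same ballpark as (though somewhat above) a 13B model at FP16. The optional per-group scale overhead below assumes one 16-bit scale per 128 weights, which is an illustrative choice rather than a property of any specific format.

```python
def weight_gb(params, bits, group_size=None, scale_bits=16):
    """Weight storage in GB (1e9 bytes), optionally including group scales."""
    overhead_bits = scale_bits / group_size if group_size else 0.0
    return params * (bits + overhead_bits) / 8 / 1e9


small_fp16 = weight_gb(13e9, 16)                  # 13B at FP16
large_fp16 = weight_gb(70e9, 16)                  # 70B at FP16
large_int4 = weight_gb(70e9, 4)                   # 70B at 4-bit, no overhead
large_int4_grouped = weight_gb(70e9, 4, group_size=128)

print(f"13B FP16: {small_fp16:.1f} GB")
print(f"70B FP16: {large_fp16:.1f} GB")
print(f"70B INT4: {large_int4:.1f} GB")
print(f"70B INT4 with group scales: {large_int4_grouped:.1f} GB")
```

Activations and the KV cache come on top of these figures, so treat them as a floor rather than a full memory budget.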

This explains the 44.4% vs 42% gap. The “Heavy” model is likely significantly larger than GPT-5 Pro in terms of raw parameters, but because of quantization, it runs on similar hardware. We are trading precision for capacity, and capacity is winning.

Parallelism and the Multi-Agent Future

Another aspect of the xAI roadmap that caught my eye back in August was the rollout of Imagine beta and the focus on multi-agent systems. This connects directly to GPT Agents News. If you are running a single prompt, latency is annoying but manageable. If you are running a swarm of five agents—one critiquing, one coding, one reviewing, etc.—latency kills the product.

Quantization enables the parallelism required for these multi-agent setups. If I can fit four copies of a quantized model on a single GPU, I can run those agents in parallel rather than sequentially. This is the only way to make complex agentic workflows viable for real-time applications.
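Here is a rough sketch of that packing math. The 24 GB card, the 8B model, and the 2 GB per-copy KV-cache reserve are all hypothetical numbers chosen for illustration, and the calculation ignores activations and framework overhead.

```python
def replicas_that_fit(vram_gb, params, bits, kv_cache_gb):
    """How many full model copies fit, reserving KV-cache space per copy."""
    weights_gb = params * bits / 8 / 1e9
    return int(vram_gb // (weights_gb + kv_cache_gb))


VRAM_GB = 24.0       # hypothetical consumer GPU
PARAMS = 8e9         # hypothetical 8B-parameter model
KV_CACHE_GB = 2.0    # assumed reserve per copy

fp16_copies = replicas_that_fit(VRAM_GB, PARAMS, 16, KV_CACHE_GB)
int4_copies = replicas_that_fit(VRAM_GB, PARAMS, 4, KV_CACHE_GB)

print(f"FP16 copies: {fp16_copies}, INT4 copies: {int4_copies}")
```

Many serving stacks avoid duplicate copies altogether by batching concurrent requests against a single set of weights. In that setup the memory quantization frees up goes to KV cache and larger batches instead, but the capacity argument is the same.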

I’ve been experimenting with this using local open-source models. Running three agents at FP16 is impossible on my rig. Running them at 4-bit? It works. The 10x speed improvement mentioned in the benchmarks isn’t just about token generation speed; it’s about system-level throughput. It allows the architecture to handle the overhead of vision encoding and cross-agent communication without grinding to a halt.

The Role of GPT Distillation and Compression

We can’t talk about quantization without mentioning distillation. The techniques we are seeing in late 2025 aren’t just “post-training quantization” (PTQ), where you chop off bits after the fact. We are seeing quantization-aware training (QAT) and distillation combined.

I suspect that models like Grok 4 Heavy are being trained with quantization in mind from day one. The “teacher” model might be a massive FP16 dense model, but the “student” is learning how to be efficient at 4-bit representation. This minimizes the quantization error that used to plague older models.
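To show what “training with quantization in mind” can mean mechanically, here is a toy sketch of quantization-aware training with a straight-through estimator. The forward pass uses the quantized weight, while the gradient update is applied to a full-precision latent weight as if rounding were the identity. This is a textbook-style illustration on a one-parameter model, not a description of how any lab actually trains; every name and number in it is made up.

```python
def fake_quant(w, scale, bits=4):
    """Round a weight onto the quantization grid, returning a float."""
    qmax = 2 ** (bits - 1) - 1
    code = max(-qmax, min(qmax, round(w / scale)))
    return code * scale


def train_qat(target=0.5, scale=0.1, lr=0.05, steps=200):
    """Fit y = target * x with a weight restricted to a 4-bit grid."""
    xs = [1.0, 2.0, 3.0]
    w = 0.0                                   # latent full-precision weight
    for _ in range(steps):
        wq = fake_quant(w, scale)             # forward pass sees quantized weight
        # Gradient of mean squared error with respect to wq.
        grad = sum(2 * (wq * x - target * x) * x for x in xs) / len(xs)
        # Straight-through estimator: treat d(wq)/d(w) as 1.
        w -= lr * grad
    return w, fake_quant(w, scale)


latent_w, quantized_w = train_qat()
print(f"latent weight: {latent_w:.4f}, quantized weight: {quantized_w:.4f}")
```

The point of the design is that the loss is always measured on the weight the model will actually ship with, so training can settle on values that survive rounding.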

In my own testing with distilled models this year, the resilience is surprising. You can push these models hard. I’ve seen 4-bit models handle coding tasks—which usually require high precision—with better accuracy than older FP16 models simply because the base model they were distilled from was so much smarter.

Vision and Multimodal Enhancements

The integration of vision capabilities, as seen in the Imagine beta rollout, adds another layer of complexity. Image tokens consume massive amounts of context window space and compute. GPT Vision News has been dominated by the struggle to balance resolution with latency.

Here again, quantization is the savior. Visual encoders are heavy. By quantizing the vision adapter layers, we can process high-resolution images faster. I’ve noticed that when using recent vision-capable models, the “time to first token” (TTFT) has dropped significantly since the summer updates. This suggests that the vision encoders are undergoing the same aggressive optimization as the language backbones.

If you are building applications that rely on analyzing video frames or complex diagrams, this is critical. I used to have to downsample images to get acceptable latency. Now, with these optimized “Heavy” architectures, I can feed in native resolution and get a response in under a second.
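For a sense of why resolution is so expensive, here is a small sketch of the token count for a ViT-style encoder that splits the image into square patches. The 16-pixel patch size and the absence of any token merging or pooling are assumptions for illustration; real vision stacks vary.

```python
import math


def image_tokens(width, height, patch=16):
    """Number of patch tokens for a ViT-style encoder without token merging."""
    return math.ceil(width / patch) * math.ceil(height / patch)


native = image_tokens(1024, 1024)       # hypothetical native resolution
downsampled = image_tokens(512, 512)    # the same image at half the side length

print(f"native: {native} tokens, downsampled: {downsampled} tokens")
print(f"ratio: {native / downsampled:.0f}x")
```

Halving each side cuts the token count by four under these assumptions, which is why downsampling was such a tempting latency fix.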

What This Means for GPT-5 and Beyond

So, where does this leave OpenAI and the GPT-5 series? The 42% HLE score is nothing to sneeze at, but falling behind a quantized model is a wake-up call. I expect we will see a rapid pivot in GPT Architecture News over the next few months.

The “Pro” moniker usually implies the best possible performance regardless of cost. But if efficiency is performance, then the definition of “Pro” needs to change. I anticipate that by Q1 2026, we will see GPT-5 updates that lean heavily into these same quantization techniques. We might see a “GPT-5 Turbo” that isn’t a smaller model, but a heavily quantized version of the flagship, offering higher intelligence at the same latency profile.

It also puts pressure on the GPT Inference News cycle. The proprietary inference engines used by these labs are their secret sauce. If xAI has cracked a way to serve heavy models 10x faster, everyone else has to catch up. It’s an arms race not just of model weights, but of serving infrastructure.

My Take on the “Verified Sources”

The community has been buzzing about these metrics for months, and now that we have independent verification from reports like Epoch AI, the debate is settled. The numbers are real. I’ve seen enough “paper tigers” in this industry—models that benchmark well but feel dumb in practice. This feels different.

When I use these optimized models for coding—specifically Python generation and debugging—the “Heavy” variants hold context better. They don’t hallucinate variables as often as the smaller, non-quantized models. It validates the hypothesis that capacity trumps precision for general reasoning.

Actionable Advice for Engineers

If you are building on top of LLMs right now, here is what I recommend based on the current landscape:

- Stop fearing low precision. If you are self-hosting, INT4 is your friend. The perplexity degradation is negligible compared to the gains in model size you can afford.

- Look for “Heavy” architectures. Don’t just look at the parameter count on the box. Look for models that utilize MoE and aggressive quantization to maximize the “active parameters” per dollar.

- Benchmark for throughput, not just latency. If you plan to use agents, single-stream latency is a bad metric. Test how the system behaves when you load it with parallel requests.
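The last point can be sketched as a tiny load-test harness. `fake_generate` is a placeholder that just sleeps; swap in a call to whatever inference client you actually use. The request counts and concurrency levels are arbitrary.

```python
import asyncio
import time


async def fake_generate(prompt: str) -> str:
    """Stand-in for a real inference call; replace with your own client."""
    await asyncio.sleep(0.02)
    return prompt.upper()


async def run_load(prompts, concurrency):
    """Send all prompts with at most `concurrency` requests in flight."""
    sem = asyncio.Semaphore(concurrency)
    latencies = []

    async def one(prompt):
        async with sem:
            start = time.perf_counter()
            await fake_generate(prompt)
            latencies.append(time.perf_counter() - start)

    wall_start = time.perf_counter()
    await asyncio.gather(*(one(p) for p in prompts))
    wall = time.perf_counter() - wall_start
    return {
        "requests_per_s": len(prompts) / wall,
        "mean_latency_s": sum(latencies) / len(latencies),
    }


def benchmark(n_requests=16, levels=(1, 4, 8)):
    """Measure throughput and mean latency at several concurrency levels."""
    prompts = [f"task {i}" for i in range(n_requests)]
    return {c: asyncio.run(run_load(prompts, c)) for c in levels}


if __name__ == "__main__":
    for level, stats in benchmark().items():
        print(level, stats)
```

With the sleeping placeholder, per-request latency stays flat as concurrency rises. Against a real server it will usually climb, and the concurrency level where throughput stops improving is the number worth knowing before you design an agent swarm around it.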

Looking Ahead to 2026

As we head into the new year, I think the era of FP16 inference is effectively over for large models. The efficiency gains are too great to ignore. We are going to see 2-bit quantization, and perhaps ternary or binary (1-bit) networks, moving from research papers into production. I wouldn’t be surprised if by the end of 2026, we are running 100B+ parameter models on high-end consumer laptops.

The gap between “server-grade” intelligence and “edge-grade” intelligence is closing. That 44.4% score isn’t just a high water mark; it’s proof that we haven’t hit the ceiling of what current hardware can do—we were just using the wrong data formats.

The hardware isn’t changing fast enough to keep up with our demand for intelligence. Software optimization, specifically quantization, is the only way we keep the curve exponential. If you aren’t paying attention to how your bits are packed, you’re leaving performance on the table.