Beyond the Glitch: A Deep Dive into GPT Tokenization and Its Critical Role in AI Stability

The Unseen Engine: Understanding the Core of GPT Language Processing

Users of large language models (LLMs) like ChatGPT have occasionally witnessed moments of bizarre, nonsensical output—a stream of repetitive phrases, mismatched words, or pure gibberish. While often dismissed as a temporary “glitch,” these incidents offer a rare and valuable window into the intricate, foundational mechanics of how these powerful AI systems operate. At the heart of this process lies a critical, yet often overlooked, component: tokenization. This is the fundamental first step that translates human language into a format a machine can understand. Far from being a simple conversion, tokenization is a complex field filled with trade-offs that directly impact model performance, efficiency, and even stability. This article delves deep into the world of GPT Tokenization News, exploring what happens when this crucial process goes awry, the ongoing innovations in the field, and the practical implications for developers and users alike. Understanding tokenization is key to appreciating the full scope of both the power and the fragility of modern AI.

Section 1: The Foundations of GPT Tokenization: From Text to Tensors

Before any GPT model can compose a sonnet, write a line of code, or answer a question, it must first deconstruct the input text into a numerical representation. This process is the bedrock of natural language processing and is essential for the entire AI pipeline, influencing everything from training efficiency to the quality of the final output.

The Bridge Between Human Language and Machine Logic

At its core, tokenization is the process of breaking down a sequence of text into smaller units called “tokens.” These tokens can be words, parts of words (subwords), or even individual characters. A model like GPT-4 doesn’t “read” the word “apple”; instead, it sees a numerical ID, such as ‘35512’, which corresponds to the token “apple” in its predefined vocabulary. This vocabulary is a massive dictionary, created during the model’s training phase, that maps unique tokens to specific integers.

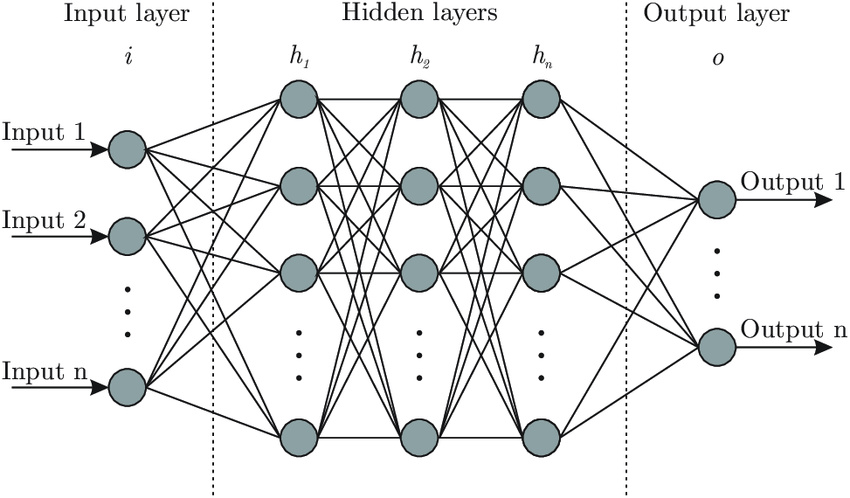

Why is this necessary? Neural networks, the foundation of GPT Architecture News, operate on mathematical data—specifically, vectors and matrices (tensors). They cannot process raw text strings. Tokenization serves as the essential bridge, converting the fluid, unstructured world of human language into the rigid, numerical format that machine learning models require. The choice of tokenization strategy profoundly affects the model’s ability to understand nuance, grammar, and new or rare words.

Common Tokenization Algorithms: BPE, WordPiece, and SentencePiece

Early NLP models used simple methods like splitting text by spaces, but this approach fails spectacularly with compound words, punctuation, and languages that don’t use spaces. Modern GPT models, following trends in GPT Training Techniques News, primarily use a subword tokenization algorithm called Byte-Pair Encoding (BPE).

BPE works by starting with a vocabulary of individual characters and iteratively merging the most frequently occurring adjacent pairs of units. For example, it might first merge ‘h’ and ‘a’ into ‘ha’, then ‘ha’ and ‘p’ into ‘hap’, and so on. This allows the model to:

- Handle any word: Even a word it has never seen before can be broken down into known subword or character tokens. This effectively eliminates the “out-of-vocabulary” problem.

- Capture morphological meaning: Common prefixes and suffixes like “un-“, “re-“, or “-ing” often become their own tokens, allowing the model to understand the grammatical structure of words like “unhappiness” (tokenized as “un”, “happi”, “ness”).

- Balance vocabulary size and sequence length: It keeps the vocabulary size manageable while preventing sentences from becoming excessively long sequences of individual characters.

While BPE is central to OpenAI’s models, it’s worth noting other popular algorithms in the GPT Ecosystem News, such as WordPiece (used by Google’s BERT) and SentencePiece, which is language-agnostic and treats the input text as a raw stream of Unicode characters, making it highly effective for GPT Multilingual News.

Section 2: When Tokens Go Awry: Analyzing Glitches and Performance Bottlenecks

The tokenization process, while elegant, is a source of significant complexity and potential failure. Recent public incidents of models producing nonsensical output highlight the fragility of this system. When the conversion from text to tokens or, more often, from tokens back to text breaks down, the model’s coherence collapses.

The “Gibberish” Phenomenon: A Look Under the Hood

The strange, looping, or nonsensical text that sometimes emerges from a model is often a symptom of a failure in the final decoding step. The model itself might be generating a perfectly valid sequence of token IDs, but the system responsible for converting these numbers back into human-readable text fails. This can happen for several reasons:

- Vocabulary Mismatch: An inference engine or API endpoint might be using a slightly different tokenizer vocabulary than the one the core model was trained on. This could cause a specific token ID to map to the wrong text or to nothing at all, leading to errors.

- Beam Search or Sampling Anomalies: During text generation, algorithms like beam search are used to pick the most likely next token. Under certain conditions, especially with unusual prompts or specific temperature settings, these algorithms can get stuck in a repetitive loop of tokens, producing endless streams of the same phrase.

- Software Bugs: As with any complex software system, a simple bug in the code that handles the detokenization process can lead to catastrophic output failures. This is a crucial area of focus in GPT Safety News, as predictable and stable output is paramount.

These events underscore the importance of robust engineering in GPT Deployment News and the need for rigorous testing across the entire model pipeline, not just the core neural network.

Out-of-Vocabulary (OOV) and Subword Splitting Issues

While BPE mitigates the classic OOV problem, it introduces its own set of challenges. Highly specialized or rare terms, common in fields like medicine or law, often get fragmented into many small, semantically weak tokens. For example, a term like “immunosuppressant” might be split into “im”, “muno”, “suppress”, “ant”. This fragmentation can make it harder for the model to grasp the full, precise meaning of the term, a significant concern for professionals relying on GPT in Healthcare News or GPT in Legal Tech News. This can also subtly introduce issues related to GPT Bias & Fairness News, as names or cultural terms not well-represented in the training data may be fragmented, leading to poorer performance for certain demographic groups.

Performance Implications: Latency, Throughput, and Cost

Tokenization has a direct and measurable impact on performance. The core computational cost of a GPT model is related to the number of tokens it processes. A verbose prompt or a document filled with complex words that break into many sub-tokens will be more expensive and slower to process than a concise prompt using common words. This is a critical factor in GPT Latency & Throughput News. Developers using the API are billed per token, so inefficient tokenization directly translates to higher operational costs. Optimizing prompts to use “token-cheap” language is a key skill for building scalable and cost-effective GPT Applications News.

Section 3: The Evolving Landscape of Tokenization and Multimodality

The challenges and limitations of current tokenization methods have made it a hotbed of innovation. As models grow in scale and capability, the pressure to develop more efficient, intelligent, and versatile tokenization strategies is mounting, representing a key frontier in GPT Research News.

Beyond BPE: The Quest for Better Tokenizers

Researchers are actively exploring alternatives to BPE that could provide a richer semantic understanding. One major area of interest is in “token-free” or byte-level models. These models operate directly on the raw bytes of UTF-8 text, completely bypassing a predefined vocabulary. The primary advantage is their universality—they can process any text in any language without a specialized tokenizer. However, this comes at a steep computational cost, as a sequence of bytes is much longer than a sequence of subword tokens, demanding more resources, which is a key topic in GPT Hardware News and discussions around GPT Efficiency News. As hardware and optimization techniques improve, these methods may become more viable for future models, potentially influencing the direction of GPT-5 News.

Multilingual and Multimodal Challenges

Creating a single, unified tokenizer for hundreds of languages is a monumental task. Languages with complex scripts like Chinese or agglutinative languages like Turkish pose unique challenges for algorithms like BPE, which were primarily developed with English in mind. This is a central problem in creating truly global and equitable AI, a core theme in GPT Multilingual News and GPT Cross-Lingual News.

The challenge is even greater in the realm of multimodal AI. For models discussed in GPT Vision News, the concept of a “token” must be extended to images. The dominant approach, inspired by the Vision Transformer (ViT), is to break an image into a grid of smaller patches. Each patch is then flattened and projected into a vector, becoming a “visual token.” These visual tokens are then fed into the transformer architecture alongside text tokens. The efficiency and granularity of this image tokenization process are critical for the model’s ability to understand visual details, making it a key aspect of GPT Multimodal News.

The Impact on Future Models and Applications

The next generation of LLMs will likely feature more sophisticated tokenization schemes. We can anticipate larger vocabularies optimized for a wider range of languages and domains, including programming languages, which is vital for improving GPT Code Models News. A more efficient tokenizer is also a prerequisite for handling the ever-increasing context windows of models. Processing a 1 million token context window requires a highly optimized pipeline, from tokenization to inference. These advancements will enable more powerful GPT Agents News that can process vast amounts of information and create richer experiences in fields like GPT in Gaming News and GPT in Content Creation News.

Section 4: Practical Considerations and Best Practices for Developers

For developers building on top of GPT models, understanding tokenization isn’t just an academic exercise—it’s a practical necessity for building robust, efficient, and cost-effective applications. Interacting with GPT APIs News requires a token-aware mindset.

Understanding and Managing Token Limits

Every GPT model has a maximum context window, a limit on the number of tokens it can process at once (input prompt + generated output). This limit is a hard constraint.

- Tip 1: Count Your Tokens. Before sending a request to an API, always use a library like OpenAI’s

tiktokento calculate the exact number of tokens in your prompt. This allows you to avoid errors from exceeding the context limit and helps you accurately predict costs. - Tip 2: Remember the “Words to Tokens” Ratio. As a rule of thumb, for English text, 100 tokens is roughly equivalent to 75 words. This ratio can vary significantly based on the complexity of the text and the language used.

Prompt Engineering for Optimal Tokenization

The way you phrase your prompts can impact their token count and, consequently, their cost and processing time.

- Tip 3: Be Clear and Concise. Avoid unnecessary verbosity. While providing context is important, ensure every word serves a purpose.

- Tip 4: Watch for Spacing and Special Characters. Multiple spaces, newlines, and complex Unicode characters can sometimes be treated as separate tokens or influence how adjacent words are tokenized. Clean, simple formatting is often more token-efficient.

Fine-Tuning and Custom Models

When working with GPT Fine-Tuning News, the tokenizer is generally fixed. You are adapting the model’s weights, not its vocabulary. It’s crucial to ensure that the data you use for fine-tuning is preprocessed in a way that is compatible with the base model’s tokenizer. Feeding it text with unusual formatting or characters that tokenize poorly can degrade the performance of your GPT Custom Models News. This is a key consideration for anyone working with specialized GPT Platforms News or internal GPT Tools News.

Conclusion: The Silent Foundation of AI’s Future

Tokenization is the invisible yet indispensable foundation upon which the entire edifice of large language models is built. While it operates silently in the background, its design and implementation have far-reaching consequences for everything from model stability and performance to cost and fairness. The occasional public “meltdown” serves as a powerful reminder that these systems are not magic; they are complex, engineered artifacts with specific points of failure. As we look toward the GPT Future News, advancements in tokenization—whether through more efficient algorithms, token-free architectures, or seamless multimodal integration—will be a critical driver of progress. For developers, researchers, and even curious users, understanding this fundamental layer is no longer optional. It is the key to unlocking the full potential of AI and building the next generation of intelligent, reliable, and efficient applications that will define our world.