The New Era of Accessible AI: A Deep Dive into OpenAI’s Groundbreaking Open-Source GPT Models

A Paradigm Shift in AI: OpenAI Unleashes Powerful, Accessible Open-Source Models

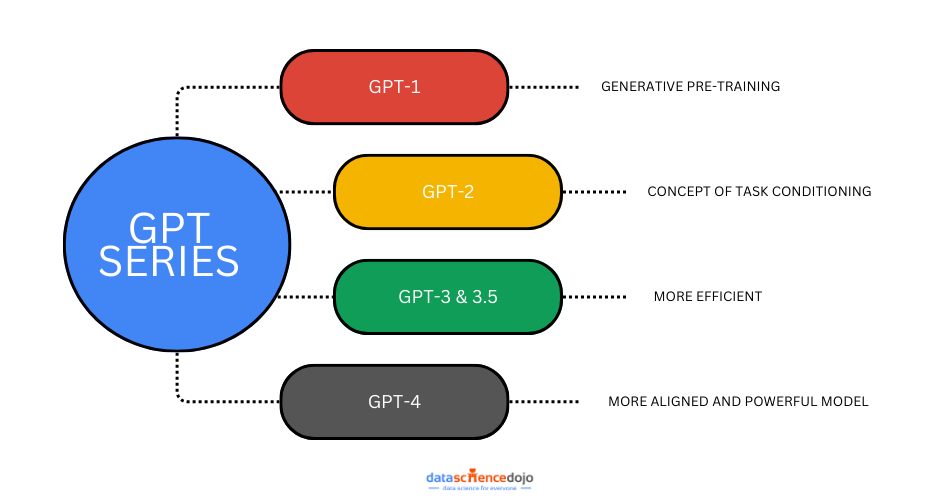

In a move that has sent ripples across the technology landscape, OpenAI has signaled a major shift in its strategy and in the broader AI ecosystem. The company has released two powerful models with openly available weights, gpt-oss-120b and gpt-oss-20b, under the Apache 2.0 license. The release is a significant departure from OpenAI’s recent focus on proprietary APIs, and it gives developers, researchers, and enterprises direct access to capable models they can run themselves. The larger model, with roughly 120 billion parameters, is efficient enough to run on a single data center GPU; the smaller model, with roughly 20 billion parameters, is designed for local execution on consumer hardware. Together they change how high-performance AI can be developed, deployed, and used. This article provides a technical analysis of the new models, covering their architecture, reported benchmark performance, and implications for everything from edge computing to enterprise AI safety.

Unveiling the New Models: Specifications and Key Features

The release centers on two distinct yet complementary models, each aimed at a different deployment target. Both combine strong performance with efficient inference, challenging existing leaders in the open-source space and offering a viable alternative to proprietary systems.

gpt-oss-120b: The Powerhouse on a Single GPU

The flagship of this release is gpt-oss-120b, a model with roughly 120 billion parameters that can run on a single high-end data center GPU. Key to this is 4-bit quantization: the bulk of the weights are stored in the MXFP4 4-bit floating-point format, which sharply reduces the model’s memory footprint without a significant loss in accuracy. This removes a major deployment bottleneck, making large-model inference possible without a multi-GPU server cluster.

Early benchmarks suggest that gpt-oss-120b is highly competitive in reasoning, summarization, and code generation; OpenAI reports near-parity with its proprietary o4-mini model on core reasoning benchmarks. For developers, this means good latency and throughput on manageable hardware: the model fits on a single 80 GB GPU such as an NVIDIA H100. A 24 GB consumer card such as the RTX 4090 cannot hold the full model, because roughly 120 billion parameters at 4 bits each already occupy about 60 GB; running it there requires offloading part of the model to system memory at a substantial cost in speed. Even so, a single-GPU footprint lowers the barrier to entry for startups and research labs, enabling applications that were previously cost-prohibitive.
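The relationship between parameter count, numeric precision, and memory can be checked with simple arithmetic. The sketch below is an illustration only: it uses the nominal parameter counts quoted in this article, assumes every weight is stored at the same precision, and ignores the KV cache, activations, and runtime overhead, so real requirements will be higher. The function name `weight_memory_gb` is made up for this example.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts from the article; real checkpoints differ slightly.
for name, params in [("gpt-oss-120b", 120e9), ("gpt-oss-20b", 20e9)]:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} @ {bits}-bit: ~{gb:.0f} GB")
```

Comparing the 4-bit figure with the VRAM of the card you plan to use gives a quick first estimate of whether the model can fit without offloading.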

gpt-oss-20b: High-Fidelity AI for the Edge

Complementing its larger sibling, gpt-oss-20b is a model with roughly 20 billion parameters optimized for local and edge deployment. It is designed to run on devices with about 16 GB of memory, including modern laptops such as Apple’s M-series MacBooks and PCs with consumer-grade GPUs, and it can also run on CPU-only configurations with sufficient RAM, albeit more slowly. It relies on aggressive compression and optimization to deliver a responsive experience for on-device applications.

The potential applications range from assistants and chatbots that operate entirely offline, so that user data never leaves the device, to intelligent features embedded directly in software. This matters for IoT and other edge scenarios, where low-latency, on-device processing is critical. The release will likely spur a wave of privacy-first applications and tools built on local inference.

A Technical Deep Dive: Architecture and Training Innovations

The performance-to-efficiency ratio of these models is not accidental; it results from advances in model architecture, training methodology, and post-training optimization. This section examines the technical underpinnings that make these models notable.

Architectural Advancements and Training Data

According to OpenAI’s published model details, gpt-oss-120b uses a Mixture-of-Experts (MoE) structure. In an MoE model, each MoE layer contains many expert sub-networks, and a router selects only a few of them for any given input token, so only a fraction of the total parameters (about 5 billion per token, by OpenAI’s figures) is active at once. This dramatically reduces the computational cost of inference: the model keeps a large parameter count, which contributes to its knowledge and nuance, while its per-token compute is closer to that of a much smaller dense model. All experts must still be held in memory, so MoE reduces compute rather than memory footprint. The design demonstrates a path toward larger models that remain computationally feasible.
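The routing idea can be illustrated with a toy example. The following is a minimal sketch of generic top-k expert routing, not the actual gpt-oss implementation: the expert functions, the router scores, and the names `route_top_k` and `moe_forward` are all hypothetical, and a real model would compute the router scores with a learned linear layer.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    # Normalise the weights over the selected experts only.
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    out = [0.0] * len(x)
    for idx, w in route_top_k(router_logits, k):
        y = experts[idx](x)  # the other experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Toy experts: each one scales its input by a different factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for one token

print(route_top_k(router_logits, k=2))
print(moe_forward([1.0, -1.0], experts, router_logits, k=2))
```

With four experts and `k=2`, only half of the expert parameters take part in processing this token, which is the source of the compute savings described above.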

OpenAI has also emphasized the quality and ethical sourcing of the training data, in response to growing industry concerns about ethics, bias, and fairness. By curating a more balanced and diverse training corpus, the models aim to mitigate some of these issues. The models also use an improved tokenizer, which enhances their handling of diverse languages and dialects and benefits multilingual and cross-lingual applications.

The Magic of Efficiency: Quantization and Distillation

Much of the efficiency of these models comes from optimization techniques applied to the weights. Quantization is the process of reducing the numerical precision of a model’s weights, for example from 16-bit or 32-bit floating-point numbers to 8-bit or 4-bit values. A 4-bit weight takes a quarter of the memory of a 16-bit weight, at the cost of some rounding error, which is typically kept small by storing a separate scale factor for each small block of weights. The quantization used for gpt-oss-120b preserves enough fidelity to make single-GPU deployment possible, which matters to anyone working with inference engines and model optimization.
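To make the idea concrete, here is a minimal sketch of block-wise symmetric 4-bit integer quantization. It is a generic textbook scheme chosen for readability, not the specific format used in the released checkpoints; the block size of 32, the toy weights, and all function names are assumptions made for this example.

```python
def quantize_block(values, bits=4):
    """Symmetric quantization of one block: returns (scale, integer codes)."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit codes
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the representable range.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, codes


def quantize(weights, block_size=32, bits=4):
    """Quantize a flat list of weights block by block."""
    return [quantize_block(weights[i:i + block_size], bits)
            for i in range(0, len(weights), block_size)]


def dequantize(blocks):
    """Reconstruct approximate weights from (scale, codes) blocks."""
    out = []
    for scale, codes in blocks:
        out.extend(scale * c for c in codes)
    return out


# Deterministic toy weights in the range [-1.0, 1.0].
weights = [0.02 * ((i * 37) % 101 - 50) for i in range(128)]
blocks = quantize(weights)
restored = dequantize(blocks)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"max abs reconstruction error: {max_err:.4f}")
```

Because each block carries its own scale, a single large weight only degrades the precision of its own block rather than the whole tensor, which is the main reason block-wise schemes hold up well at 4 bits.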

For gpt-oss-20b, it is widely speculated that distillation played a key role, although this has not been confirmed. The technique involves training a smaller “student” model (here, the 20b) to mimic the outputs, and sometimes the internal representations, of a much larger and more powerful “teacher” model (perhaps an unreleased internal prototype related to GPT-5). This lets the smaller model inherit much of the teacher’s nuanced capability and perform well beyond what its size would suggest.
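As an illustration of what a distillation objective looks like, the sketch below computes the classic soft-label loss: the KL divergence between temperature-softened teacher and student distributions, scaled by the square of the temperature. This is the standard textbook formulation and covers output logits only; whether or how OpenAI used distillation is not known, and the logits and the temperature of 2.0 here are invented for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits for one token
loss_same = distillation_loss([4.0, 1.0, 0.5], teacher)
loss_close = distillation_loss([3.5, 1.2, 0.5], teacher)
loss_far = distillation_loss([0.5, 1.0, 4.0], teacher)
print(loss_same, loss_close, loss_far)
```

The loss is zero when the student reproduces the teacher’s distribution and grows as the two diverge, so minimizing it pushes the student toward the teacher’s full output distribution rather than just its top prediction.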

Reshaping the AI Ecosystem: Implications for Developers and Enterprises

The release of these models is more than a technical achievement; it is a strategic move that will reshape the ecosystem around GPT models. It directly addresses the growing demand for transparency, control, and cost-effectiveness in AI development and deployment.

Democratizing AI Development and Fostering Innovation

By releasing these models openly, OpenAI is empowering a global community of developers and researchers. The models provide a strong foundation for fine-tuning custom models tailored to specific domains. A university can fine-tune gpt-oss-20b for educational purposes, for example, and startups can build novel products without relying on expensive API calls, using open-source tools and platforms such as Hugging Face and Ollama for integration.

This should accelerate innovation in creative work, with artists and writers using locally run models for content generation, and in gaming, where on-device models can power dynamic NPCs and procedural content generation without network latency.

A New Standard for Enterprise AI and Safety

For enterprises, particularly those in regulated industries, the release is a landmark for AI safety and governance. The ability to inspect, audit, and self-host a powerful model is a critical requirement in sectors such as finance, healthcare, and legal tech, and it addresses major concerns about privacy and data sovereignty.

- Healthcare: Hospitals can deploy gpt-oss-120b on-premise to analyze patient data without it ever leaving their secure network.

- Finance: Financial institutions can build custom fraud detection and market analysis models that comply with strict data handling regulations.

- Legal Tech: Law firms can use these models for document review and analysis with full confidentiality.

This move provides a robust, auditable alternative both to proprietary black-box APIs and to models from regions with different data governance standards, and it is likely to influence the conversation around AI regulation.

Practical Applications and Best Practices

With these powerful new tools available, the focus now shifts to practical implementation and leveraging their full potential across various industries.

Real-World Use Cases

The immediate applications are broad. For instance, gpt-oss-120b is well suited to powering internal agents that automate complex business workflows, and to content creation such as marketing copy, reports, and technical documentation. Its strength as a code model means it can also be integrated into enterprise development pipelines for code generation, review, and debugging.

Meanwhile, gpt-oss-20b will fuel a new generation of smart applications. Imagine IDE plugins that provide instant, offline code completion, or marketing tools that offer real-time feedback on ad copy directly within a user’s browser.

Tips and Considerations for Deployment

While these models are highly accessible, effective deployment requires careful consideration. Here are some best practices:

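- Hardware Selection: For gpt-oss-120b, ensure your GPU has sufficient VRAM (80 GB recommended; the 4-bit weights alone need roughly 60 GB, so a 24 GB card is not enough without offloading). For gpt-oss-20b, plan for about 16 GB of memory; it can run on a CPU, but performance will be significantly better on a machine with a dedicated GPU or on Apple silicon, where inference engines can use the integrated GPU.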

- Choose the Right Inference Engine: Use optimized inference engines such as vLLM, TensorRT-LLM, or llama.cpp to maximize throughput and minimize latency.

- Fine-Tuning Pitfalls: When creating custom models, use parameter-efficient techniques such as LoRA (Low-Rank Adaptation) to fine-tune efficiently. Be mindful of “catastrophic forgetting,” where the model loses its general capabilities after being over-trained on a narrow dataset.

- Monitor Performance: Always track key metrics such as latency and throughput to ensure your application meets user expectations.
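The LoRA suggestion in the list above is easier to appreciate with numbers. The sketch below shows, under illustrative assumptions, how few parameters a low-rank adapter trains compared with full fine-tuning of one weight matrix, and how the adapter is added to the frozen weights in the forward pass. The 4096 x 4096 layer size, the rank of 8, and the helper names are hypothetical and not taken from gpt-oss.

```python
def lora_param_counts(d_in, d_out, rank):
    """Trainable parameters: full fine-tuning vs. a rank-r LoRA adapter."""
    full = d_in * d_out
    lora = rank * (d_in + d_out)  # A is (rank x d_in), B is (d_out x rank)
    return full, lora


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.4%}")

# With B initialised to zeros, the adapter leaves the base output unchanged.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, -0.5]]   # shape (rank, d_in) with rank 1
B = [[0.0], [0.0]]  # shape (d_out, rank), zero-initialised
y = lora_forward(W, A, B, [2.0, 3.0], alpha=16, rank=1)
print(y)
```

Because the base weights are never modified, the adapter can be removed or swapped at any time, which also offers one practical way to limit catastrophic forgetting: the original model remains available unchanged.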

Conclusion: The Future of GPT is Open

The release of gpt-oss-120b and gpt-oss-20b is a watershed moment in the evolution of artificial intelligence. It represents a bold step by OpenAI towards a more open, collaborative, and accessible AI future. By providing powerful, efficient, and auditable models, OpenAI has not only challenged its competitors but has also equipped the global community with the tools to build the next generation of AI-powered solutions. The move lowers economic barriers, strengthens enterprise safety and privacy, and is likely to catalyze innovation across many industries. The key takeaway is clear: the era of high-performance, open-source AI is not a distant dream; it is here now, and it promises a more democratized and dynamic future.