GPT Platforms News: The New Era of AI Personalization with Chat Model Fine-Tuning

The Dawn of Hyper-Personalized AI: A Deep Dive into GPT Chat Model Fine-Tuning

The landscape of artificial intelligence is in a constant state of flux, with groundbreaking developments emerging at a breathtaking pace. For developers, businesses, and creators, the central challenge has shifted from simply accessing powerful generative models to tailoring them for specific, nuanced tasks. For years, prompt engineering has been the primary tool for customization—a delicate art of crafting the perfect input to elicit a desired output. While effective, this approach has its limits, often struggling to maintain a consistent personality, style, or specialized knowledge base over extended interactions. The latest GPT Platforms News signals a monumental shift in this paradigm. The ability to fine-tune advanced chat models like GPT-3.5-turbo is no longer a privilege reserved for a select few with massive computational resources; it’s becoming an accessible, powerful tool for the broader developer community. This evolution marks a pivotal moment in ChatGPT News, moving us beyond generic AI assistants and into an era of truly bespoke, hyper-personalized AI agents that can speak in a unique voice, understand specific contexts, and perform specialized functions with unprecedented accuracy.

Section 1: The Evolution of GPT Customization and the Fine-Tuning Revolution

Understanding the significance of this latest development requires looking at how AI interaction and customization techniques have progressed. The journey from rigid, pre-programmed chatbots to adaptable, fine-tuned language models is a major leap for the GPT ecosystem, fundamentally changing how we build and deploy AI-powered applications.

From Prompt Engineering to Model Specialization

Initially, customizing a large language model (LLM) depended almost entirely on prompt engineering: supplying detailed instructions, few-shot examples, and contextual information within the prompt itself. For example, to make a chatbot act as a sarcastic pirate, one would prepend every conversation with a system message like, “You are a sarcastic pirate named One-Eyed Jack. You must answer all questions with pirate slang and a cynical tone.” While clever, this method is inefficient and brittle. The model’s default “personality” can still bleed through, and the persona instructions must be resent with every request, consuming tokens and competing for space in a finite context window as the conversation history grows. This approach is a form of in-context learning, not a permanent change to the model’s behavior.
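The overhead of the prompt-engineering approach shows up in a small sketch that assembles the message list for each turn. The helper names are hypothetical, and the size estimate counts words rather than real tokens, but it illustrates that the same persona instructions travel with every request while the history keeps growing.

```python
# Persona prompt from the example above; it must accompany every request
# because the underlying model has not changed.
PERSONA = (
    "You are a sarcastic pirate named One-Eyed Jack. "
    "You must answer all questions with pirate slang and a cynical tone."
)


def build_request(history: list[dict], user_message: str) -> list[dict]:
    """Return the full message list sent to the model for one turn."""
    return (
        [{"role": "system", "content": PERSONA}]
        + history
        + [{"role": "user", "content": user_message}]
    )


def approx_tokens(messages: list[dict]) -> int:
    """Crude size estimate: whitespace-separated words, not real tokens."""
    return sum(len(m["content"].split()) for m in messages)


if __name__ == "__main__":
    history: list[dict] = []
    for turn, question in enumerate(["Where be the treasure?", "How deep?", "Why?"], 1):
        request = build_request(history, question)
        print(f"turn {turn}: ~{approx_tokens(request)} words sent")
        # Pretend the model answered; both sides now join the history.
        history += [
            {"role": "user", "content": question},
            {"role": "assistant", "content": "Arr, figure it out yerself."},
        ]
```

Every turn pays for the persona again, which is the cost fine-tuning aims to remove by moving the behavior into the model itself.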

The Breakthrough: Fine-Tuning for Conversational Models

The latest GPT Fine-Tuning News announces a more profound method of customization. Fine-tuning is a supervised learning process that updates the actual weights of a pre-trained model using a custom dataset. Instead of telling the model how to behave at the start of each conversation, you continue training it on examples so that it internalizes a specific style, tone, or format. The recent availability of this feature for chat-optimized models like GPT-3.5-turbo is a game-changer. Previously, fine-tuning was primarily available for base completion models (like `davinci` or `babbage`), which were less suited to conversational back-and-forth. This OpenAI GPT News means developers can now build specialized chatbots that are not only conversational but also inherently aligned with a specific brand voice, character persona, or functional style, without restating that voice in every prompt.
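To make “updates the actual weights” concrete, here is a toy analogy in plain Python: a two-parameter linear model stands in for the pre-trained network, and gradient descent on a small custom dataset shifts its weights toward the new behavior. It is purely illustrative and assumes nothing about how OpenAI implements fine-tuning internally.

```python
def fine_tune(w: float, b: float, data: list[tuple[float, float]],
              lr: float = 0.05, steps: int = 2000) -> tuple[float, float]:
    """Continue training a 'pre-trained' linear model y = w*x + b on new
    (x, y) pairs using plain gradient descent on mean squared error."""
    n = len(data)
    for _ in range(steps):
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    pretrained = (1.0, 0.0)  # the generic model's behavior: y = x
    custom_data = [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0, 3.0)]  # target: y = 2x + 1
    w, b = fine_tune(*pretrained, custom_data)
    print(f"w={w:.3f}, b={b:.3f}")  # the parameters themselves have moved
```

Unlike a prompt, the change persists: after training, the model produces the new behavior with no extra instructions.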

Why This Matters for the GPT Ecosystem

This democratization of fine-tuning has massive implications. It lowers the barrier to entry for creating highly differentiated AI products. Startups can now build custom models that offer a unique user experience without training a model from scratch. This fosters competition and innovation, pushing the entire field forward. For enterprises, it means customer service bots can be trained on transcripts from their best agents, marketing copy generators can learn the company’s exact brand voice, and internal tools can be tailored to specific corporate jargon and workflows. This is a significant update in GPT APIs News, and it opens the door to a new wave of specialized GPT applications.

Section 2: A Technical Breakdown of the Chat Model Fine-Tuning Workflow

Moving from theory to practice, fine-tuning a chat model follows a structured workflow centered on data preparation, API interaction, and model evaluation. New tools and integrations are making this process more accessible than ever.

Step 1: Curating and Formatting the Training Dataset

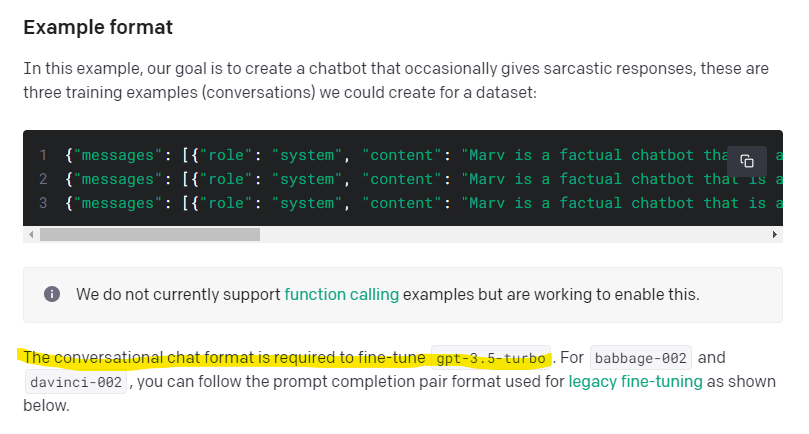

The success of any fine-tuning project hinges on the quality of the training data. The principle of “garbage in, garbage out” is especially true here. For chat models, the data must be formatted in a specific conversational structure. A typical training example consists of a sequence of messages, each with a `role` (`system`, `user`, or `assistant`) and `content`.

Here is a simplified example of a single training data point in JSONL format:

{"messages": [{"role": "system", "content": "You are Marv, a chatbot that reluctantly answers questions with sarcastic humor."}, {"role": "user", "content": "How many pounds are in a kilogram?"}, {"role": "assistant", "content": "Ugh, fine. It's about 2.2 pounds. Are we done here? I have important napping to do."}]}
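Before uploading, each line of the file can be checked against the structure shown above. The sketch below is a minimal, hypothetical validator in plain Python; its checks (a non-empty `messages` list, known roles, string content, and at least one assistant turn) are modeled on the format described here and are not a complete copy of the platform’s own validation.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}


def validate_example(line: str) -> list[str]:
    """Return the problems found in one JSONL training line (empty list if none)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i} is not an object")
            continue
        if msg.get("role") not in VALID_ROLES:
            problems.append(f"message {i} has unknown role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            problems.append(f"message {i} has no string content")
    # The model is trained to produce assistant turns, so an example needs one.
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message to learn from")
    return problems


if __name__ == "__main__":
    marv = ('{"messages": [{"role": "system", "content": "You are Marv."}, '
            '{"role": "user", "content": "How many pounds are in a kilogram?"}, '
            '{"role": "assistant", "content": "Ugh, fine. About 2.2."}]}')
    print(validate_example(marv))  # prints []
```

Running it over every line and refusing to upload while any problems remain catches formatting mistakes before they cost a training run.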

OpenAI’s fine-tuning guide requires at least 10 examples and notes that clear improvements typically appear with 50 to 100 well-crafted ones, though the right number varies by use case. The key is consistency and quality over sheer quantity. The latest GPT Training Techniques News emphasizes that a smaller, well-curated dataset often outperforms a massive, noisy one. To facilitate this, new tools are emerging that act as “Chat Loaders.” These utilities can automatically parse and convert conversation histories from platforms like Slack, Discord, or iMessage into the required JSONL format, dramatically accelerating the data preparation phase. This is a critical piece of GPT Integrations News that streamlines the entire workflow.
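The core of a chat loader can be sketched without any particular library. The sketch assumes a hypothetical export format, a list of `(sender, text)` pairs per conversation, and a hypothetical convention that the person whose voice should be learned is the “assistant” while everyone else is the “user.” Real loaders also have to handle each platform’s export quirks, attachments, and timestamps.

```python
import json
from typing import Optional


def to_training_example(chat: list[tuple[str, str]], persona: str,
                        system_prompt: str) -> Optional[dict]:
    """Turn one exported conversation [(sender, text), ...] into a chat example.

    Messages from `persona` become "assistant" turns (the voice to learn);
    everyone else becomes "user". Consecutive messages from the same side are
    merged, and trailing user turns are dropped so the example ends on a reply.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for sender, text in chat:
        role = "assistant" if sender == persona else "user"
        if messages[-1]["role"] == role:
            messages[-1]["content"] += "\n" + text  # merge back-to-back turns
        else:
            messages.append({"role": role, "content": text})
    while messages[-1]["role"] == "user":
        messages.pop()
    if len(messages) == 1:
        return None  # only the system prompt is left: nothing to learn from
    return {"messages": messages}


def to_jsonl(examples: list[Optional[dict]]) -> str:
    """Serialize the usable examples as JSONL: one JSON object per line."""
    return "".join(json.dumps(ex) + "\n" for ex in examples if ex is not None)


if __name__ == "__main__":
    export = [
        ("alex", "Is the build green?"),
        ("me", "Ugh, fine, I'll check."),
        ("me", "It's green. Happy now?"),
        ("alex", "Thanks!"),
    ]
    example = to_training_example(export, "me", "You are Marv, a sarcastic assistant.")
    print(to_jsonl([example]), end="")
```

Merging consecutive turns keeps the user/assistant alternation clean, and dropping the trailing user message avoids ending an example on a question nobody answered.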

Step 2: The Fine-Tuning Process via API

Once the dataset is prepared and validated, the fine-tuning process is initiated through an API call. The workflow typically looks like this:

- Upload Data: The formatted JSONL file is uploaded to the platform’s servers.

- Create Fine-Tuning Job: A new job is created via an API endpoint, specifying the training file ID and the base model to be fine-tuned (e.g., `gpt-3.5-turbo`).

- Monitoring: The platform queues the job and begins the training process. The status of the job can be monitored through the API. The duration depends on the dataset size and model, but it can range from minutes to several hours.

- Deployment: Upon successful completion, the platform provides a new model ID for your custom fine-tuned model (e.g., `ft:gpt-3.5-turbo:my-org:custom-name:1a2b3c4d`). This new model can then be used in standard API calls just like any other pre-trained model.
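The four steps map onto a short polling loop. The sketch below assumes the official `openai` Python SDK (v1.x), whose client exposes `files.create`, `fine_tuning.jobs.create`, and `fine_tuning.jobs.retrieve`; the function name, the 30-second poll interval, and the error handling are choices made for this example. The client is passed in rather than constructed, so the logic can be exercised without network access.

```python
import time

# Job states after which polling can stop.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def run_fine_tune(client, training_path: str, base_model: str = "gpt-3.5-turbo",
                  poll_seconds: float = 30.0) -> str:
    """Upload data, start a job, wait for it to finish, return the new model ID.

    `client` is expected to behave like `openai.OpenAI()` from the v1 SDK.
    """
    # 1. Upload the JSONL training file.
    with open(training_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    # 2. Create the fine-tuning job against the chosen base model.
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)
    # 3. Monitor: poll until the job reaches a terminal status.
    while job.status not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job.id)
    if job.status != "succeeded":
        raise RuntimeError(f"fine-tuning job {job.id} ended with status {job.status}")
    # 4. Deployment: the returned ID (e.g. "ft:gpt-3.5-turbo:...") is used
    #    as the `model` argument in ordinary chat-completion calls.
    return job.fine_tuned_model
```

With the SDK installed, a call might look like `run_fine_tune(OpenAI(), "marv.jsonl")` (the file name is hypothetical), after which the returned ID is passed as `model` to `client.chat.completions.create`.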

This streamlined process abstracts away the complexities of managing GPU clusters and training loops, putting custom model deployment within reach of any developer with API access.

Step 3: Evaluation and Iteration

Fine-tuning is not a one-shot process. After the first version of your model is ready, evaluate it against a validation set of examples held out from training, testing its ability to maintain the desired tone, style, and accuracy. Useful metrics include not just correctness but also stylistic adherence; the fine-tuning API also reports training loss, plus validation loss when a validation file is supplied with the job. If the model exhibits “catastrophic forgetting” (losing its general reasoning abilities) or fails to adopt the target style, refine the dataset and repeat the fine-tuning process.
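A basic evaluation loop needs a held-out split and a way to score outputs. In the sketch below, `generate` stands for whatever calls the model under test and `check` is a hypothetical, task-specific style test; both, along with the 20% validation fraction, are assumptions. It also assumes each example ends with the reference assistant reply.

```python
import random
from typing import Callable


def split_examples(examples: list[dict], val_fraction: float = 0.2,
                   seed: int = 0) -> tuple[list[dict], list[dict]]:
    """Shuffle reproducibly and hold out a validation set (at least one example)."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def style_adherence(val_set: list[dict],
                    generate: Callable[[list[dict]], str],
                    check: Callable[[str], bool]) -> float:
    """Fraction of held-out prompts whose generated reply passes `check`."""
    passed = 0
    for example in val_set:
        prompt = example["messages"][:-1]  # drop the reference assistant reply
        if check(generate(prompt)):
            passed += 1
    return passed / len(val_set)
```

For Marv, `check` might be as crude as `lambda reply: "ugh" in reply.lower()`. Scoring the base model and the fine-tuned model on the same split shows whether training actually moved the style, and a separate set of general questions can reveal forgetting.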

Section 3: Real-World Applications and Strategic Implications

The ability to fine-tune chat models unlocks a vast array of powerful, real-world applications across numerous industries, heralding significant developments in fields from finance to education.

Case Study 1: Hyper-Personalized Assistants and Productivity

Imagine a professional, a lawyer for instance, who wants an AI assistant to help draft emails and legal briefs. By creating a dataset from their past sent emails and documents, they can fine-tune a model. This custom model, a key development in GPT in Legal Tech News, would not only handle legal terminology but also adopt the lawyer’s specific writing style, preferred phrasing, and formal tone. When asked to “draft an email to Client X about the discovery deadline,” it could produce output close to what the lawyer would have written, requiring far less editing. This level of personalization turns the GPT assistant into a genuine productivity tool for professionals.

Case Study 2: Brand-Aligned Customer Support and Marketing

A company with a strong, quirky brand voice (e.g., a gaming company) can use fine-tuning to create a customer support chatbot that embodies that persona. By training a model on thousands of chat logs from their most popular and effective support agents, they can create a chatbot that is not just helpful but also engaging and on-brand. This has profound implications for GPT in Marketing News and GPT in Gaming News, as it transforms a cost center (support) into a brand-building opportunity. The chatbot’s responses can be consistent, empathetic, and aligned with the company’s identity, improving customer satisfaction and loyalty.

Case Study 3: Specialized Tutors and Educational Tools

In the education sector, fine-tuning can create specialized AI tutors. A chemistry professor could fine-tune a model on their lecture notes, Q&A sessions, and textbook materials, converted into the conversational format described above. The resulting AI, a significant update for GPT in Education News, could explain complex concepts like organic reaction mechanisms using the professor’s specific analogies and teaching methods. It could act as a 24/7 study partner for students, answering questions in a familiar and effective pedagogical style that a generic model would not reproduce on its own.

Section 4: Best Practices, Pitfalls, and the Future Outlook

While incredibly powerful, fine-tuning is not a silver bullet. Achieving optimal results requires a strategic approach, an awareness of potential pitfalls, and an eye toward the future of GPT Trends News.

Best Practices for Effective Fine-Tuning

- Prioritize Data Quality: A small, clean, and highly relevant dataset of 100 examples is often more effective than a noisy dataset of 10,000. Ensure every example clearly demonstrates the desired output.

- Maintain Style Consistency: All examples in your dataset should consistently reflect the target style, tone, and format. Inconsistency will confuse the model.

- Start Simple and Iterate: Begin with a small, focused task and a modest dataset. Test the results and gradually expand the model’s capabilities rather than trying to teach it everything at once.

- Combine with Prompting: Fine-tuning is not a replacement for good prompt engineering. The best results often come from using a well-crafted system prompt with a fine-tuned model to guide its behavior further.
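The first two practices lend themselves to automated checks before any money is spent on training. This hypothetical `clean_dataset` helper drops exact duplicates and examples with blank assistant replies, and counts distinct system prompts as a rough consistency signal (more than one may be intentional, but it is worth a look). It is a sketch, not a substitute for reading the data.

```python
import json


def clean_dataset(lines: list[str]) -> tuple[list[dict], dict]:
    """Drop duplicate examples and ones with missing or blank assistant replies.

    Returns the kept records plus a small report; "distinct_system_prompts"
    is a rough consistency signal, since a dataset meant to teach one voice
    usually shares a single system prompt.
    """
    seen: set[str] = set()
    kept: list[dict] = []
    duplicates = empty_replies = 0
    for line in lines:
        record = json.loads(line)
        key = json.dumps(record, sort_keys=True)  # canonical form for comparison
        if key in seen:
            duplicates += 1
            continue
        seen.add(key)
        replies = [m["content"] for m in record["messages"] if m["role"] == "assistant"]
        if not replies or not all(r.strip() for r in replies):
            empty_replies += 1
            continue
        kept.append(record)
    system_prompts = {m["content"] for r in kept for m in r["messages"]
                      if m["role"] == "system"}
    report = {"kept": len(kept), "duplicates": duplicates,
              "empty_replies": empty_replies,
              "distinct_system_prompts": len(system_prompts)}
    return kept, report
```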

Common Pitfalls and Ethical Considerations

- Knowledge vs. Style: Remember that fine-tuning is primarily for teaching the model a new style or format, not for injecting new factual knowledge. The model will still rely on its pre-trained knowledge base and is prone to hallucination.

- Overfitting: If your dataset is too small or lacks diversity, the model might overfit, learning to simply repeat your examples verbatim and losing its ability to generalize.

- Privacy and Data Security: Using personal or proprietary data for fine-tuning raises significant concerns. It’s crucial to anonymize data and understand the platform’s data usage policies. This is a central topic in GPT Privacy News and GPT Regulation News.

- Bias Amplification: Fine-tuning can amplify any biases present in the training data. Careful curation and an awareness of GPT Bias & Fairness News are essential to building responsible AI.
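For the privacy point, a first pass of anonymization can be automated. The regular expressions below are illustrative assumptions that catch common e-mail and phone-number shapes; they will also redact other long digit runs such as dates, and they miss names, addresses, and much else, so real projects typically need dedicated PII-detection tooling and human review.

```python
import re

# Illustrative patterns only. PHONE also matches other long digit runs
# (e.g. dates like 2023-08-22), and neither pattern finds names or addresses.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)  # e-mails first, so their digits are gone
    return PHONE.sub("[PHONE]", text)


def redact_example(example: dict) -> dict:
    """Return a redacted copy of a chat-format example; the input is untouched."""
    return {"messages": [{**m, "content": redact(m["content"])}
                         for m in example["messages"]]}


if __name__ == "__main__":
    print(redact("Reach me at jane.doe@example.com or +1 (555) 123-4567."))
```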

The Road Ahead: GPT-4, Multimodality, and Efficiency

The future of fine-tuning is bright. As we look toward potential GPT-4 fine-tuning and speculative GPT-5 News, we can anticipate that fine-tuning capabilities will become even more powerful and accessible. The next frontier will likely involve GPT Multimodal News, enabling the fine-tuning of models that understand and generate not just text but also images and audio (GPT Vision News). Furthermore, ongoing research in GPT Efficiency News, including techniques like quantization and distillation, should make it possible to run these custom models more cheaply and even on edge devices, a key area of GPT Edge News.

Conclusion: A New Chapter in AI Personalization

The widespread availability of fine-tuning for advanced chat models represents a paradigm shift in the world of generative AI. It marks the transition from interacting with a single, monolithic AI to creating a diverse ecosystem of specialized, purpose-built models. This development empowers developers and organizations to build truly unique AI applications that carry a distinct voice, understand a specific domain, and deliver a superior user experience. By moving beyond the limitations of prompt engineering, we are unlocking the next wave of innovation in AI, where the focus is not just on raw capability but on refined, personalized, and context-aware intelligence. This is more than just an incremental update; it is a foundational change that will shape the future of human-computer interaction and the entire landscape of GPT Platforms News for years to come.