Breaking Barriers: The Latest in GPT Language Support and Multilingual AI News

In our increasingly interconnected world, the ability to communicate and operate across linguistic boundaries is no longer a luxury but a fundamental necessity. For decades, the digital language barrier has posed a significant challenge, creating friction in global commerce, scientific collaboration, and cultural exchange. However, a profound transformation is underway, driven by rapid advancements in Generative Pre-trained Transformer (GPT) models. Initially developed with a strong focus on English, these powerful AI systems are evolving into sophisticated multilingual powerhouses, capable of understanding, generating, and reasoning in dozens of languages. This evolution represents a paradigm shift, moving beyond simple translation to enable true cross-lingual understanding and interaction. This article delves into the latest GPT Language Support News, exploring the technical innovations, real-world applications, and future trends that are dismantling language barriers and paving the way for a more globally integrated digital ecosystem.

The Expanding Linguistic Horizons of GPT Models

The journey of large language models (LLMs) from monolingual curiosities to multilingual workhorses has been remarkably swift. This progress is not a single breakthrough but the result of concerted efforts in data collection, architectural refinement, and a strategic shift in the goals of AI development. The latest GPT Models News consistently highlights enhanced performance across a growing spectrum of languages, signaling a new era of global AI accessibility.

From Monolingual Roots to Global Fluency

Early iterations of GPT models were predominantly trained on English-language text, a reflection of the readily available data on the internet. While impressive, their utility was limited in a global context. The significant leap forward seen with GPT-3.5 and, more dramatically, GPT-4 is widely attributed to the inclusion of far larger multilingual datasets during the pre-training phase. This shift has given these models the ability to process and generate text in languages ranging from Spanish and Mandarin to Swahili and Hindi, often with surprising fluency. It also answers the global demand for AI solutions that can serve diverse user bases, opening up markets and applications that were previously out of reach.

The Role of Massive, Diverse Datasets

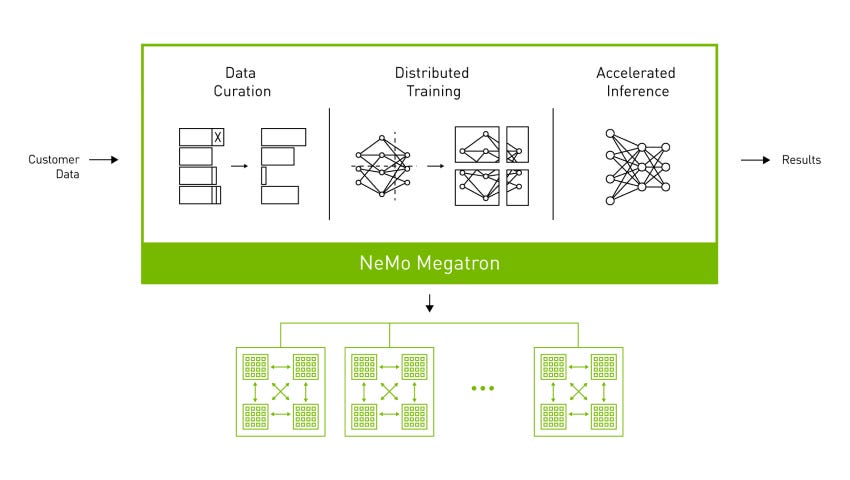

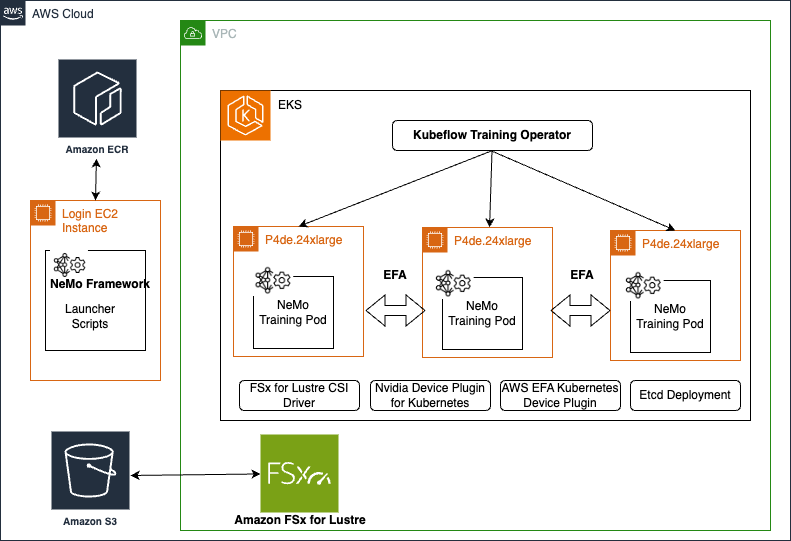

At the heart of any powerful language model is its training data. The secret to robust multilingual capability lies in training on a corpus that reflects the world’s linguistic diversity. According to the latest GPT Datasets News, model developers are increasingly curating petabytes of text from multilingual sources like Common Crawl, Wikipedia, digitized books, and public code repositories. However, a significant challenge remains: data imbalance. High-resource languages with a vast digital footprint naturally dominate these datasets, while low-resource languages are underrepresented. This disparity can lead to performance gaps, a critical issue that researchers are actively addressing through techniques like data augmentation and transfer learning to bolster support for less common languages.
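
To make the imbalance concrete, here is a minimal sketch of one common way to soften it when sampling training data: exponent-smoothed (temperature-based) sampling. This technique is not named in the discussion above and is swapped in purely for illustration. The corpus sizes, the `alpha` value, and the function name `sampling_weights` are all hypothetical.

```python
def sampling_weights(doc_counts, alpha=0.3):
    """Return per-language sampling probabilities proportional to count**alpha.

    alpha=1.0 reproduces the raw data distribution; smaller values flatten it,
    so low-resource languages are drawn more often than their raw share.
    """
    scaled = {lang: count ** alpha for lang, count in doc_counts.items()}
    total = sum(scaled.values())
    return {lang: value / total for lang, value in scaled.items()}

# Hypothetical corpus sizes (in documents), chosen only to illustrate the skew.
counts = {"en": 1_000_000, "es": 200_000, "sw": 5_000}

raw = sampling_weights(counts, alpha=1.0)
smoothed = sampling_weights(counts, alpha=0.3)

for lang in counts:
    print(f"{lang}: raw share {raw[lang]:.3f} -> smoothed share {smoothed[lang]:.3f}")
```

Smoothing does not create new data for an underrepresented language. It only changes how often the existing data is seen, which is why it is usually combined with the augmentation and transfer approaches mentioned above.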

Beyond Translation: True Cross-Lingual Understanding

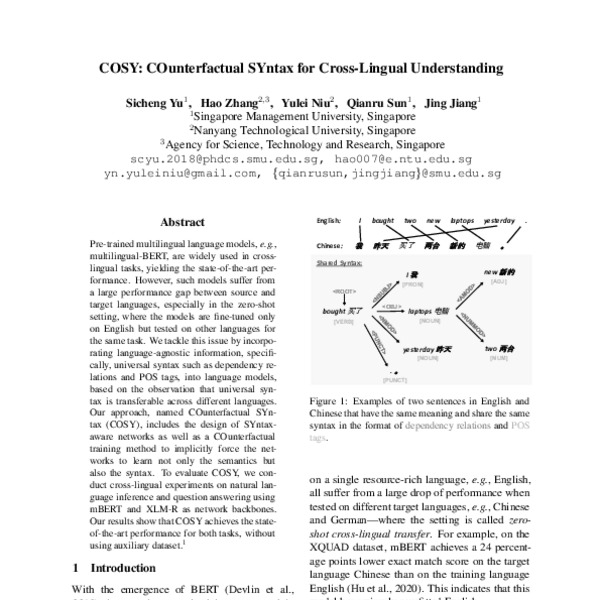

Modern multilingual GPTs are doing far more than just translating text from one language to another. The most exciting developments in GPT Cross-Lingual News revolve around their ability to perform complex tasks directly in various languages. This includes sentiment analysis of Japanese product reviews, summarizing German legal documents, or generating Python code from instructions written in French. This is made possible by a phenomenon known as cross-lingual transfer, where the model leverages its understanding of concepts and reasoning learned from high-resource languages and applies them to tasks in low-resource languages. This zero-shot or few-shot capability is a testament to the model’s generalized linguistic intelligence, allowing it to perform tasks it was never explicitly trained for in a specific language.
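
As a rough illustration of the few-shot case, the sketch below assembles a prompt whose demonstrations are in English while the review to classify is in Japanese. It only builds the text; no model is called, and whether a given model answers correctly is not something this snippet can show. The function name, example reviews, and prompt wording are all made up for the example.

```python
def build_few_shot_prompt(examples, query):
    """Assemble a few-shot classification prompt from (text, label) pairs."""
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    # The final item is left unlabeled for the model to complete.
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)

# Demonstrations in a high-resource language (English).
english_examples = [
    ("The battery lasts all day and the screen is gorgeous.", "positive"),
    ("It stopped working after a week.", "negative"),
]

# Query in a different language (Japanese): "The sound quality is wonderful
# and I am very satisfied."
japanese_review = "音質が素晴らしく、とても満足しています。"

prompt = build_few_shot_prompt(english_examples, japanese_review)
print(prompt)
```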

Under the Hood: The Architecture of Multilingual GPTs

Achieving genuine multilingual proficiency in a single AI model is a monumental feat of engineering. It requires sophisticated architectural designs and training techniques that allow the model to build a unified, language-agnostic representation of knowledge. Understanding these technical underpinnings is key to appreciating the current capabilities and future potential of GPT models.

Shared Vocabulary and Tokenization Strategies

A foundational challenge in multilingual modeling is tokenization, the process of breaking text into smaller units (tokens) that the model can process. As covered in recent GPT Tokenization News, using a separate vocabulary for each language is inefficient and prevents the model from learning shared concepts. The solution lies in sub-word tokenization algorithms such as Byte-Pair Encoding (BPE), often applied through toolkits like SentencePiece. These methods build a single, unified vocabulary of sub-word units shared across languages. For instance, fragments such as “inter-” or “-tion” recur in English, French, and German, which share a large stock of Latin-derived vocabulary. By tokenizing at this sub-word level, the model can reuse what it has learned about a fragment in one language when it meets the same fragment in another, making learning more efficient and enabling better performance on languages it has seen less frequently.
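
The idea of a shared sub-word vocabulary can be sketched with a toy BPE trainer. This is a simplified, character-level illustration written for this article, not the byte-level tokenizer any production GPT model uses. The tiny corpus and the number of merges are arbitrary assumptions.

```python
from collections import Counter

def count_pairs(words):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs

def apply_merge(symbols, pair):
    """Fuse every adjacent occurrence of `pair` in a symbol sequence."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def train_bpe(corpus, num_merges):
    """Learn an ordered list of merges from a list of words."""
    words = Counter(tuple(word) for word in corpus)
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(words)
        if not pairs:
            break
        # Most frequent pair wins; ties are broken by comparing the pairs
        # themselves so the result is deterministic.
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        merged_words = Counter()
        for symbols, freq in words.items():
            merged_words[apply_merge(symbols, best)] += freq
        words = merged_words
    return merges

def encode(word, merges):
    """Tokenize a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)

# Toy corpus: words spelled identically in English and French.
corpus = ["nation", "information", "station", "formation",
          "nation", "information", "tradition", "international"]
merges = train_bpe(corpus, num_merges=8)

for word in ["nation", "information", "education"]:
    print(word, "->", encode(word, merges))
```

Because the merges are learned from the pooled corpus, a word that never appeared in training, such as "education", can still be split into pieces that were learned from other words.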

The Transformer Architecture’s Language-Agnostic Power

The core innovation behind GPT is the Transformer architecture, and recent GPT Architecture News continues to reinforce its remarkable flexibility. The self-attention mechanism, the engine of the Transformer, makes no assumptions about any particular language. It learns contextual relationships between tokens by weighing their importance relative to each other, rather than relying on hand-written grammatical rules. This allows the model to pick up patterns that recur across languages, such as how a verb relates to its subject and object or how negation changes meaning, even when word order differs from one language to the next. This abstract, pattern-based understanding is what enables the powerful cross-lingual transfer capabilities that define modern multilingual systems.
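
A minimal sketch of single-head scaled dot-product self-attention shows why the mechanism is indifferent to language: it only ever sees vectors. To keep it short, the query, key, and value projections are assumed to be the identity (a trained model uses learned matrices), and the embeddings are invented numbers.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings, causal=True):
    """Single-head scaled dot-product self-attention.

    For brevity the query, key, and value projections are the identity;
    a trained model would apply learned weight matrices here.
    """
    dim = len(embeddings[0])
    all_weights, outputs = [], []
    for i, query in enumerate(embeddings):
        scores = []
        for j, key in enumerate(embeddings):
            if causal and j > i:
                # GPT-style causal mask: a token cannot attend to later tokens.
                scores.append(float("-inf"))
            else:
                dot = sum(q * k for q, k in zip(query, key))
                scores.append(dot / math.sqrt(dim))
        weights = softmax(scores)
        all_weights.append(weights)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * value[d] for w, value in zip(weights, embeddings))
                        for d in range(dim)])
    return all_weights, outputs

# Hypothetical 4-dimensional embeddings for three tokens. Nothing here encodes
# which language the tokens came from.
token_vectors = [[1.0, 0.0, 1.0, 0.0],
                 [0.0, 1.0, 0.0, 1.0],
                 [1.0, 1.0, 0.0, 0.0]]

attn_weights, attn_outputs = self_attention(token_vectors)
for row in attn_weights:
    print([round(w, 3) for w in row])
```

Each printed row is a probability distribution over the positions that token is allowed to attend to. Grammar is never hard-coded: any regularity the model captures has to be learned into the vectors and projection weights.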

Fine-Tuning and Customization for Specific Languages

While large, pre-trained models offer impressive general multilingual abilities, achieving state-of-the-art performance for specific, high-value applications often requires further specialization. This is where the latest GPT Fine-Tuning News becomes critical. A base multilingual model can be fine-tuned on a smaller, high-quality dataset for a particular language or domain. For example, a global financial institution can build a custom model, a recurring theme in GPT Custom Models News, by fine-tuning on its proprietary dataset of Japanese financial reports to create a more accurate analysis tool. This process adapts the model’s general knowledge to the specific vocabulary, syntax, and nuances of the target domain, which can substantially improve its reliability and usefulness. It is one of the most practical approaches covered in GPT Training Techniques News for enterprises looking to deploy specialized AI solutions.
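
In practice, much of the work in fine-tuning is preparing the dataset. The sketch below formats example pairs as JSON Lines in a chat-style layout, a shape that hosted fine-tuning services commonly accept. The exact schema a given provider requires is an assumption here and should be checked against its documentation. The system prompt, the sample sentences, and the helper name `to_chat_record` are invented for illustration.

```python
import json

def to_chat_record(report_text, analysis):
    """Wrap one (input, target) pair in a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": "You analyze Japanese financial reports."},
            {"role": "user", "content": report_text},
            {"role": "assistant", "content": analysis},
        ]
    }

# Hypothetical training pairs; a real dataset would hold many reviewed examples.
pairs = [
    ("売上高は前年比10%増加した。", "Revenue rose 10% year over year."),
    ("営業利益は減少した。", "Operating profit declined."),
]

# One JSON object per line; ensure_ascii=False keeps the Japanese text readable
# instead of escaping it to \uXXXX sequences.
jsonl = "\n".join(
    json.dumps(to_chat_record(source, target), ensure_ascii=False)
    for source, target in pairs
)
print(jsonl)
```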

From Theory to Practice: Multilingual GPTs in Action

The impact of enhanced GPT language support is being felt across virtually every industry. By breaking down communication barriers, these models are enabling new efficiencies, creating novel user experiences, and unlocking access to global markets. The latest GPT Applications News is filled with examples of how this technology is being deployed to solve real-world problems.

Global Customer Support and Hyper-Personalization

One of the most immediate applications is in customer service. Companies can now deploy AI-powered chatbots and assistants, a staple of GPT Chatbots News and GPT Assistants News, that interact with customers in their native language, 24/7. A global e-commerce platform, for instance, can use a single multilingual GPT model to power its support system across dozens of countries. Such a system can handle many local dialects, cultural nuances in politeness, and product-specific jargon, providing a seamless and personalized experience that was previously difficult to scale. This not only improves customer satisfaction but can also reduce the operational costs associated with maintaining multiple region-specific support teams.

Content Creation and Marketing on a Global Scale

In the creative industries, multilingual GPTs are changing how content is produced and distributed. The latest GPT in Marketing News shows that companies are moving beyond clunky, literal translations. Instead, they use AI to perform “transcreation”, that is, adapting marketing messages to resonate with the cultural context of a target audience. A model can generate ad copy, social media posts, and blog articles that feel authentic and native to each market. As GPT in Content Creation News regularly notes, this allows brands to maintain a consistent global identity while speaking with a relevant local voice.

Breaking Down Barriers in Specialized Fields

The benefits extend deep into specialized professional domains:

- GPT in Legal Tech News: International law firms are using multilingual models to analyze contracts and case law from different jurisdictions, quickly identifying key clauses and potential conflicts without requiring a team of human translators for initial discovery.

- GPT in Healthcare News: Researchers can use AI to summarize and synthesize medical studies published in various languages, accelerating the pace of scientific discovery. Clinicians can use it to communicate more effectively with patients who speak a different language, ensuring better care.

- GPT in Education News: Educational platforms can create personalized learning materials that adapt to a student’s native language and cultural background, making high-quality education more accessible worldwide.

Navigating the Multilingual Maze: Challenges and Future Directions

Despite the incredible progress, the path to a truly seamless multilingual AI future is not without its obstacles. Addressing these challenges is the focus of current research and will define the next generation of GPT models.

The Persistent Challenge of Low-Resource Languages

The “digital language divide” remains a significant issue. Models are still far more proficient in high-resource languages like English and Chinese than in languages with a smaller digital footprint, such as many indigenous or regional languages. The latest GPT Research News is heavily focused on developing novel techniques, like advanced transfer learning and self-supervised methods, to improve performance on low-resource languages with limited training data. Closing this gap is essential for ensuring equitable access to AI technology globally.

Cultural Nuance and Bias: The Final Frontier

Language is deeply intertwined with culture. A model that is fluent may still fail if it does not grasp cultural context, idioms, humor, and social etiquette. Furthermore, as highlighted in GPT Bias & Fairness News, models trained on internet data can inadvertently learn and perpetuate harmful stereotypes and biases present in that data. A key area of GPT Ethics News involves developing methods to detect and mitigate these biases across different cultural contexts, ensuring that AI tools are fair, respectful, and safe for all users.

The Road Ahead: What’s Next in GPT Multilingual News?

The future of multilingual AI looks bright, with several key trends on the horizon. The community eagerly awaits GPT-5 News, with expectations of more deeply integrated and balanced multilingual capabilities built in from the ground up. The rise of multimodal systems, tracked in GPT Multimodal News, points to models that will understand language in the context of images, audio, and video, a capability that will be crucial for true cross-cultural understanding. As covered in GPT Vision News, a model could soon explain a culturally specific visual meme in any language. Furthermore, the push toward on-device deployment reported in GPT Edge News, together with the techniques covered in GPT Optimization News, such as quantization and distillation, will be vital for running these powerful multilingual models on local devices with the low latency that real-time applications like live translation require. A vibrant open-source community and healthy competition from other model developers will continue to accelerate this innovation, creating a richer and more diverse GPT ecosystem for everyone.
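
To give a sense of what quantization involves, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. It is a toy, per-tensor version. Production toolchains use more elaborate schemes, and the weight values below are invented.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]

# Hypothetical weights from one layer of a model.
layer_weights = [0.42, -1.37, 0.05, 0.88, -0.61]

quantized, scale = quantize_int8(layer_weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(layer_weights, restored))

print(quantized, f"scale={scale:.5f}", f"max error={max_error:.5f}")
```

Each weight is stored as a small integer plus one shared scale, which takes roughly a quarter of the memory of 32-bit floats. The cost is a rounding error of at most half the scale per weight.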

Conclusion

The rapid expansion of GPT language support is one of the most significant developments in artificial intelligence today. We have moved from an English-centric AI landscape to one where multilingual capabilities are becoming a standard expectation. Driven by architectural innovations like the Transformer, sophisticated tokenization strategies, and the use of vast, diverse datasets, these models are actively dismantling long-standing communication barriers. The real-world impact is already profound, transforming global customer service, content creation, and specialized professional fields. While challenges related to low-resource languages and cultural bias remain, the trajectory is clear. The ongoing research and development in the field promise a future where AI serves as a universal translator and a bridge between cultures, fostering greater understanding and collaboration on a global scale. The latest GPT Trends News confirms that we are just at the beginning of this exciting journey.