GPT in Healthcare: How AI is Revolutionizing Cancer Screening and Treatment Planning

The Dawn of a New Era: AI-Powered Precision in Healthcare

The healthcare industry is currently navigating a data tsunami. Clinicians are inundated with an ever-growing volume of patient information, from electronic health records (EHRs) and lab results to genomic data and medical imaging. This information overload creates a significant administrative burden, diverting valuable time away from direct patient care and complex clinical decision-making. However, a transformative shift is underway, driven by advances in artificial intelligence. Recent developments show that Generative Pre-trained Transformer (GPT) models, particularly sophisticated versions like GPT-4o, are moving beyond general-purpose chatbots and are being honed into specialized clinical copilots. These AI assistants are poised to revolutionize workflows in high-stakes fields like oncology, promising to streamline processes, enhance decision support, and ultimately personalize patient care at scale. This article explores the application of GPT technology to cancer screening and pretreatment planning, covering the underlying technology, the implementation challenges, and the implications for the future of medicine.

Section 1: Automating Complexity: GPT’s Role in the Oncology Workflow

The journey of a cancer patient is incredibly complex, involving numerous data points that must be meticulously collected, analyzed, and synthesized into a coherent care plan. A clinician must review a patient's medical history, family history, lab results, pathology reports, and genetic markers, all while cross-referencing this information with ever-evolving clinical guidelines from bodies like the National Comprehensive Cancer Network (NCCN). This process is not only time-consuming but also prone to human error. Recent applications show AI being deployed to tackle exactly this challenge, acting as an engine for data synthesis and workflow automation.

From Unstructured Data to Actionable Clinical Insights

One of the greatest strengths of modern language models is their ability to understand and structure unstructured text. A significant portion of a patient's record consists of narrative notes, specialist reports, and discharge summaries. GPT models can ingest this text and extract key clinical concepts, such as diagnoses, medications, allergies, and significant past procedures. Using GPT APIs, healthcare platforms can build integrations that securely feed this data into the model. The AI then functions as an intelligent pre-processor, organizing a chaotic stream of information into a structured, longitudinal view of the patient's health. This initial step alone can save clinicians substantial time on manual chart review, letting them move directly to higher-level analysis. Ongoing architectural advances point to even more efficient models capable of handling larger contexts and more diverse data types.
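To make the extraction step concrete, here is a minimal sketch in Python, assuming the model is asked to reply with JSON. The schema, the names `ClinicalConcepts`, `build_extraction_prompt`, and `parse_extraction`, and the sample reply are all hypothetical, and no API is called. What it illustrates is that the model's output should be validated before it is written into a patient's structured record.

```python
import json
from dataclasses import dataclass, field

# Hypothetical schema mirroring the concepts named above.
REQUIRED_KEYS = ("diagnoses", "medications", "allergies", "procedures")


@dataclass
class ClinicalConcepts:
    diagnoses: list[str] = field(default_factory=list)
    medications: list[str] = field(default_factory=list)
    allergies: list[str] = field(default_factory=list)
    procedures: list[str] = field(default_factory=list)


def build_extraction_prompt(note: str) -> str:
    """Ask the model to return only a JSON object with a fixed set of keys."""
    keys = ", ".join(REQUIRED_KEYS)
    return (
        "Extract clinical concepts from the note below. "
        f"Respond with a single JSON object whose keys are: {keys}. "
        "Each value must be a list of strings; use [] if nothing is found.\n\n"
        f"NOTE:\n{note}"
    )


def parse_extraction(raw: str) -> ClinicalConcepts:
    """Validate the model's reply before it enters the patient timeline."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [k for k in REQUIRED_KEYS if k not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    for key in REQUIRED_KEYS:
        value = data[key]
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise ValueError(f"{key} must be a list of strings")
    return ClinicalConcepts(**{k: data[k] for k in REQUIRED_KEYS})


# Stand-in for a model reply; a real system would obtain this from an API call.
reply = (
    '{"diagnoses": ["type 2 diabetes"], "medications": ["metformin"], '
    '"allergies": ["penicillin"], "procedures": []}'
)
concepts = parse_extraction(reply)
print(concepts.medications)
```

Rejecting malformed replies outright, rather than trying to repair them, keeps bad extractions out of the longitudinal record; a real pipeline would log the failure and route the note back for manual review.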

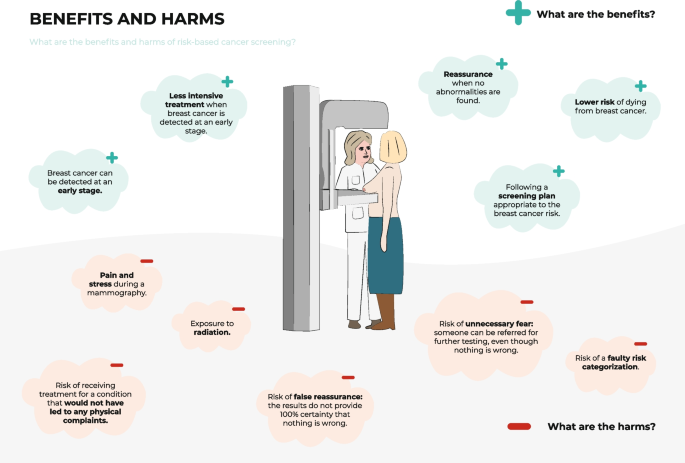

AI-Generated Screening and Pretreatment Plans: A Clinical Copilot

With a structured understanding of the patient's data, the GPT model can then execute its primary function: generating a preliminary care plan. In the context of cancer screening, the AI can analyze a patient's risk factors (e.g., age, family history, genetic predispositions, lifestyle factors) and compare them against established guidelines to recommend an appropriate screening schedule (e.g., mammograms, colonoscopies). For a newly diagnosed patient, the model can generate a comprehensive pretreatment checklist. This might include identifying necessary biomarker tests, recommending specific imaging scans, and outlining required specialist consultations before a treatment like chemotherapy or radiation can begin. Crucially, a well-designed system acts as a copilot, not an autopilot. The AI-generated plan is presented to the clinician as a draft, complete with citations and links back to the source guidelines and patient data it used for its reasoning. This transparency allows the physician to quickly verify the AI's work, make necessary adjustments based on clinical judgment, and finalize the plan. This human-in-the-loop approach is a cornerstone of responsible AI deployment and a recurring theme in discussions of AI ethics.
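The copilot pattern can be expressed as a data structure that refuses to become final until every item is traceable to a source and a clinician has signed off. The sketch below is an illustration under stated assumptions: `PlanItem`, `DraftPlan`, and the citation strings are hypothetical placeholders, not real guideline identifiers or any particular vendor's design.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PlanItem:
    action: str               # proposed step, e.g. a test or consultation
    guideline_ref: str        # pointer to the guideline passage relied on
    evidence_refs: list[str]  # pointers to the patient data relied on


@dataclass
class DraftPlan:
    patient_id: str
    items: list[PlanItem] = field(default_factory=list)
    approved_by: Optional[str] = None

    def uncited_items(self) -> list[PlanItem]:
        """Items the clinician could not trace back to a source."""
        return [i for i in self.items if not i.guideline_ref or not i.evidence_refs]

    def finalize(self, clinician_id: str) -> None:
        """Mark the plan final only after a named clinician signs off."""
        if not clinician_id:
            raise PermissionError("a clinician must sign off on the plan")
        unsupported = self.uncited_items()
        if unsupported:
            raise ValueError(f"{len(unsupported)} item(s) lack citations")
        self.approved_by = clinician_id

    @property
    def is_final(self) -> bool:
        return self.approved_by is not None


plan = DraftPlan(
    "patient-001",
    [
        PlanItem("Order biomarker testing", "guideline:sec-ref", ["pathology:report-1"]),
        PlanItem("Schedule staging imaging", "", []),  # model gave no source
    ],
)
try:
    plan.finalize("clinician-42")
except ValueError as err:
    print("blocked:", err)

# The clinician edits the draft (here: drops the unsupported item), then signs off.
plan.items = [i for i in plan.items if i not in plan.uncited_items()]
plan.finalize("clinician-42")
print(plan.is_final)
```

Making sign-off a required state transition, rather than a UI convention, means no code path can treat an unreviewed draft as a final plan.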

Section 2: The Technology Underpinning Medical AI Assistants

The successful application of GPT in a clinical setting is not a simple matter of plugging into a public API. It requires a sophisticated technological stack that prioritizes accuracy, safety, and relevance. Recent OpenAI releases, particularly multimodal models like GPT-4o, are paving the way for more powerful and context-aware medical AI tools.

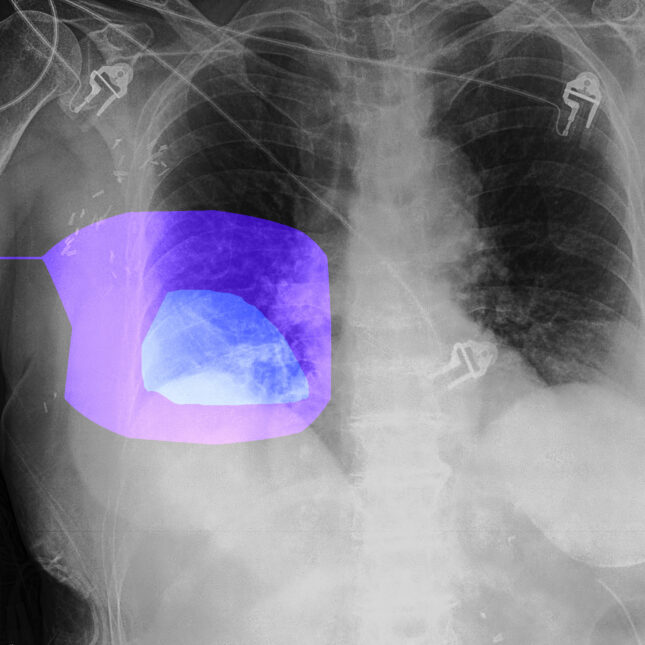

Harnessing Multimodal Capabilities for a Holistic Patient View

Modern healthcare is inherently multimodal. A patient's story is told through text (doctors' notes), numbers (lab values), and images (X-rays, CT scans, pathology slides). The advent of models that can process and reason across these data types is a game-changer. Models like GPT-4o can analyze a radiologist's written report together with the corresponding medical image, or correlate structured lab data with a physician's narrative assessment. This vision capability allows the AI to form a more comprehensive and nuanced picture of the patient's condition than a text-only model could. Future model generations may bring even tighter integration of these modalities, enabling AI assistants to surface subtle correlations that a human reviewer working under pressure might miss.
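As a sketch of what "text plus image in one request" looks like in practice, the function below assembles a message list in the Chat Completions content-part format (a `text` part and an `image_url` part carrying a base64 data URL). It only builds the payload and sends nothing. The function name, the system instruction, the lab names, and the placeholder image bytes are hypothetical; a real pipeline would also need to convert DICOM studies to a supported image format and run under a BAA-covered configuration.

```python
import base64
import json


def build_multimodal_messages(
    report_text: str, image_png: bytes, labs: dict[str, float]
) -> list[dict]:
    """Combine a narrative report, structured labs, and an image into one user turn."""
    encoded = base64.b64encode(image_png).decode("ascii")
    lab_lines = "\n".join(f"{name}: {value}" for name, value in sorted(labs.items()))
    return [
        {
            # Hypothetical instruction keeping the model in a summarizing role.
            "role": "system",
            "content": "Summarize findings for clinician review. Do not give a diagnosis.",
        },
        {
            "role": "user",
            "content": [
                {"type": "text", "text": f"Radiology report:\n{report_text}\n\nLabs:\n{lab_lines}"},
                {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{encoded}"}},
            ],
        },
    ]


# Placeholder bytes; a real call would read an exported PNG from disk.
image_bytes = b"placeholder image bytes"
messages = build_multimodal_messages(
    "Hypothetical report text.", image_bytes, {"lab_a": 1.0, "lab_b": 2.5}
)
payload = {"model": "gpt-4o", "messages": messages}
print(json.dumps(payload, indent=2))
```

With the official `openai` Python package, the same list can be passed as the `messages` argument to `client.chat.completions.create`.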

Ensuring Clinical Relevance with RAG and Fine-Tuning

A general-purpose GPT model, trained on the vast expanse of the internet, lacks the specialized, up-to-the-minute knowledge required for clinical medicine. To make these models safe and effective for healthcare, developers employ two key techniques: Fine-Tuning and Retrieval-Augmented Generation (RAG).

- Fine-Tuning: This process involves further training a base model on a curated, high-quality dataset of medical literature, clinical notes, and treatment guidelines. This adjusts the model's parameters, making it more fluent in medical terminology and better aligned with clinical reasoning patterns. Such custom models are essential for specialized applications.

- Retrieval-Augmented Generation (RAG): RAG is a dynamic approach in which the model, before answering a prompt, first retrieves relevant information from a trusted, up-to-date knowledge base. Here, the knowledge base would contain the latest NCCN guidelines, recent clinical trial publications, and specific hospital protocols. This grounds the model's output in verifiable sources, substantially reducing (though not eliminating) the risk of "hallucination" and keeping its recommendations tied to the current standard of care. RAG remains an active area of research.
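For the fine-tuning route, training data is typically prepared as chat-formatted JSON Lines, one example per line, each holding a `messages` list of system, user, and assistant turns; this is the format OpenAI documents for fine-tuning its chat models. The sketch below assumes de-identified, clinician-reviewed note/summary pairs; the example text, `SYSTEM_PROMPT`, and the helper `to_finetune_jsonl` are invented for illustration.

```python
import json
from typing import Iterable

# Hypothetical system prompt shared by every training example.
SYSTEM_PROMPT = "You convert clinician notes into structured summaries."


def to_finetune_jsonl(pairs: Iterable[tuple[str, str]], system_prompt: str) -> str:
    """Serialize (note, target summary) pairs as chat-format JSON Lines."""
    lines = []
    for note, summary in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": note},
                {"role": "assistant", "content": summary},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


# Invented, de-identified examples for illustration only.
pairs = [
    ("Patient reports fatigue for two weeks. No fever.",
     "Chief complaint: fatigue (2 weeks). Pertinent negative: no fever."),
    ("Follow-up visit. Tolerating medication well.",
     "Visit type: follow-up. Medication tolerance: good."),
]
jsonl = to_finetune_jsonl(pairs, SYSTEM_PROMPT)
print(jsonl)
```

The resulting string would be written to a `.jsonl` file and uploaded; the quality of those target summaries, not the serialization, is what determines whether fine-tuning helps.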
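A toy version of the RAG retrieval step is sketched below. It scores documents by term-frequency cosine similarity, a deliberately simple stand-in for the embedding models and vector databases production systems typically use, and then builds a prompt that restricts the model to the retrieved sources and asks it to cite them. The knowledge-base snippets and their IDs are invented placeholders, not real guideline or protocol text.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus.items(),
        key=lambda item: cosine(q, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return ranked[:k]


def build_grounded_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Constrain the model to the retrieved passages and require citations."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their IDs in brackets. "
        "If the sources do not answer the question, say so.\n\n"
        f"SOURCES:\n{context}\n\nQUESTION: {question}"
    )


# Invented placeholder snippets standing in for a curated knowledge base.
KNOWLEDGE_BASE = {
    "protocol-A": "Baseline blood counts are reviewed before each chemotherapy cycle.",
    "protocol-B": "Imaging requests for staging are routed through the radiology scheduling desk.",
    "protocol-C": "Pharmacy verifies allergies before dispensing any infusion.",
}

question = "What should be reviewed before a chemotherapy cycle?"
top = retrieve(question, KNOWLEDGE_BASE, k=1)
prompt = build_grounded_prompt(question, top)
print(prompt)
```

Because each passage carries an ID, the model's citations can be checked mechanically against what was actually retrieved, which is what makes the output verifiable.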

Section 3: Navigating the Implementation Maze: Privacy, Bias, and Regulation

Deploying powerful AI in healthcare is not just a technical challenge; it is an ethical and regulatory one. The potential benefits are immense, but so are the risks. Building trust with both clinicians and patients requires a proactive and transparent approach to these complex issues.

The Imperative of Data Privacy and HIPAA Compliance

Patient data is among the most sensitive information that exists, and in the United States it is protected by the Health Insurance Portability and Accountability Act (HIPAA). Any organization developing or deploying a GPT-based healthcare tool must ensure its entire data pipeline is HIPAA compliant. This means using cloud infrastructure and APIs covered by a Business Associate Agreement (BAA). Compliance also involves robust data encryption, strict access controls, and comprehensive audit trails. De-identifying data for model training and analysis is a critical step, but it must be done with extreme care to prevent re-identification. Evolving AI regulation is likely to place even greater emphasis on these privacy-preserving techniques.
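Rule-based redaction is one ingredient of de-identification. The sketch below replaces a few identifier patterns with tags and counts each replacement so the result can feed an audit trail. It is illustrative only: the `MRN` format is a hypothetical local convention, and regular expressions alone miss names, addresses, and other free-text identifiers, so this does not by itself satisfy HIPAA's Safe Harbor method (which enumerates 18 identifier types) or its Expert Determination method.

```python
import re

# Illustrative patterns only; the MRN format is a hypothetical local convention.
PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
    ("MRN", re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE)),
]


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matched identifiers with tags; return text and per-type counts."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n  # record how many of each type were removed
    return text, counts


note = "Seen 03/14/2024, MRN: 00482913. Call 555-201-3344 or jdoe@example.org."
clean, counts = redact(note)
print(clean)
print(counts)
```

Logging the counts, never the removed values, lets reviewers audit how much was redacted without the audit log itself becoming a store of protected health information.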

Addressing Algorithmic Bias and Ensuring Health Equity

AI models are trained on data, and if that data reflects existing biases in society and healthcare, the model will learn and potentially amplify them. For example, if clinical trial data historically underrepresents certain demographic groups, an AI trained on that data might generate less effective treatment recommendations for patients from those groups. Acknowledging and mitigating this risk is central to responsible deployment. Best practices include carefully auditing training datasets for demographic balance, implementing fairness-aware machine learning algorithms, and continuously monitoring the model's performance across different patient populations after deployment. Achieving health equity requires a conscious and sustained effort to build fairness into the very architecture of these AI systems.
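Post-deployment monitoring can start as simply as computing the same metric separately for each patient group and flagging gaps. The sketch below uses sensitivity (the share of true positives the model catches) as the metric; the records are synthetic, the group labels are placeholders, and the 0.05 tolerance is a hypothetical policy choice rather than a recommended threshold. Real audits would track several metrics and account for small-sample noise.

```python
from collections import defaultdict
from typing import Iterable


def sensitivity_by_group(records: Iterable[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, actually_positive, flagged_by_model) tuples."""
    true_pos: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:  # sensitivity only looks at true cases
            positives[group] += 1
            if predicted:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_disparities(rates: dict[str, float], max_gap: float = 0.05) -> list[str]:
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)


# Synthetic outcomes for two placeholder groups.
records = (
    [("group_a", True, True)] * 4
    + [("group_a", False, False)]
    + [("group_b", True, True)] * 3
    + [("group_b", True, False)]
    + [("group_b", False, True)]
)
rates = sensitivity_by_group(records)
print(rates)
print(flag_disparities(rates))
```

A flag here is a prompt for investigation, not a verdict: the gap may come from the model, from the data pipeline, or from differences in how cases were labeled.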

The Human-in-the-Loop: A Non-Negotiable Best Practice

Perhaps the most critical principle in medical AI is maintaining human oversight. These tools should be framed as assistants or copilots, designed to augment, not replace, the expertise of a qualified clinician. The final medical decision must always rest with a human professional who can apply the context, empathy, and nuanced judgment that an algorithm cannot. The AI's role is to handle the cognitive heavy lifting of data synthesis and guideline recall, freeing the clinician to focus on the patient. This collaborative model protects patient safety and leverages the best of both human and artificial intelligence. It is the only responsible path for deploying GPT in such a critical field.

Section 4: The Broader Horizon and Recommendations

While the application in cancer care is a powerful leading example, the potential for GPT technology spans the entire healthcare ecosystem. From simplifying administrative tasks to accelerating research, these models are set to become an indispensable part of the modern medical toolkit.

Beyond Oncology: A Wave of Healthcare Applications

The same core technology used for cancer planning can be adapted for numerous other use cases, a trend visible across the broader GPT ecosystem.

- Administrative Automation: GPT can draft pre-authorization requests for insurance companies, summarize patient-doctor conversations into clinical notes, and manage patient scheduling, significantly reducing administrative overhead.

- Clinical Trial Matching: Models can scan a patient's entire medical record and match it against eligible clinical trials in minutes, a task that can otherwise take hours of manual work.

- Patient Education: AI can generate personalized, easy-to-understand summaries of complex medical conditions and treatment plans, improving patient engagement and health literacy.

- Medical Coding and Billing: AI can analyze clinical notes to suggest accurate billing codes, improving revenue cycle management and reducing compliance risks.
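One way to keep trial matching auditable is to split it in two: the model extracts structured facts from the record (as in Section 1), and a deterministic check compares them against eligibility criteria encoded as rules. The sketch below assumes that split. The `Patient` and `Trial` fields, the trial IDs, and the condition and biomarker names are hypothetical placeholders, and real eligibility criteria are usually far richer than age ranges and set membership.

```python
from dataclasses import dataclass, field


@dataclass
class Patient:
    age: int
    conditions: set[str]
    biomarkers: set[str]


@dataclass
class Trial:
    trial_id: str
    min_age: int
    max_age: int
    required_conditions: set[str] = field(default_factory=set)
    required_biomarkers: set[str] = field(default_factory=set)
    excluded_conditions: set[str] = field(default_factory=set)


def screen(patient: Patient, trial: Trial) -> list[str]:
    """Return the reasons a patient fails a trial's criteria; empty means eligible."""
    reasons = []
    if not trial.min_age <= patient.age <= trial.max_age:
        reasons.append(f"age {patient.age} outside {trial.min_age}-{trial.max_age}")
    missing = trial.required_conditions - patient.conditions
    if missing:
        reasons.append(f"missing condition(s): {sorted(missing)}")
    missing_markers = trial.required_biomarkers - patient.biomarkers
    if missing_markers:
        reasons.append(f"missing biomarker(s): {sorted(missing_markers)}")
    excluded = trial.excluded_conditions & patient.conditions
    if excluded:
        reasons.append(f"excluded condition(s): {sorted(excluded)}")
    return reasons


def match_trials(patient: Patient, trials: list[Trial]) -> dict[str, list[str]]:
    return {t.trial_id: screen(patient, t) for t in trials}


# Placeholder patient facts, as if extracted from the record by the model.
patient = Patient(age=61, conditions={"condition_x"}, biomarkers={"marker_1"})
trials = [
    Trial("T-1", 18, 75, {"condition_x"}, {"marker_1"}),
    Trial("T-2", 18, 60, {"condition_x"}, {"marker_1"}),
    Trial("T-3", 18, 75, excluded_conditions={"condition_x"}),
]
results = match_trials(patient, trials)
eligible = [tid for tid, reasons in results.items() if not reasons]
print(eligible)
print(results)
```

Returning reasons rather than a bare yes/no lets a research coordinator see why a trial was ruled out and override the result when the extracted facts are wrong.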

Recommendations for Healthcare Innovators

For healthcare organizations looking to harness this technology, a strategic approach is essential.

- Start with a Focused Pilot: Begin with a well-defined problem, such as automating a specific administrative workflow, to prove the concept and build institutional buy-in before tackling more complex clinical applications.

- Prioritize Data Governance: Establish a robust framework for managing data privacy, security, and quality. A successful AI implementation is built on a foundation of clean, well-structured, and secure data.

- Invest in Clinician Training: Onboarding and training are critical. Clinicians need to understand how the AI works, its limitations, and how to use it effectively and safely as part of their workflow.

- Embrace an Iterative Approach: The field of AI is evolving rapidly. Adopt an agile methodology that allows for continuous monitoring, evaluation, and improvement of AI tools based on real-world performance and user feedback.

Conclusion: Charting the Future of AI-Assisted Medicine

The integration of advanced GPT models into clinical workflows marks a pivotal moment in the evolution of healthcare. As emerging applications in cancer care show, this technology is no longer theoretical; it is a practical tool with the potential to profoundly improve patient outcomes and clinician well-being. By automating the laborious task of data synthesis, AI copilots can reduce administrative burdens, minimize errors, and accelerate the delivery of personalized, evidence-based care. However, the path forward requires careful navigation of significant ethical, privacy, and regulatory challenges. The ultimate success of GPT in healthcare will depend on a steadfast commitment to a human-in-the-loop model, in which technology empowers, rather than replaces, the invaluable expertise and compassion of medical professionals. The future of medicine will be a collaborative one, where human intelligence is augmented by artificial intelligence to achieve a new standard of care.