From Prompt to Product: A Developer’s Guide to Building Applications with GPT Assistants

The Dawn of a New Development Paradigm

The world of software development is undergoing a major shift, one that is shortening the timeline from concept to deployment. What once took months of meticulous coding, architecture design, and iterative debugging can now often be prototyped, and sometimes shipped, in a matter of days. This acceleration is not due to a new programming language or framework, but to a new paradigm altogether: building with AI. Specifically, tools like OpenAI’s GPT Assistants let developers move from writing explicit logic to orchestrating intelligent agents. This “prompt-to-product” workflow goes beyond simple API calls for text generation; it’s about creating stateful, tool-using applications that can reason, plan, and execute complex tasks. The latest GPT-4 developments are not just about model capabilities, but about the ecosystem being built around them. This article is a technical guide for developers who want to use these tools to turn conversational prompts into functional, real-world products.

The Anatomy of a GPT Assistant: More Than Just a Chatbot

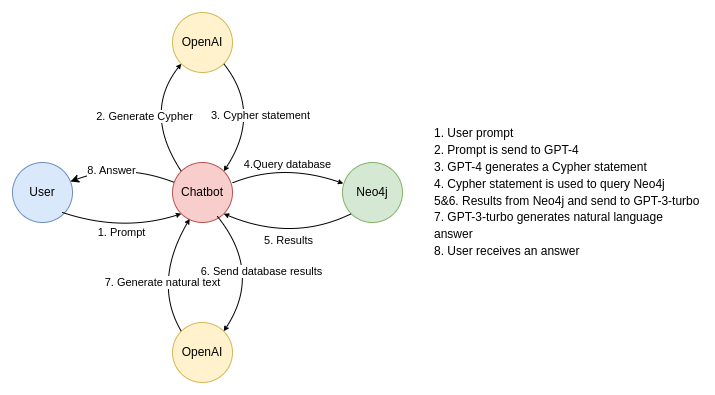

To appreciate the “prompt-to-product” revolution, it’s crucial to understand what sets GPT Assistants apart from traditional interactions with large language models. A standard API call to a model like GPT-4 is stateless; each request is an isolated event with no memory of past interactions. This forces developers to build and manage their own state-management systems. The Assistants API addresses this challenge directly with a more robust architecture.

Beyond Stateless API Calls: The Power of Threads

The core innovation of the Assistants API is the concept of a “Thread.” A Thread is a persistent conversation session that automatically manages message history. When a user interacts with an Assistant within a Thread, the entire context of the conversation is maintained without the developer needing to manually collect, truncate, and resubmit the history with every API call. This seemingly simple feature offloads a significant engineering burden, allowing developers to focus on application logic rather than conversational plumbing. Persistent context is essential for building agents that can handle multi-step tasks, remember user preferences, and engage in coherent, long-running dialogues.
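
To make that burden concrete, here is a minimal sketch of the bookkeeping a stateless API pushes onto the application. It is not how Threads work internally; the `approx_tokens` heuristic (about four characters per token), the `build_request` helper, and the token budget are all assumptions for illustration.

```python
from typing import Dict, List

Message = Dict[str, str]


def approx_tokens(text: str) -> int:
    """Very rough token estimate: about one token per four characters (an assumption)."""
    return max(1, len(text) // 4)


def build_request(history: List[Message], new_user_text: str, budget: int) -> List[Message]:
    """Return the messages to send with a stateless call.

    Keeps the system prompt (history[0]), then as many of the most recent
    turns as fit in the token budget, then the new user turn.
    """
    system, turns = history[0], history[1:]
    new_turn = {"role": "user", "content": new_user_text}
    remaining = budget - approx_tokens(system["content"]) - approx_tokens(new_user_text)

    kept: List[Message] = []
    for msg in reversed(turns):  # walk from newest to oldest
        cost = approx_tokens(msg["content"])
        if cost > remaining:
            break  # everything older than this is dropped
        kept.append(msg)
        remaining -= cost

    kept.reverse()  # restore chronological order
    return [system, *kept, new_turn]
```

Every stateless request would have to pass through a function like this, and the application would also have to store `history` somewhere durable. A Thread moves both responsibilities to the platform.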

Intelligent Tool Usage: Code Interpreter and Function Calling

An Assistant’s true power is unlocked when it can interact with the outside world. The API provides two primary mechanisms for this: Code Interpreter and Function Calling. Code Interpreter gives the Assistant a sandboxed Python execution environment, allowing it to run code, analyze data, create visualizations, and process files. More importantly for custom applications, Function Calling allows developers to define their own tools. You can describe a custom function (e.g., `fetch_customer_data(customer_id)` or `send_email(to, subject, body)`) to the Assistant. When the model determines that one of these tools is needed to fulfill a user’s request, it doesn’t execute the code itself. Instead, it outputs a structured JSON object containing the function name and arguments. Your application code then intercepts this JSON, executes the actual function, and returns the result to the Assistant so it can continue. This mechanism effectively gives the AI “hands,” enabling a wide range of integrations and real-world actions.
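
The intercept-and-execute step can be sketched as a small dispatcher. This is an illustrative sketch, not the SDK’s own code: the payload shape is simplified to a `name` plus an `arguments` field holding a JSON-encoded string, and `fetch_customer_data` returns canned data rather than querying a real system.

```python
import json
from typing import Any, Callable, Dict


def fetch_customer_data(customer_id: str) -> Dict[str, Any]:
    """Stand-in for a real lookup; returns canned data for illustration."""
    return {"customer_id": customer_id, "plan": "pro"}


# Registry mapping the tool names the model may emit to local Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {
    "fetch_customer_data": fetch_customer_data,
}


def handle_tool_call(tool_call_json: str) -> str:
    """Run the function the model asked for and return its result as JSON text."""
    call = json.loads(tool_call_json)
    func = TOOLS[call["name"]]                 # KeyError if the model names an unknown tool
    arguments = json.loads(call["arguments"])  # arguments are assumed to be a JSON string
    result = func(**arguments)
    return json.dumps(result)                  # this string is what goes back to the Assistant
```

The model never touches `fetch_customer_data` directly; it only produces the request, and the application decides whether and how to run it.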

Knowledge on Demand: Retrieval Augmented Generation (RAG)

One of the limitations of base GPT models is their knowledge cutoff and lack of access to private or proprietary information. The Assistants API tackles this with built-in Retrieval Augmented Generation (RAG). Developers can upload files (PDFs, text documents, etc.) and associate them with an Assistant. When a user asks a question, the Assistant can automatically search these documents for relevant information and use it to formulate a more accurate and context-aware response. This feature is central to building custom, domain-specific assistants, as it allows for the creation of domain experts without the complexity and cost of traditional fine-tuning.
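
The retrieve-then-generate pattern itself is simple to illustrate. The sketch below is a toy: it ranks text chunks by keyword overlap, whereas a hosted retrieval tool would typically use a more capable search. The `retrieve` and `build_prompt` helpers and the prompt wording are assumptions for illustration.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: List[str], top_k: int = 1) -> List[str]:
    """Return up to top_k chunks that share the most words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(chunk)), chunk) for chunk in chunks]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in ranked[:top_k] if score > 0]


def build_prompt(question: str, chunks: List[str]) -> str:
    """Place the retrieved context ahead of the question for the model to use."""
    context = "\n".join(retrieve(question, chunks, top_k=2))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The key idea carries over regardless of the search method: only the relevant excerpts are placed in front of the model, so it can answer from documents it was never trained on.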

The “Prompt-to-Product” Workflow: A Practical Blueprint

With an understanding of the components, we can now map out the practical workflow for turning an idea into a product. This process is iterative and highly conversational, often starting within a simple chat interface and evolving into a robust, automated system. The focus shifts from low-level implementation details to high-level instruction and tool design.

Step 1: Ideation and Conversational Prototyping

Every application begins with an idea. Instead of immediately jumping to a code editor, the modern workflow begins in a chat playground. You can start a conversation with a model like GPT-4, describing your goal. For example: “I want to build an app that finds the top 3 AI news articles each morning, summarizes them, and sends me a digest.” Through this dialogue, you can refine the logic, define the required steps, and even have the AI generate the initial boilerplate code for the functions it would need.

Step 2: Defining the Assistant’s Core Identity and Instructions

Once the logic is clear, you formalize it in the Assistant’s `instructions`. This is the system prompt that governs its personality, goals, constraints, and how it should use its tools. It is the most critical step in the process: a well-crafted instruction set is the difference between a confused, unreliable bot and a precise, effective agent. For our news app, the instructions might include: “You are a tech news analyst. Your goal is to find the most relevant AI news daily. You must use the `fetch_news` tool to get articles, then the `summarize_text` tool for each one. Prioritize news about GPT models and GPT research. Format the final output as a clean, bulleted list.”

Step 3: Equipping the Assistant with Tools (Function Calling)

Here, you translate the required actions into concrete functions in your application’s codebase and describe them to the Assistant using a JSON schema. For the news curator, you would define functions like:

- `fetch_news(query: str, count: int)`: Calls a news API (like NewsAPI or a custom RSS scraper).

- `summarize_text(article_url: str)`: Scrapes the article content and uses a separate model call to generate a concise summary.

- `send_digest(content: str, recipient: str)`: Uses an email service like SendGrid or AWS SES to send the final report.
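
As a sketch, the three functions above could be described to the Assistant with definitions like the following. The outer `{"type": "function", "function": {...}}` wrapper follows the tool format OpenAI documents for function calling at the time of writing; the `make_tool` helper and the description strings are assumptions for illustration.

```python
import json
from typing import Any, Dict, List


def make_tool(name: str, description: str,
              properties: Dict[str, Any], required: List[str]) -> Dict[str, Any]:
    """Build one function-tool definition whose parameters are a JSON Schema object."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


TOOLS = [
    make_tool(
        "fetch_news",
        "Fetch recent news articles matching a search query.",
        {
            "query": {"type": "string", "description": "Search terms, e.g. 'AI breakthroughs'."},
            "count": {"type": "integer", "description": "Maximum number of articles to return."},
        },
        ["query", "count"],
    ),
    make_tool(
        "summarize_text",
        "Fetch an article by URL and return a concise summary.",
        {"article_url": {"type": "string", "description": "URL of the article to summarize."}},
        ["article_url"],
    ),
    make_tool(
        "send_digest",
        "Email the finished digest to a recipient.",
        {
            "content": {"type": "string", "description": "The formatted digest text."},
            "recipient": {"type": "string", "description": "Recipient email address."},
        },
        ["content", "recipient"],
    ),
]

# The definitions are plain data, so they can be inspected or stored as JSON.
print(json.dumps(TOOLS[0], indent=2))
```

The descriptions matter as much as the types: they are the only documentation the model sees when deciding which tool to call.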

The Assistant now has the capabilities it needs to execute the workflow.

Case Study: Building an Automated News Curator

Let’s tie it all together. To build our news app, a developer would create an Assistant with the instructions from Step 2 and the functions from Step 3. The application logic would be a simple script, perhaps running on a serverless function or a virtual machine. This script is triggered by a cron job every morning at 8 AM.

- The cron job initiates a “Run” on a Thread with a simple prompt: “Generate today’s AI news digest.”

- The Assistant, following its instructions, decides to call the `fetch_news` function with a query like “AI breakthroughs” or “GPT-5 News”.

- Your application code executes the real API call to the news service and returns the list of articles to the Assistant.

- The Assistant processes the list and decides to call the `summarize_text` function for the top 3 URLs.

- Your code executes these calls, returning the summaries.

- Finally, the Assistant formats the summaries into a digest and calls the `send_digest` function with the formatted content.

- Your code sends the email.

This entire application, which involves external API calls, data processing, and notifications, can be built with a surprisingly small amount of code, primarily focused on the tool implementations and the orchestration script.
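
The shape of that orchestration script can be sketched without any network access. In the sketch below, a scripted generator stands in for the Assistant and requests tools in the order the steps above describe; a real run would instead poll the API for requested tool calls and submit their outputs. The tool bodies, `example.com` URLs, and `run_digest` are placeholders for illustration.

```python
from typing import Any, Callable, Dict, Generator, List

SENT: List[Dict[str, str]] = []  # records "emails" so the sketch has no side effects


def fetch_news(query: str, count: int) -> List[str]:
    """Placeholder: returns fake article URLs instead of calling a news API."""
    return [f"https://example.com/{query.replace(' ', '-')}/{i}" for i in range(1, count + 1)]


def summarize_text(article_url: str) -> str:
    """Placeholder: returns a canned summary instead of scraping and summarizing."""
    return f"Summary of {article_url}"


def send_digest(content: str, recipient: str) -> str:
    """Placeholder: records the digest instead of sending an email."""
    SENT.append({"content": content, "recipient": recipient})
    return "sent"


TOOLS: Dict[str, Callable[..., Any]] = {
    "fetch_news": fetch_news,
    "summarize_text": summarize_text,
    "send_digest": send_digest,
}


def scripted_assistant(recipient: str) -> Generator[Dict[str, Any], Any, None]:
    """Stand-in for the model: yields tool calls and receives each result back."""
    urls = yield {"name": "fetch_news", "arguments": {"query": "AI breakthroughs", "count": 3}}
    summaries = []
    for url in urls:
        summary = yield {"name": "summarize_text", "arguments": {"article_url": url}}
        summaries.append(summary)
    digest = "\n".join(f"- {s}" for s in summaries)
    yield {"name": "send_digest", "arguments": {"content": digest, "recipient": recipient}}


def run_digest(recipient: str) -> int:
    """Drive the loop: execute each requested tool and feed the result back.

    Returns the number of tool calls executed.
    """
    assistant = scripted_assistant(recipient)
    call = next(assistant)
    steps = 0
    while True:
        result = TOOLS[call["name"]](**call["arguments"])
        steps += 1
        try:
            call = assistant.send(result)
        except StopIteration:
            return steps  # the assistant has no further requests


if __name__ == "__main__":
    print(run_digest("me@example.com"), "tool calls executed")
```

The application code is only the loop and the tool implementations; deciding which tool to call next is left to the Assistant.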

Navigating the Technical Landscape: Best Practices and Common Pitfalls

While the “prompt-to-product” workflow is powerful, it introduces a new set of technical challenges and considerations. Success requires a shift in mindset from traditional software engineering to what might be called “AI orchestration.” This is a central theme in ongoing discussions about deploying GPT-based systems and the evolution of MLOps.

Mastering the System Prompt: The Art of Instruction

The Assistant’s instructions are its source code. Vague or ambiguous instructions lead to unpredictable behavior. Best practices include being explicit, providing examples of desired output formats, and clearly defining constraints (e.g., “Never use information outside of the provided documents,” “Always call the `verify_user` function before accessing sensitive data”). Continuous refinement of this prompt, a process known as prompt engineering, is a core activity in developing with assistants.

Designing Robust Functions for AI Consumption

When defining functions for an Assistant, clarity is paramount.

- Atomic Operations: Design functions that do one thing well. Instead of a single monolithic `process_order` function, break it down into `check_inventory`, `process_payment`, and `schedule_shipping`.

- Descriptive Naming and Docstrings: The function name and parameter descriptions are all the model has to understand what the tool does. `get_stock_price(ticker_symbol: str)` is far better than `fetch_data(s: str)`.

- Error Handling: Your functions must return clear, descriptive error messages. If an API call fails, returning “Error: API key invalid” gives the Assistant a chance to either retry or inform the user, whereas a generic “Failed” message will leave it stuck.
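
One way to apply the error-handling advice is to wrap every tool so that exceptions become descriptive messages instead of crashing the run. This is a minimal sketch: the `safe_tool` decorator, the `ok`/`error` result shape, and the hard-coded price table are assumptions for illustration.

```python
import functools
import json
from typing import Any, Callable


def safe_tool(func: Callable[..., Any]) -> Callable[..., str]:
    """Wrap a tool so it always returns JSON text, even when it raises."""
    @functools.wraps(func)
    def wrapper(**kwargs: Any) -> str:
        try:
            return json.dumps({"ok": True, "result": func(**kwargs)})
        except Exception as exc:
            # Include the exception type and message so the model can react to it.
            return json.dumps({"ok": False, "error": f"{type(exc).__name__}: {exc}"})
    return wrapper


@safe_tool
def get_stock_price(ticker_symbol: str) -> float:
    """Look up a price in a hard-coded table (placeholder for a real API call)."""
    prices = {"ACME": 12.5}
    if ticker_symbol not in prices:
        raise ValueError(f"unknown ticker symbol '{ticker_symbol}'")
    return prices[ticker_symbol]
```

With this wrapper, `get_stock_price(ticker_symbol="XYZ")` returns an error payload naming the bad symbol, which gives the Assistant enough information to correct its arguments or explain the problem to the user.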

Managing Cost, Latency, and Throughput

The convenience of the Assistants API comes with a cost. A single user prompt can trigger multiple model calls (the main reasoning step, plus a further call each time a tool result is fed back), as well as Code Interpreter usage and retrieval searches. This can lead to higher costs and increased latency compared to a single, direct API call, so monitoring token usage is critical. For high-throughput applications, it may be necessary to route specific sub-tasks to smaller or fine-tuned models, or, where you host the model yourself, to apply optimization techniques such as quantization or distillation. Understanding the latency and throughput characteristics of each step is vital for building responsive, production-grade systems.
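
A simple accounting sketch shows why multi-step runs add up. The `StepUsage` record and `run_cost` function are hypothetical, and the per-1,000-token prices in the usage example are placeholders rather than real rates; substitute the token counts your runs report and your provider’s current pricing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepUsage:
    """Token counts for one model call within a run."""
    label: str
    prompt_tokens: int
    completion_tokens: int


def run_cost(steps: List[StepUsage],
             prompt_price_per_1k: float,
             completion_price_per_1k: float) -> float:
    """Total cost of a run: every step is billed for its own prompt and completion."""
    prompt_total = sum(s.prompt_tokens for s in steps)
    completion_total = sum(s.completion_tokens for s in steps)
    return (prompt_total / 1000 * prompt_price_per_1k
            + completion_total / 1000 * completion_price_per_1k)


# Illustrative numbers only: the prompt grows at each step because the
# accumulated conversation and tool results are sent again.
steps = [
    StepUsage("decide to call fetch_news", 1000, 200),
    StepUsage("decide to call summarize_text", 1500, 100),
    StepUsage("format digest", 2000, 700),
]
total = run_cost(steps, prompt_price_per_1k=0.01, completion_price_per_1k=0.03)
```

Logging a record like `StepUsage` per model call makes it easy to see which step dominates the bill before deciding where a smaller model would help.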

Addressing Safety and Security Concerns

Giving an AI the ability to execute code or call external APIs introduces significant security risks, and these are a major focus of current AI ethics and regulation debates. Never trust user input directly. The arguments the model passes to your functions are derived from the user prompt, so validate and sanitize them to prevent injection attacks. Implement strict permissioning and sandboxing. The Assistant should only have access to the specific tools and data it absolutely needs. For example, an Assistant that writes marketing copy should not have access to a function that can delete user accounts. This principle of least privilege is more important than ever in the age of AI agents.
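
Least privilege can be enforced in the application layer with a per-assistant allowlist that is checked before any tool runs. The sketch below is illustrative; the assistant names, the `PERMISSIONS` table, and both tool bodies are assumptions.

```python
from typing import Any, Callable, Dict, Set


def write_copy(topic: str) -> str:
    """Harmless tool: returns a placeholder line of marketing copy."""
    return f"Discover our latest {topic} today."


def delete_user_account(user_id: str) -> str:
    """Destructive tool: a stand-in that only reports what it would do."""
    return f"deleted {user_id}"


ALL_TOOLS: Dict[str, Callable[..., Any]] = {
    "write_copy": write_copy,
    "delete_user_account": delete_user_account,
}

# Hypothetical allowlists: each assistant may call only the tools named here.
PERMISSIONS: Dict[str, Set[str]] = {
    "marketing_writer": {"write_copy"},
    "account_admin": {"write_copy", "delete_user_account"},
}


def call_tool(assistant_name: str, tool_name: str, arguments: Dict[str, Any]) -> Any:
    """Execute a tool only if the requesting assistant is allowed to use it."""
    allowed = PERMISSIONS.get(assistant_name, set())  # unknown assistants get nothing
    if tool_name not in allowed:
        raise PermissionError(f"{assistant_name} may not call {tool_name}")
    return ALL_TOOLS[tool_name](**arguments)
```

Because the check lives in your code rather than in the prompt, a manipulated model response cannot talk its way into a tool it was never granted.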

The Broader Impact: Reshaping the Developer Ecosystem

The move towards prompt-driven development is not an incremental change; it’s a fundamental reshaping of the software landscape and of the ecosystem around GPT models. It democratizes development, allowing those with great ideas but less traditional coding experience to build powerful applications. It also pushes the boundaries of what’s possible, paving the way for more autonomous and intelligent systems.

The Rise of Autonomous GPT Agents

The Assistants API is a significant step towards fully autonomous agents. As models become more capable of reasoning and planning, we will see agents that can take a high-level goal (e.g., “Plan and book a vacation to Italy for next June”) and independently execute the entire multi-step process of researching flights, booking hotels, and creating an itinerary. This evolution is the central topic in current discussion of GPT agents and represents the next frontier in AI applications, with implications for everything from personal productivity to enterprise automation.

Implications for GPT Deployment and MLOps

The development lifecycle is changing. The focus is shifting from training and fine-tuning massive models to prompt engineering, tool integration, and agent monitoring. The new MLOps stack will include tools for versioning prompts, testing tool integrations, and monitoring agent behavior for unexpected actions or “hallucinations.” This shift is reflected in the growing number of platforms and tools designed specifically for orchestrating and managing AI agents.

The Road Ahead: GPT-5 and the Future of AI Development

Looking ahead, the trajectory is clear. Future models, hinted at in speculation about GPT-5, will likely possess even stronger reasoning, planning, and self-correction capabilities. As multimodal capabilities expand, these agents will be able to process and generate not just text, but also images, audio, and video, as early GPT vision features already suggest. An assistant could soon be able to “watch” a video tutorial and then write the code to replicate its outcome. This continuous improvement in core model architecture and capabilities will make the “prompt-to-product” workflow considerably more powerful, further blurring the line between human instruction and machine execution.

Conclusion: The Orchestrator’s Era

We are entering a new era of software development where the developer’s primary role evolves from a writer of code to an orchestrator of intelligence. The “prompt-to-product” workflow, powered by technologies like the GPT Assistants API, represents a profound leap in productivity and capability. By mastering the art of instruction, designing robust tools, and understanding the associated risks, developers can now build and deploy sophisticated, AI-powered applications at a speed previously unimaginable. The barrier between a creative idea and a functional product has never been lower. For those willing to embrace this new paradigm, the opportunity is not just to build faster, but to build smarter, creating applications that are truly dynamic, responsive, and intelligent.