The Next Paradigm Shift in AI: Securing LLM Inference with Privacy-Preserving Computation

Introduction: The AI Privacy Paradox

The rapid evolution of Generative Pre-trained Transformer (GPT) models has unlocked unprecedented capabilities across nearly every industry. From sophisticated GPT-4 applications to the widespread use of ChatGPT, these Large Language Models (LLMs) are redefining content creation, data analysis, and human-computer interaction. However, this power comes with a critical trade-off: data privacy. To leverage the intelligence of a powerful LLM, users and organizations must often send sensitive, proprietary, or personal data to third-party cloud servers. This creates a significant privacy paradox that hinders adoption in regulated fields such as healthcare, finance, and legal tech, where data confidentiality is non-negotiable. It is also a central issue in current debates about AI privacy and regulation.

For years, the industry has grappled with this challenge. Data anonymization is often insufficient, while privacy-enhancing technologies (PETs) such as Fully Homomorphic Encryption (FHE) have been too slow for practical, real-time LLM inference. A new approach, however, is emerging at the intersection of cryptography and artificial intelligence. It uses Multi-Party Computation (MPC) to make LLM inference private while keeping it fast enough for interactive use. If it delivers on that promise, it could:

- resolve the privacy paradox;
- open GPT models to the most sensitive use cases;
- become one of the defining trends in AI for the foreseeable future.

Section 1: Understanding the Breakthrough: MPC-Powered LLM Inference

At its core, the challenge is to perform complex computations on data without ever seeing the data itself. The latest advancements achieve this by applying a mature cryptographic technique, MPC, to LLM inference. This has significant implications for how inference systems are architected.

What is Multi-Party Computation (MPC)?

MPC is a family of cryptographic protocols that let multiple, mutually distrusting parties jointly compute a function over their private inputs without revealing those inputs to one another. Imagine several colleagues who want to calculate their average salary without telling anyone their individual income: MPC provides a mathematical framework to achieve exactly that.

In the context of LLMs, the “parties” are a distributed network of compute nodes, and the “function” is the inference process of a model such as GPT-3.5 or GPT-4. The user’s sensitive query is the private input. The nodes process “secret-shared” fragments of the data and perform the necessary calculations collaboratively. No single node ever has access to the complete, unencrypted query.
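
To make the salary example concrete, here is a minimal Python sketch of additive secret sharing, one of the simplest building blocks used in MPC. It is an illustration under stated assumptions, not the protocol any particular system uses. The field modulus `PRIME`, the helpers `share` and `average_salary`, and the salary figures are all hypothetical, and the colleagues are simulated in a single process.

```python
import secrets

PRIME = 2**61 - 1  # hypothetical field modulus; all arithmetic is mod PRIME


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n_parties additive shares that sum to value mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def average_salary(salaries: list[int]) -> float:
    n = len(salaries)
    # Colleague i splits their salary and sends share j to colleague j.
    all_shares = [share(s, n) for s in salaries]
    # Colleague j sums the shares it received; each one looks uniformly random.
    partial_sums = [sum(row[j] for row in all_shares) % PRIME for j in range(n)]
    # Combining the published partial sums reveals only the total.
    total = sum(partial_sums) % PRIME
    return total / n


print(average_salary([50_000, 60_000, 79_000]))  # 63000.0
```

Each colleague receives only one share of every other salary, and a single share is a uniformly random field element. It therefore says nothing on its own; only the total, and hence the average, is revealed.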

How it Differs from Existing Privacy Solutions

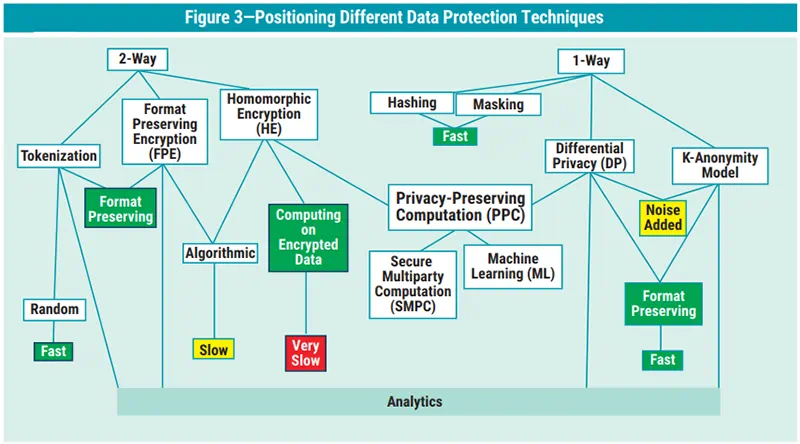

To appreciate the significance of this development, it’s crucial to compare it with other privacy-preserving methods. The most discussed alternative has been Homomorphic Encryption (HE), which allows computation on encrypted data. While powerful, HE has historically been plagued by immense computational overhead, making real-time inference for massive models like GPT-4 prohibitively slow.

The MPC-based approach changes this picture. Recent benchmarks and research papers indicate that it can be significantly faster than existing HE-based solutions for LLM inference, potentially by a factor of 3 to 8. That efficiency gain is what could make the technology viable for real-world applications. Instead of waiting minutes for a response, users could get close to real-time interactions, which is critical for the usability of chatbots and AI assistants. By easing latency and throughput concerns, it makes private AI not just possible, but practical.

Key Architectural Points

- Data Splitting: The user’s input (e.g., a confidential legal document) is split into multiple secret “shares,” none of which reveals anything about the input on its own.

- Distributed Computation: These shares are sent to a network of independent compute nodes. The LLM’s model weights can also be secret-shared across this network, so that no node holds the complete model.

- Blind Processing: The nodes perform the inference calculations (attention mechanisms, matrix multiplications) on their respective shares. They are effectively “blind”: no single node can reconstruct the original query. If the weights are also secret-shared, no single node can reconstruct the model’s full weights either.

- Result Reconstruction: The resulting output shares are sent back to the user, who is the only one capable of combining them to reveal the final, coherent answer from the LLM.

This distributed architecture fundamentally changes the security model. Users no longer need to place absolute trust in a single AI provider. Instead, trust is distributed across a network. Privacy is backed by cryptographic guarantees that hold as long as no more than a threshold number of nodes collude.
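
The splitting, blind-processing, and reconstruction steps listed above can be illustrated for a single linear layer. This minimal sketch makes two simplifying assumptions:

- The weight matrix `W` is public to all nodes. Secret-sharing the weights as well would require an interactive multiplication protocol.
- Inputs are already encoded as integers in a prime field. Real systems use fixed-point encodings.

All names and values are hypothetical.

```python
import secrets

PRIME = 2**61 - 1  # hypothetical field modulus


def share_vector(x: list[int], n_nodes: int) -> list[list[int]]:
    """Additively secret-share each element of x among n_nodes nodes."""
    shares = [[secrets.randbelow(PRIME) for _ in x] for _ in range(n_nodes - 1)]
    last = [(xi - sum(s[k] for s in shares)) % PRIME for k, xi in enumerate(x)]
    return shares + [last]


def matvec(W: list[list[int]], v: list[int]) -> list[int]:
    """Public-weight matrix-vector product, computed mod PRIME."""
    return [sum(w * vi for w, vi in zip(row, v)) % PRIME for row in W]


def reconstruct(shares: list[list[int]]) -> list[int]:
    """Add the shares element-wise to recover the plaintext vector."""
    return [sum(col) % PRIME for col in zip(*shares)]


W = [[1, 2, 0], [0, 3, 4]]  # hypothetical public weights of one linear layer
x = [5, 6, 7]               # hypothetical integer-encoded private input

input_shares = share_vector(x, n_nodes=3)             # data splitting (client side)
output_shares = [matvec(W, s) for s in input_shares]  # blind processing (each node alone)
print(reconstruct(output_shares))                     # result reconstruction: [17, 46]
```

Because a matrix-vector product with public weights is linear, each node can apply `W` to its own share without talking to the others. The output shares still sum to `W·x`. The harder protocol work lies in the non-linear steps, such as softmax.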

Section 2: A Deeper Dive into the Technical Mechanics

Implementing MPC for LLM inference is a complex engineering feat that involves novel approaches to cryptography, distributed systems, and AI model architecture. This work is an active area of research and is influencing the design of next-generation inference engines.

The Journey of a Private Query

Let’s trace the lifecycle of a query in this system to understand the mechanics:

- Tokenization and Secret Sharing: A user types a sensitive query. On the client side, the query is first converted into tokens, a standard step in any LLM interaction. These tokens are then cryptographically split into multiple secret shares using a technique such as Shamir’s Secret Sharing. In that scheme, any set of shares below a chosen threshold reveals nothing about the tokens.

- Distribution to Nodes: The secret shares are distributed across the MPC network. For the system to be secure, the number of colluding nodes must stay below a certain threshold. For example, a network of 10 nodes might be designed to remain secure as long as fewer than half of them (at most 4) collude.

- Secure Computation: The core innovation lies here. LLM inference is a series of mathematical operations: primarily matrix multiplications, plus non-linear functions such as GELU or ReLU activations, the softmax in attention, and layer normalization. Researchers have developed specialized MPC protocols to perform these operations securely on the secret-shared data. This requires significant optimization and remains an active area of work.

- Returning the Output Shares: After passing through all the layers of the neural network, the final output is still in secret-shared form. These output shares are sent back to the original user.

- Revealing the Answer: The user’s client combines the shares to reconstruct the final result, the LLM’s response. As long as the collusion threshold holds, the compute nodes learn nothing about the query or the response.
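
The sharing and threshold steps above can be sketched with Shamir’s Secret Sharing, the technique named in the first step. Each secret becomes the constant term of a random polynomial of degree t. Any t shares reveal nothing about it, while any t + 1 shares recover it by Lagrange interpolation.

This is a minimal, single-process illustration. The field modulus, the token IDs, and the choice of 10 nodes with a threshold of 4 are hypothetical. A real deployment would also need secure, authenticated channels between the client and the nodes.

```python
import secrets

PRIME = 2**61 - 1  # hypothetical prime field; must exceed any token ID


def shamir_share(secret: int, n: int, t: int) -> list[tuple[int, int]]:
    """Split secret into n points on a random degree-t polynomial with f(0) = secret."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(t)]

    def f(x: int) -> int:
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        return y

    # Node i receives the point (i, f(i)); x = 0 is never handed out.
    return [(i, f(i)) for i in range(1, n + 1)]


def shamir_reconstruct(points: list[tuple[int, int]]) -> int:
    """Recover f(0) from at least t + 1 points via Lagrange interpolation."""
    secret = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (-xm) % PRIME
                den = den * (xj - xm) % PRIME
        secret = (secret + yj * num * pow(den, -1, PRIME)) % PRIME
    return secret


token_ids = [15496, 995]    # hypothetical token IDs for a short query
n_nodes, threshold = 10, 4  # any 4 colluding nodes learn nothing; any 5 can reconstruct

shared = [shamir_share(tok, n_nodes, threshold) for tok in token_ids]
# In the real flow only the *output* shares return to the client;
# here the inputs are reconstructed just to check that the scheme round-trips.
recovered = [shamir_reconstruct(pts[:threshold + 1]) for pts in shared]
print(recovered == token_ids)  # True
```

`pow(den, -1, PRIME)` computes a modular inverse and requires Python 3.8 or later.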

Hardware and Network Considerations

This architecture has specific infrastructure requirements. Its cryptographic protocols add computation on top of standard inference. The larger burden, however, is usually communication: the nodes must exchange messages constantly, so they need low-latency, high-bandwidth networking between them, and network performance is often the main bottleneck. The nodes themselves need to be powerful enough to handle both the cryptographic overhead and the standard LLM computations.

For deployment, this suggests a shift towards specialized, geographically co-located clusters for private AI inference. It also opens new possibilities at the edge, where a set of independently operated edge devices could form an MPC network to process local data privately.
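
Why is communication the bottleneck? The sketch below uses Beaver-triple multiplication, a standard MPC technique for multiplying two secret-shared values. It is not necessarily the technique used by the systems described here. The sketch assumes:

- two simulated parties;
- additive sharing over a hypothetical prime field;
- a hypothetical trusted dealer that supplies the random triples.

The `log` counter is an illustrative addition that tallies field elements exchanged.

```python
import secrets

PRIME = 2**61 - 1  # hypothetical prime field
N_PARTIES = 2


def share(v: int) -> list[int]:
    """Additively secret-share v between N_PARTIES parties."""
    s = [secrets.randbelow(PRIME) for _ in range(N_PARTIES - 1)]
    return s + [(v - sum(s)) % PRIME]


def open_value(shares: list[int], log: dict) -> int:
    """Reveal a shared value: every party sends its share to every other party."""
    log["elements_sent"] += N_PARTIES * (N_PARTIES - 1)
    return sum(shares) % PRIME


def beaver_triple() -> tuple[list[int], list[int], list[int]]:
    """Hypothetical trusted dealer (offline phase): shares of random a, b and c = a*b."""
    a, b = secrets.randbelow(PRIME), secrets.randbelow(PRIME)
    return share(a), share(b), share(a * b % PRIME)


def mul(x_sh: list[int], y_sh: list[int], log: dict) -> list[int]:
    """Multiply two shared values; d and e can be opened in one communication round."""
    a_sh, b_sh, c_sh = beaver_triple()
    # d = x - a and e = y - b are safe to reveal because a and b are uniformly random masks.
    d = open_value([(xi - ai) % PRIME for xi, ai in zip(x_sh, a_sh)], log)
    e = open_value([(yi - bi) % PRIME for yi, bi in zip(y_sh, b_sh)], log)
    # Local step: z_i = c_i + d*b_i + e*a_i, so the shares sum to c + d*b + e*a.
    z_sh = [(ci + d * bi + e * ai) % PRIME for ai, bi, ci in zip(a_sh, b_sh, c_sh)]
    z_sh[0] = (z_sh[0] + d * e) % PRIME  # one party adds public d*e; total is now x*y
    return z_sh


log = {"elements_sent": 0}
z_sh = mul(share(6), share(7), log)
print(sum(z_sh) % PRIME, log)  # 42 {'elements_sent': 4}
```

Adding shares, or multiplying them by a public constant, is purely local. Every product of two shared values, however, forces the parties to open the masked values `d` and `e`, which costs a network exchange. A transformer forward pass contains an enormous number of such products, plus non-linear functions that need further interaction. Protocols therefore batch these products into rounds, and the network becomes the limiting factor.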

Section 3: Real-World Applications and Industry Impact

The theoretical benefits of private inference are clear, but its true value is realized in practical, real-world scenarios. This technology is poised to change how high-stakes industries interact with AI, opening the door to a wave of new applications.

Case Study 1: GPT in Healthcare

A major hospital wants to use a powerful multimodal (vision-capable) LLM to assist radiologists. The model needs to analyze a patient’s medical scans along with their entire electronic health record (EHR) to flag potential anomalies that a human might miss. Under the current paradigm, this would require sending immense amounts of Protected Health Information (PHI) to a third-party API, which raises serious HIPAA compliance concerns.

With MPC-powered inference, the patient’s data and scans can be processed by the LLM without ever leaving the hospital’s cryptographic control. The model provides its expert analysis, but the compute nodes see only secret-shared values. This can help improve diagnostic accuracy while upholding the highest standards of patient privacy.

Case Study 2: GPT in Finance

An investment bank wants to develop a custom GPT agent to analyze its confidential M&A deal flow, internal research, and client communications to identify new strategic opportunities. The data involved is among the most sensitive in the financial world. Using private inference, the bank can fine-tune and run a proprietary LLM on this data.

The MPC framework keeps the bank’s trade secrets and client data cryptographically protected even if the compute nodes are run by different cloud providers, provided those providers do not collude beyond the protocol’s threshold. This prevents data leakage and provides a competitive edge.

Case Study 3: GPT in Legal Tech

A law firm is handling a massive e-discovery case involving millions of documents protected by attorney-client privilege. It needs an advanced LLM to parse, summarize, and categorize these documents, but sending them to a standard API is unthinkable. A private inference solution lets the firm use AI to:

- drastically speed up its workflow;
- identify key evidence;
- build a stronger case.

All of this happens without exposing confidential client information to the compute providers.

Beyond these core areas, applications abound wherever the source data is valuable and sensitive: marketing (analyzing private customer data), content creation (drawing on proprietary material), and even creative fields.

Section 4: Benefits, Challenges, and Best Practices for Adoption

While MPC-powered inference is a monumental step forward, adopting it requires a clear-eyed understanding of its advantages, its limitations, and the best practices for implementation. This is crucial for organizations building their strategy around AI platforms and tools.

The Unmistakable Benefits (Pros)

- Unprecedented Confidentiality: It provides a cryptographic guarantee of data privacy under clearly stated trust assumptions, moving beyond mere policy-based promises.

- Regulatory Compliance: It can make it feasible to use powerful AI in environments regulated by GDPR, HIPAA, and CCPA.

- Intellectual Property Protection: Companies can use LLMs on their most valuable trade secrets without fear of exposure.

- Competitive Performance: Recent benchmarks suggest it is significantly faster than alternatives like HE, which makes private AI practical for interactive applications.

The Implementation Hurdles (Cons & Pitfalls)

- System Complexity: Deploying and managing a distributed network of MPC nodes is far more complex than calling a simple REST API.

- Computational Overhead: While faster than HE, it is still slower and more resource-intensive than non-private, plaintext inference. A cost-benefit analysis is essential.

- Network Sensitivity: The system’s performance is highly dependent on the network quality between nodes. High latency can degrade the user experience.

- Ecosystem Maturity: The tools and platforms for building, deploying, and integrating these solutions are still emerging and represent the cutting edge of the field.
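
The network-sensitivity point can be made concrete with a back-of-envelope model. Every parameter below (layer count, communication rounds per layer, per-layer compute time, round-trip times) is a hypothetical placeholder rather than a measured value. The model simply assumes that each generated token needs one forward pass and that each communication round costs one network round trip.

```python
def token_latency_ms(n_layers: int, rounds_per_layer: int,
                     rtt_ms: float, compute_ms_per_layer: float) -> float:
    """Rough latency of one forward pass (one generated token) under MPC.

    Assumes the rounds within a layer run sequentially and each costs one
    network round trip. All inputs are hypothetical placeholders.
    """
    return n_layers * (rounds_per_layer * rtt_ms + compute_ms_per_layer)


# Hypothetical model shape and per-layer cost, for illustration only.
PARAMS = dict(n_layers=32, rounds_per_layer=20, compute_ms_per_layer=5.0)

for label, rtt in [("co-located cluster", 0.5), ("cross-region nodes", 50.0)]:
    ms = token_latency_ms(rtt_ms=rtt, **PARAMS)
    print(f"{label}: {ms:.0f} ms per token")
```

Under these placeholder numbers, the same model goes from about half a second to over 30 seconds per token purely because of round-trip time. This is why co-locating nodes, and reducing the number of communication rounds, matters so much.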

Tips and Considerations for C-Suite and Developers

- Identify the Right Use Case: Don’t apply this powerful technology to non-sensitive data. Focus on high-value, high-risk problems where the cost of a data breach is immense.

- Start with a Hybrid Approach: Consider a model where non-sensitive parts of a task are handled by standard LLMs and only the sensitive components are routed through the private inference network.

- Evaluate the Trust Model: Understand the security guarantees of the specific MPC protocol. How many nodes must collude to break the privacy? Who operates the nodes?

- Factor in Total Cost of Ownership: The cost includes not just the computation but also the network infrastructure and the specialized talent required to manage the system.

Addressing these challenges is key to moving from research to widespread production. The open-source community is working to build more accessible tools.
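
The hybrid approach suggested in the tips above can be sketched as a simple router. This is a hypothetical illustration:

- The regex-based `is_sensitive` check is a stand-in for a proper PII/PHI classifier.
- `fake_standard_llm` and `fake_private_llm` are placeholder functions, not real APIs, representing a plaintext LLM endpoint and an MPC inference network.

```python
import re
from typing import Callable

# Hypothetical sensitivity rules; a real deployment would use a vetted PII/PHI classifier.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                              # SSN-like numbers
    re.compile(r"\b(patient|diagnosis|privileged)\b", re.IGNORECASE),  # domain keywords
]


def is_sensitive(text: str) -> bool:
    return any(p.search(text) for p in SENSITIVE_PATTERNS)


def route(segments: list[str],
          standard_llm: Callable[[str], str],
          private_llm: Callable[[str], str]) -> list[str]:
    """Send each segment to the private (MPC) backend only if it looks sensitive."""
    return [private_llm(s) if is_sensitive(s) else standard_llm(s) for s in segments]


def fake_standard_llm(prompt: str) -> str:  # placeholder for a plaintext LLM API
    return f"[standard] {prompt}"


def fake_private_llm(prompt: str) -> str:   # placeholder for an MPC inference network
    return f"[private] {prompt}"


results = route(["Summarize our public press release.",
                 "Patient 123-45-6789 shows elevated troponin."],
                fake_standard_llm, fake_private_llm)
for line in results:
    print(line)
```

Keyword rules like these will miss paraphrased or context-dependent sensitive data. In practice, a safer default may be to route everything through the private path unless a segment is known to be non-sensitive.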

Conclusion: The Dawn of Trustworthy AI

The integration of Multi-Party Computation with Large Language Models is more than an incremental improvement; it is a foundational shift in how we approach artificial intelligence. It directly confronts the most significant barrier to AI adoption in sensitive domains: the lack of data privacy and security. By enabling fast, secure, and private inference, this technology transforms LLMs from powerful but risky third-party tools into trustworthy computational partners.

This breakthrough signals the beginning of a new chapter for GPT models and the broader AI landscape. As this technology matures and becomes more accessible, it will unlock a wave of innovation in healthcare, finance, law, and beyond. It moves the conversation from “What can AI do?” to “How can we use AI safely and ethically?” The future of AI is not just about building larger or more capable models; it’s about building a secure, private, and trustworthy foundation upon which we can deploy them. The era of private AI is no longer a distant vision; it is arriving now.