Navigating the Maze: A Deep Dive into GPT Regulation and the Future of AI Safety

Introduction

The rapid proliferation of Generative Pre-trained Transformer (GPT) models has marked a watershed moment in artificial intelligence. From powering sophisticated chatbots to revolutionizing content creation, the capabilities of these systems are expanding at an exponential rate. However, this meteoric rise is accompanied by a growing chorus of concern from technologists, ethicists, and governments worldwide. The very power that makes these models so transformative also introduces complex risks, including the potential for generating harmful content, perpetuating bias, and enabling malicious activities on an unprecedented scale. As a result, the global conversation has shifted from “if” we should regulate this technology to “how.” The latest GPT Regulation News is no longer confined to academic papers; it’s shaping national strategies and international treaties. This article provides a comprehensive technical analysis of the current state of GPT regulation, exploring the underlying technological challenges, the divergent global policy approaches, and the best practices that developers and organizations must adopt to navigate this complex and rapidly evolving landscape. We will delve into the critical intersection of innovation and oversight, examining how the future of AI is being written in the language of both code and law.

Section 1: The Rationale and Current Landscape of AI Regulation

The push for AI regulation is not a hypothetical exercise; it is a direct response to the demonstrated and potential risks inherent in large-scale AI systems. The core issue stems from the dual-use nature of powerful GPT models. While they can be used for immense good, they can also be weaponized. Internal safety evaluations and “red teaming” exercises on early, less-aligned models have revealed unsettling capabilities, such as the ability to devise sophisticated manipulation tactics, generate instructions for dangerous activities, or create highly convincing disinformation at scale. This has brought GPT Safety News and GPT Ethics News to the forefront of public discourse.

Key Drivers for Regulation

Several critical risk areas are driving the global regulatory momentum:

- Misinformation and Disinformation: The ability of models to generate fluent, contextually aware, and persuasive text makes them powerful tools for creating fake news, propaganda, and social engineering campaigns. This poses a direct threat to democratic processes and social cohesion.

- Bias and Fairness: GPT models are trained on vast swathes of internet data, which inevitably contains historical and societal biases. Without careful mitigation, these models can perpetuate and even amplify harmful stereotypes in applications ranging from hiring tools to loan approvals, making GPT Bias & Fairness News a critical area of research.

- Privacy Concerns: The training data for these models can inadvertently include personally identifiable information (PII). There are documented cases of models regurgitating private data they were trained on, leading to significant GPT Privacy News and concerns over data governance.

- Malicious Use and Dual-Use Capabilities: Beyond disinformation, there are concerns about models being used to write malicious code, plan cyberattacks, or provide expertise in dangerous domains. The latest OpenAI GPT News often includes updates on their efforts to curb such misuse through refined safety policies for their GPT APIs News.

This evolving threat landscape has forced a reactive but necessary response from policymakers. The focus is on creating frameworks that can foster innovation while establishing guardrails to protect the public. The entire GPT Ecosystem News is now colored by these regulatory discussions, impacting everything from small startups using GPT Plugins News to large enterprises building on custom models.

Section 2: The Technical Gauntlet: Why Regulating GPT Models is Inherently Difficult

Crafting effective AI regulation is a formidable challenge due to the unique technical characteristics of GPT models. Unlike traditional software, where logic is explicitly programmed, the behavior of a neural network is emergent and often unpredictable. This creates several deep-seated technical hurdles for regulators and developers alike.

The “Black Box” Problem and Emergent Capabilities

At the heart of the issue is the interpretability problem. The complex web of billions of parameters within a model like GPT-4 makes it nearly impossible to trace exactly why a specific input produces a particular output. This “black box” nature complicates audits and accountability. Furthermore, as models scale up—a key topic in GPT Scaling News—they develop “emergent capabilities” that were not explicitly trained for or anticipated by their creators. The latest GPT Architecture News highlights efforts to build more transparent models, but we are far from a complete solution. This unpredictability means that even with rigorous pre-deployment testing, a model could exhibit dangerous behavior in a novel, real-world scenario.

The Fragility of AI Safety Alignment

Model developers invest heavily in “safety alignment,” a process that uses techniques like Reinforcement Learning from Human Feedback (RLHF) to steer a model away from harmful outputs. This is a central theme in GPT-4 News and a major focus for the upcoming GPT-5 News. However, this alignment is notoriously brittle. Adversarial attacks, also known as “jailbreaks,” can use clever prompting to bypass these safety filters. The challenge is immense: defenders must patch every possible vulnerability, while an attacker only needs to find one. This is particularly concerning for developers using GPT Fine-Tuning News to create specialized versions, as the fine-tuning process can inadvertently weaken the original safety alignment. The open-source community, a vibrant source of GPT Open Source News, faces an even greater challenge in enforcing safety standards across countless forks and custom-trained models.

The Intersection of Performance and Safety

In the real world, model deployment involves trade-offs. Techniques like GPT Quantization News and GPT Distillation News are used to compress large models, making them faster and cheaper to run, which is critical for GPT Inference News and low-latency applications. This is essential for deploying models on consumer devices (GPT Edge News) or in high-throughput environments. However, these GPT Optimization News techniques can sometimes degrade a model’s performance in subtle ways, potentially affecting its safety alignment or its ability to adhere to complex rules. Ensuring that an optimized model remains as safe as its full-sized counterpart is a significant and ongoing area of GPT Research News, with new safety GPT Benchmark News suites being developed to validate performance post-compression.

Section 3: A World Divided: Global Approaches to GPT Governance

As nations grapple with the implications of powerful AI, a patchwork of regulatory strategies is emerging across the globe. These differing philosophies reflect unique political, economic, and cultural priorities, creating a complex compliance landscape for developers and businesses operating internationally.

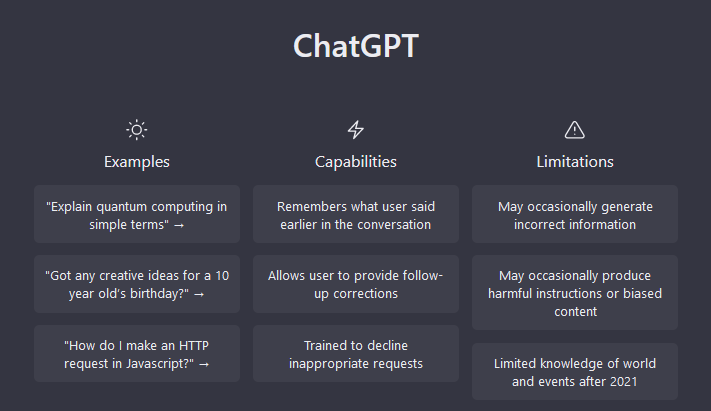

The European Union: The Risk-Based AI Act

The EU has taken a comprehensive, rights-focused approach with its landmark AI Act. This regulation categorizes AI applications into risk tiers: unacceptable, high, limited, and minimal. General-purpose AI models like those discussed in ChatGPT News are designated as systemic risks, requiring stringent transparency, data governance, and risk management obligations from their providers. Applications in sensitive sectors, a major topic for GPT in Healthcare News and GPT in Legal Tech News, will likely fall under the “high-risk” category, demanding rigorous conformity assessments before they can be deployed. This framework places a heavy compliance burden on developers but aims to create a trusted and ethical AI market.

The United States: An Innovation-First, Sector-Specific Strategy

The U.S. has adopted a more pro-innovation stance, characterized by the White House’s Executive Order on AI and the NIST AI Risk Management Framework. The approach relies heavily on voluntary commitments from leading AI labs and encourages the development of industry standards rather than imposing broad, top-down legislation. This strategy aims to maintain the country’s competitive edge, a key point in discussions around GPT Competitors News. Regulation is expected to be more sector-specific, with agencies like the FDA and SEC developing rules for AI use in their respective domains, such as GPT in Finance News. This creates a more fragmented but potentially more flexible environment for those building GPT Applications News.

China: State-Centric Control and Content Regulation

China’s approach is distinctly state-centric, prioritizing social stability and national security. Its regulations require providers of generative AI services to register their algorithms with the state and impose strict liability for the content generated. The rules mandate that AI-generated content must adhere to “core socialist values,” giving the government significant control over the information landscape. This has profound implications for GPT Multilingual News and cross-border data flows, impacting how global GPT Platforms News can operate within the country.

This global divergence means that a company developing GPT Agents News or deploying GPT Chatbots News for a worldwide audience must navigate a maze of conflicting legal requirements, making international compliance a significant operational and technical challenge.

Section 4: The Path Forward: Best Practices for Developers and Policymakers

Navigating the nascent world of AI regulation requires a proactive and collaborative approach. Both the creators of AI technology and the policymakers shaping its use share the responsibility for building a safe and beneficial AI future. Here are actionable recommendations for both groups.

Recommendations for Developers and Organizations

- Embrace “Safety by Design”: Do not treat safety as an afterthought. Integrate ethical reviews, bias testing, and red teaming into every stage of the model lifecycle, from curating GPT Datasets News to final GPT Deployment News. Utilize a suite of GPT Tools News designed for model evaluation and monitoring.

- Invest in Robust Monitoring and Governance: Once a model is deployed, its behavior must be continuously monitored for performance degradation, emergent biases, or misuse. This involves setting up sophisticated logging and alerting systems to track GPT Latency & Throughput News alongside safety metrics.

- Prioritize Transparency: Create and maintain detailed documentation, such as model cards and datasheets, that clearly outline a model’s capabilities, limitations, training data, and intended use cases. This is becoming a standard expectation for those using GPT Integrations News in commercial products.

- Stay Informed and Agile: The regulatory landscape is in flux. Businesses building applications in areas like GPT in Marketing News or GPT in Content Creation News must stay abreast of legal developments to ensure ongoing compliance.

Recommendations for Policymakers

- Foster Technical Expertise: Regulators must develop deep technical expertise to create rules that are effective and not easily circumvented. This involves close collaboration with the AI research community.

- Promote International Standards: Given the borderless nature of AI, international cooperation on safety benchmarks, testing protocols, and risk definitions is crucial to avoid a fractured and inefficient global regulatory system.

- Balance Innovation and Oversight: Regulation should be agile and risk-based, creating strong guardrails for high-stakes applications while providing sandboxes and lighter-touch rules for research and low-risk innovation, especially to support the GPT Open Source News community.

Conclusion

The conversation around GPT regulation has reached a critical inflection point. The undeniable power of these models brings with it a profound responsibility to manage their risks. As we’ve seen, this is not merely a policy debate but a deep technical challenge rooted in the very nature of modern AI architecture. The path forward is not a simple choice between innovation and regulation; it is about weaving them together. For developers, this means embedding safety and ethics into the core of their development process. For policymakers, it requires crafting intelligent, adaptive, and globally-aware frameworks that protect the public without stifling progress. The ongoing developments in GPT Trends News and the highly anticipated GPT Future News will continue to test our ability to govern this transformative technology wisely. Ultimately, building a future where AI is both powerful and safe is a shared responsibility, demanding collaboration, foresight, and a steadfast commitment to human values.