The Transparency Paradox: Navigating the Murky Waters of GPT Ethics and Safety News

The world of artificial intelligence is moving at a breathtaking pace. Every week brings news of new GPT models, with breakthroughs in capability that were once the stuff of science fiction. From the versatile GPT-4 to the whispers surrounding an upcoming GPT-5, large language models (LLMs) are being woven into the fabric of our digital lives. They power everything from sophisticated chatbots to groundbreaking applications in science and industry. Yet, as these models become more powerful and ubiquitous, a troubling paradox has emerged: the more capable they become, the less we seem to know about how they are built, what they are trained on, and how they truly work. This growing “transparency deficit” is at the heart of the most critical conversations in AI ethics today. It’s a challenge that moves beyond academic debate, directly impacting developers, businesses, policymakers, and society at large. This article delves into the critical issue of transparency in AI, exploring why the creators of today’s most advanced systems are closing their doors, what the real-world consequences are, and how we can chart a course toward a more responsible and open future.

The Growing Transparency Deficit in Generative AI

The current landscape of AI development marks a significant departure from the field’s more collaborative and open roots. What was once a domain driven by academic research and a spirit of shared discovery has become a fiercely competitive commercial race. This shift has profound implications for transparency, affecting everything from model safety to the future of the open-source community.

From Open Collaboration to Closed Competition

In the early days of deep learning, research papers often included detailed methodologies, architectures, and links to datasets. This openness fueled rapid innovation across the entire ecosystem. However, as the financial stakes have skyrocketed, the industry’s biggest players have become increasingly secretive. The very name “OpenAI” has become ironic in the eyes of many, as the details behind its flagship models, from GPT-3.5 to GPT-4, are now closely guarded trade secrets. This secrecy is driven by a desire to protect intellectual property and maintain a competitive edge in a multibillion-dollar market. The result is a situation where the most influential technologies of our time are developed behind a veil of corporate confidentiality, leaving the broader community of researchers and developers to guess at the specifics of their creation.

Defining Transparency in the Age of GPT

When discussing transparency in AI, it’s crucial to understand that it encompasses more than just open-sourcing a model’s code. True, meaningful transparency for a large language model involves a multi-layered approach that provides insight into its entire lifecycle:

- Data Transparency: This is arguably the most critical component. What is in the training datasets? Was the data ethically sourced? Does it include copyrighted material, private user data, or toxic content from the web? Without knowing the ingredients, we cannot fully understand the model’s potential biases, knowledge gaps, or harmful tendencies.

- Model and Architecture Transparency: This involves sharing detailed information about the model’s architecture, including the number of parameters, the specific training methods used, and any novel training techniques employed. It also includes data on the immense computational resources and hardware required, which have significant environmental and economic implications.

- Evaluation and Alignment Transparency: How was the model tested? Benchmark results should be public, detailing performance on a wide range of tasks, especially those related to bias and fairness. Furthermore, the processes for safety alignment, such as fine-tuning and Reinforcement Learning from Human Feedback (RLHF), should be documented. Who were the human raters, and what instructions were they given? This is fundamental to understanding a model’s purported safety features.

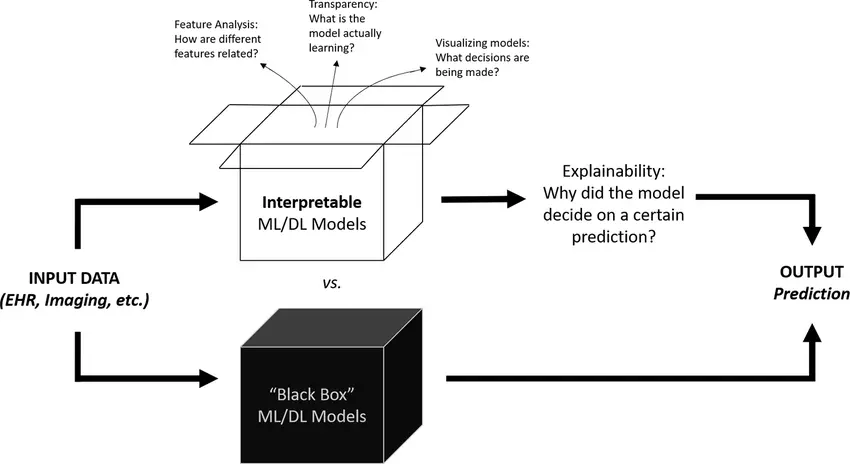

Deconstructing the “Black Box”: Key Areas of Obscurity

The term “black box” is often used to describe complex AI systems whose inner workings are opaque even to their creators. However, in the context of OpenAI’s GPT models and their competitors, a significant part of this obscurity is not inherent to the technology but is a result of deliberate corporate policy. Let’s break down the key areas where transparency is most lacking.

The Enigma of Training Data

The foundation of any GPT model is its training data. This data dictates its personality, its knowledge base, its linguistic style, and, most importantly, its inherent biases. The current industry standard is to scrape colossal amounts of text and images from the internet, a process fraught with ethical and legal peril. This has direct privacy implications, as personal information from blogs, forums, and social media can be ingested without consent. It also raises copyright questions that are central to ongoing lawsuits and to current regulatory debates. When a model used in legal tech hallucinates a non-existent legal precedent, or a model used in healthcare provides biased medical advice, the root cause often lies in the unvetted, undisclosed training data. The lack of clarity here makes it impossible for external auditors to proactively identify and mitigate these risks.

Opaque Architectures and Alignment “Secret Sauce”

Beyond the data, the specific techniques used to build and refine these models are also kept under wraps. While we have general knowledge of transformer architectures, the exact innovations driving scaling and performance improvements are trade secrets. This includes advancements in efficiency, such as compression, quantization, and distillation, which are crucial for practical deployment and for enabling use cases on edge devices. Similarly, the “secret sauce” of safety alignment is a major area of opacity. The precise methods used to steer a model away from generating harmful content are a key selling point, yet the process is not open to public scrutiny. This means we must take safety claims on faith, without the ability to independently verify the robustness of these critical guardrails. This is particularly concerning for the development of autonomous GPT agents, which are expected to perform complex tasks with minimal human oversight.

The Ripple Effect: The Real-World Consequences of AI Opacity

The lack of transparency is not an abstract academic problem; it has tangible, far-reaching consequences that affect the entire AI landscape, from independent researchers to enterprise users of GPT APIs and developers building custom models. The opacity of major AI labs creates a ripple effect that undermines trust, stifles innovation, and concentrates power.

Impeding Independent Research and Safety Audits

One of the most significant consequences of this secrecy is the chilling effect on independent research and auditing. Without access to the model weights, training data, or detailed architectural information, third-party researchers cannot conduct rigorous, independent studies. They cannot properly test for vulnerabilities, explore novel failure modes, or verify the safety claims made by the developers. As a result, unbiased research on these models is very hard to come by. The community is left to “poke the box from the outside” using public-facing interfaces like ChatGPT, which is an insufficient method for deep safety and bias analysis. This slows down the collective progress on AI safety and makes society as a whole more vulnerable to unforeseen risks as these models are integrated into critical systems.

Eroding Trust and Complicating Accountability

Trust is the currency of technological adoption. When developers of powerful AI systems are not forthcoming about their methods, it breeds suspicion and erodes public trust. For applications in sensitive fields like finance, where models might be used for credit scoring, or education, where they assist in grading, a lack of transparency is a non-starter. Furthermore, when an AI system causes harm, whether by generating misinformation, exhibiting discriminatory behavior, or enabling malicious use, opacity makes accountability nearly impossible. Was the failure due to biased data, a flaw in the alignment process, or an unforeseen emergent behavior? Without transparency, assigning responsibility becomes a futile exercise, leaving victims without recourse and developers without clear lessons on how to prevent future failures.

Stifling Competition and Open Innovation

The current trend towards secrecy creates a deep moat around a few dominant companies, stifling competition and hindering the broader innovation ecosystem. Startups, academics, and the open-source community cannot build upon, improve, or effectively compete with technologies they cannot inspect or understand. This dynamic affects everything from the development of new tools and platforms to advancements in inference engines. The promise of a democratized AI future, fueled by the open-source movement, is threatened when foundational models are proprietary black boxes. This concentration of power not only limits consumer choice but also narrows the diversity of thought and values being embedded into the AI systems that will shape our future.

Charting a Course: Recommendations for a More Transparent AI Future

Addressing the transparency deficit requires a multi-pronged approach involving self-regulation by developers, thoughtful government oversight, and increased advocacy from the user community. The goal is not necessarily to force every model to be fully open source, but to establish a spectrum of transparency that is appropriate to a model’s power and potential for impact.

For AI Developers and Organizations

Leading AI labs must move beyond paying lip service to ethics and take concrete steps towards greater transparency. A practical approach is the adoption of “Model Cards” and “Datasheets,” standardized documents that provide detailed information about a model’s performance, limitations, and training data. Organizations should also invest in process transparency, documenting and sharing their methodologies for data curation, bias testing, and safety alignment. For high-stakes applications, voluntarily submitting models to independent, third-party audits can build trust and demonstrate a genuine commitment to safety and ethics. This proactive stance is essential for maintaining public confidence as the field continues to change rapidly.
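
As a rough illustration of what a machine-readable model card might contain, the sketch below defines a minimal record covering performance, limitations, and training data. Every field name and example value here is a hypothetical placeholder chosen for illustration; it does not follow any particular lab’s or library’s schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    """A minimal, hypothetical model card; all field names are illustrative."""
    model_name: str
    intended_use: str
    training_data_summary: str
    known_limitations: list[str] = field(default_factory=list)
    evaluation_results: dict[str, float] = field(default_factory=dict)
    independently_audited: bool = False

    def missing_sections(self) -> list[str]:
        """Return the names of sections that were left empty."""
        return [name for name, value in asdict(self).items()
                if value in ("", [], {})]

    def to_json(self) -> str:
        """Serialize the card so it can be published alongside the model."""
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="example-llm",  # placeholder name, not a real model
    intended_use="Drafting and summarizing internal documents.",
    training_data_summary="",  # deliberately left blank for the demo
    known_limitations=["May state false information confidently."],
)
print(card.missing_sections())
```

A check like `missing_sections()` is one simple way a publisher or auditor could flag a card that omits its training data description or evaluation results before release.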

For Policymakers and Regulators

Governments have a critical role to play in setting the floor for transparency. Recent regulatory developments around the world indicate a growing appetite for such measures. Regulation should be risk-based, mandating higher levels of transparency for AI systems used in critical areas like healthcare, finance, and law. This could include requirements for data sourcing disclosures and independent auditing before a model can be deployed in a high-risk context. Policymakers can also create positive incentives, such as grants or tax benefits, for companies that exceed minimum transparency standards and contribute to the open-source ecosystem.
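
One way to picture a risk-based rule is as a lookup from deployment domain to required disclosures. The sketch below is purely illustrative: the tier contents, the baseline requirement of a model card, and the function names are assumptions made for this example and are not drawn from any actual regulation.

```python
# Hypothetical tiers. The high-risk domains mirror the examples in the text;
# the baseline "model card" requirement is an assumption for illustration.
HIGH_RISK_DOMAINS = {"healthcare", "finance", "law"}

BASELINE_DISCLOSURES = ["model card"]
HIGH_RISK_DISCLOSURES = BASELINE_DISCLOSURES + [
    "data sourcing disclosure",
    "independent audit",
]


def required_disclosures(domain: str) -> list[str]:
    """Return the disclosures required before deploying in `domain`."""
    if domain.lower() in HIGH_RISK_DOMAINS:
        return list(HIGH_RISK_DISCLOSURES)
    return list(BASELINE_DISCLOSURES)


def can_deploy(domain: str, provided: set[str]) -> bool:
    """True only if every required disclosure has been provided."""
    return set(required_disclosures(domain)) <= provided


print(can_deploy("healthcare", {"model card"}))
print(can_deploy("marketing", {"model card"}))
```

Under these assumed tiers, a model with only a model card would be cleared for a low-risk domain but blocked from a healthcare deployment until the data sourcing disclosure and independent audit are supplied.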

For the Broader AI Community

Finally, users, developers, and researchers must continue to advocate for transparency. By supporting and contributing to open-source alternatives, we can ensure a vibrant and competitive ecosystem. When choosing AI tools and platforms, we should favor those that provide greater clarity about their inner workings. This market pressure can incentivize even the largest players to become more open about their products, from plugins to enterprise-level integrations.

Conclusion

The journey of generative AI is at a critical juncture. The incredible advancements in models from GPT-4 to the next generation promise to reshape our world in countless positive ways, from revolutionizing content creation to accelerating scientific discovery. However, the current trajectory towards increasing secrecy threatens to undermine this potential. The transparency paradox, whereby our most powerful tools are also our most opaque, is unsustainable. It erodes trust, hinders safety research, and concentrates power in the hands of a few. The future of AI will be defined not just by the raw capabilities of our models, but by our ability to build them responsibly, ethically, and transparently. Moving AI ethics from a peripheral concern to a central pillar of development is the most important task ahead for the entire AI community. The path to a trustworthy AI future is not through secrecy, but through a shared commitment to openness and accountability.