The 1 Million Token Horizon: How Massive Context Windows Are Revolutionizing GPT Agents

The Dawn of Million-Token Memory: Redefining Agent Capabilities

In the rapidly evolving landscape of artificial intelligence, AI agents (autonomous systems capable of reasoning, planning, and executing complex tasks) have long been a coveted goal. One fundamental limitation has persistently constrained them: a limited “short-term memory,” technically known as the context window. Recent advances in large language model (LLM) architecture are loosening this constraint, and some models can now process more than one million tokens in a single prompt. This is more than an incremental update; it is a paradigm shift for GPT agents and their applications across industries. Agents that once depended on complex external memory systems can now hold entire books, codebases, or long conversation histories in active memory, opening the door to far greater coherence and analytical depth. For anyone tracking GPT agents, this is one of the defining trends of the moment and a glimpse of where the field is heading.

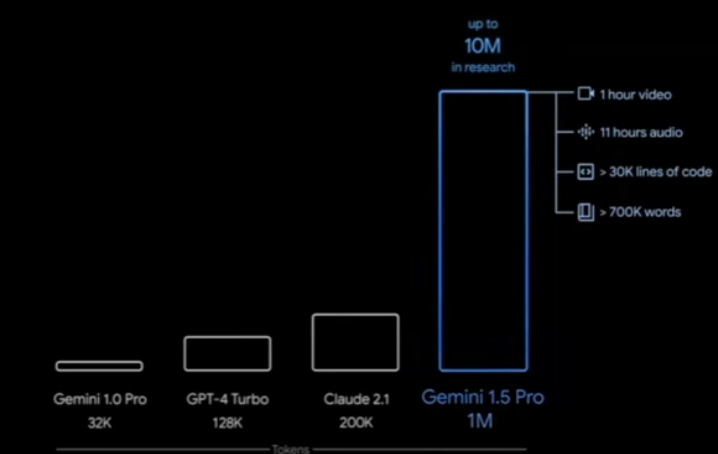

The “Long Context Wars” have become a central battleground for AI supremacy, with OpenAI, Anthropic, Google, and others pushing the boundaries of what is possible. Early GPT-3.5 models operated with a few thousand tokens, and GPT-4 Turbo expanded this to 128,000; the new frontier is nearly an order of magnitude larger still. This growth is not merely about fitting more words into a prompt; it enables a new class of reasoning. It reduces reliance on Retrieval-Augmented Generation (RAG), a technique in which the agent repeatedly fetches relevant snippets from an external database and can fail whenever retrieval misses the right passage. Instead, agents can perform “in-context learning” at massive scale. The shift rests on advances in transformer efficiency and model scaling, which makes this a pivotal moment for developers and businesses building the next generation of intelligent systems. As the community awaits GPT-5, expanded context windows are widely seen as a defining feature of the next generation of foundation models and of the broader GPT ecosystem.
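To make the RAG-versus-long-context contrast concrete, here is a minimal sketch. The word-overlap scorer is a toy stand-in for the embedding similarity search a real RAG pipeline would use, and the function names (`retrieve`, `build_prompt`) are hypothetical. In RAG mode only the top-ranked chunks reach the model; in long-context mode every chunk is sent.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Rank chunks by word overlap with the query and return the top k.

    Toy scorer standing in for embedding similarity (an assumption).
    Ties keep their original order because Python's sort is stable.
    """
    q = Counter(tokenize(query))

    def score(chunk: str) -> int:
        c = Counter(tokenize(chunk))
        return sum(min(q[w], c[w]) for w in q)

    return sorted(chunks, key=score, reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], long_context: bool, k: int = 2) -> str:
    """RAG mode sends only retrieved chunks; long-context mode sends everything."""
    context = chunks if long_context else retrieve(query, chunks, k)
    return "\n\n".join(context) + f"\n\nQuestion: {query}"
```

If retrieval ranks the wrong chunk first, RAG mode silently drops the passage the model needed; long-context mode avoids that failure at the price of a much larger prompt.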

From Fragmented Tasks to Coherent, Long-Term Reasoning

The technical implications of a million-token context window are profound. It lets GPT agents move beyond simple, stateless tasks and take on complex, long-running projects with full situational awareness. For OpenAI and for developers working with GPT APIs, it changes how applications are built on these models.

Unlocking “Single-Shot” Analysis of Massive Datasets

Previously, analyzing a large document or an entire software repository meant “chunking” the data into smaller pieces, processing each one separately, and then attempting to synthesize the results, a process prone to losing critical context. With a massive context window, an agent can ingest the whole dataset in one pass, provided it fits within the token limit. For developers working with code models, this is transformative. An agent can be fed an entire small-to-mid-sized legacy codebase to trace complex interdependencies, find deeply buried security vulnerabilities, suggest holistic refactoring strategies, and generate comprehensive, context-aware documentation. The same capability extends to any text-heavy domain: a legal agent can review a 500-page merger agreement, a financial agent can analyze years of quarterly earnings reports, and a research agent can consume a dense medical textbook to answer nuanced questions, all within a single, coherent analytical frame. Tokenization also matters more than ever, since it determines how much text fits into a given window and how faithfully the model interprets it.
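The single-shot-versus-chunking decision can be sketched as a simple budget check. This is a minimal illustration, assuming roughly four characters per token (real tokenizers vary by model and language) and a hypothetical `reserve` of tokens held back for instructions and the model's answer. If the corpus fits, it is sent whole; otherwise documents are greedily packed into batches, which is the older chunked workflow.

```python
CHARS_PER_TOKEN = 4  # rough heuristic for English text; an assumption, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def plan_analysis(
    documents: list[str],
    context_limit: int = 1_000_000,
    reserve: int = 50_000,  # hypothetical headroom for instructions and output
) -> tuple[str, list[list[str]]]:
    """Return ("single-shot", [all docs]) if everything fits, else greedy batches."""
    budget = context_limit - reserve
    total = sum(estimate_tokens(d) for d in documents)
    if total <= budget:
        return "single-shot", [documents]

    # Greedy chunking: start a new batch whenever the next document would overflow.
    # A single document larger than the budget still gets its own (oversized) batch.
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for doc in documents:
        cost = estimate_tokens(doc)
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(doc)
        used += cost
    if current:
        batches.append(current)
    return "chunked", batches
```

With a million-token limit, most single contracts or report collections take the first branch; the chunked branch, and the synthesis step it forces, only appears for corpora larger than the window.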

Enhancing Multi-Turn Consistency and Statefulness

One of the biggest challenges for conversational AI and long-running agents has been maintaining state. After a few turns, models would often “forget” earlier parts of the conversation, leading to frustrating and repetitive interactions. A million-token window largely removes this problem for most practical purposes, as long as the application resends the accumulated history with each request and that history stays within the limit. This is a major step for AI assistants, enabling truly personalized, stateful companions. An AI tutor can keep a long history of a student's questions in view, a corporate assistant can recall details from weeks of project meetings, and chatbots can maintain a consistent persona and memory across thousands of interactions. This native statefulness simplifies the development stack, reducing the need for external vector databases and complex memory-management logic, which is welcome news for developers building agentic systems.
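In the common pattern where the client resends the conversation on every request, “memory” is just the history buffer the application keeps. The sketch below is a hypothetical `ConversationMemory` class (not any vendor's SDK) that stores every turn, evicts the oldest only when a token budget is exceeded, and emits messages in the role/content shape many chat APIs use. The four-characters-per-token estimate is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Hypothetical history buffer; eviction only triggers past max_tokens."""

    max_tokens: int = 1_000_000
    turns: list[tuple[str, str]] = field(default_factory=list)

    @staticmethod
    def _cost(text: str) -> int:
        return -(-len(text) // 4)  # ~4 chars per token, an assumption

    def total_tokens(self) -> int:
        return sum(self._cost(text) for _, text in self.turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Drop the oldest turns once over budget, but always keep the newest one.
        while len(self.turns) > 1 and self.total_tokens() > self.max_tokens:
            self.turns.pop(0)

    def as_messages(self) -> list[dict[str, str]]:
        """Full history, oldest first, ready to send with the next request."""
        return [{"role": role, "content": text} for role, text in self.turns]
```

With a small window the eviction loop runs constantly and early turns vanish; with a million-token budget it rarely fires, which is the practical meaning of “native statefulness” here.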

The Rise of In-Context Fine-Tuning and Multimodality

Massive context windows enable a powerful form of on-the-fly specialization that blurs the line with traditional model training, a notable development for anyone weighing fine-tuning. Instead of spending significant resources on fine-tuning a model, a developer can provide extensive documentation, style guides, and hundreds of examples directly in the prompt. The model uses this information “in context” to adapt its behavior to a specific task, effectively becoming a temporary expert; unlike fine-tuning, nothing is learned permanently, so the material must be supplied again with every request. As these capabilities merge with multimodal models, the potential grows further. Imagine an agent that can process a feature-length film together with its script, a complex architectural plan with all its engineering specifications, or a patient's entire medical history, including text notes, lab results, and radiological images. This convergence of long context and vision will let agents reason across different data types within a single, unified context, unlocking applications we are only beginning to imagine.
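The in-context alternative to fine-tuning amounts to prompt assembly. The following is a minimal sketch with a hypothetical `build_many_shot_prompt` helper that packs as many input/output examples as fit a token budget after the instructions, then appends the new query. The `Input:`/`Output:` layout and the four-characters-per-token estimate are assumptions, not a format any particular model requires.

```python
def build_many_shot_prompt(
    instructions: str,
    examples: list[tuple[str, str]],
    query: str,
    token_budget: int = 900_000,  # hypothetical share of a 1M-token window
) -> tuple[str, int]:
    """Return (prompt, number_of_examples_included)."""

    def cost(s: str) -> int:
        return -(-len(s) // 4)  # ~4 chars per token, an assumption

    parts = [instructions]
    used = cost(instructions) + cost(query)
    included = 0
    for inp, out in examples:
        block = f"Input: {inp}\nOutput: {out}"
        if used + cost(block) > token_budget:
            break  # stop at the first example that would overflow the budget
        parts.append(block)
        used += cost(block)
        included += 1
    # The model is expected to continue the pattern after the final "Output:".
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts), included
```

A small window forces a handful of examples; a million-token budget can hold hundreds, which is what lets the prompt stand in for a lightweight fine-tune.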

Transforming Industries: GPT Agents with Unprecedented Context

The theoretical benefits of massive context windows translate directly into tangible, industry-disrupting applications. The ability to process and reason over vast, domain-specific documents in a single pass is set to revolutionize knowledge work and create new efficiencies, and it is already inspiring a new generation of startups and enterprise solutions.

Legal and Financial Document Analysis

In the legal and financial sectors, professionals spend countless hours reviewing dense, lengthy documents. In legal tech, agents are emerging that can ingest entire case files, discovery documents, or complex contracts and identify key clauses, risks, and precedents in minutes instead of days. For instance, a compliance agent can check a new piece of financial regulation against a bank's entire internal policy manual and flag potential conflicts. In finance, this depth of analysis supports more thorough due diligence and risk assessment, fundamentally changing the workflow for analysts and lawyers.

Enterprise Codebase Management and Development

For large enterprises, legacy codebases are often a major bottleneck to innovation. An agent with a million-token context can become a powerful navigator of technical debt. A developer can ask questions like, “Where in our codebase is user authentication handled, and what are the potential downstream impacts of changing this module?” A million tokens holds very roughly a hundred thousand lines of code or fewer, depending on the language and tokenizer, so a multi-million-line monolith still has to be narrowed to the relevant services first. Within that limit, such an agent accelerates debugging and modernization and serves as an invaluable onboarding tool for new engineers. This practical application is a significant driver of enterprise deployment, as companies seek tangible engineering productivity gains from AI.
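Feeding a repository to a long-context agent usually starts with flattening it into one text blob. Below is a minimal sketch using only the standard library. The hypothetical `pack_repository` function walks a directory in a deterministic order, prefixes each source file with its path so the model can cite locations, and skips files once an estimated token budget is reached. The extension list, the `### FILE:` header, and the four-characters-per-token estimate are all assumptions.

```python
import os


def pack_repository(
    root: str,
    token_budget: int = 1_000_000,
    extensions: tuple[str, ...] = (".py", ".js", ".ts", ".java", ".go"),
) -> tuple[str, list[str]]:
    """Concatenate source files under root; return (packed_text, skipped_paths)."""
    parts: list[str] = []
    skipped: list[str] = []
    used = 0
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # in-place sort makes os.walk visit subdirectories in order
        for name in sorted(filenames):
            if not name.endswith(extensions):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="replace") as f:
                source = f.read()
            rel = os.path.relpath(path, root)
            block = f"### FILE: {rel}\n{source}"
            cost = -(-len(block) // 4)  # ~4 chars per token, an assumption
            if used + cost > token_budget:
                skipped.append(rel)  # report what did not fit instead of truncating
                continue
            parts.append(block)
            used += cost
    return "\n\n".join(parts), skipped
```

A non-empty `skipped` list is the signal that the repository exceeds the window and needs to be narrowed (for example, to the services involved in authentication) before asking questions like the one above.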

Scientific Research and Healthcare

The pace of scientific discovery is often limited by the human capacity to read and synthesize an ever-growing body of research. In healthcare, researchers are exploring agents that can read dozens of research papers on a specific gene or disease in one pass to formulate novel hypotheses or identify contradictory findings. A clinical agent could review a patient's complete longitudinal health record, including doctor's notes, lab results, and genomic data, to suggest personalized treatment plans for clinicians to evaluate. For research more broadly, this could shorten the path from discovery to clinical application.

Hyper-Personalized Content and Education

The ability to hold a user's long history in context is a game-changer for personalization. In education, it enables AI tutors that adapt to a student's learning style, strengths, and weaknesses based on the history of their interactions. In content creation, agents can help build dynamic, branching narratives for games or write marketing copy tailored to an individual's long-term engagement history.

The Million-Token Minefield: Costs, Latency, and Safety

While the promise of massive context windows is immense, navigating this new frontier comes with significant technical and ethical challenges. The excitement around these capabilities must be tempered with a realistic understanding of the practical hurdles involved in their deployment and a commitment to responsible innovation.

The “Needle in a Haystack” Problem and Performance

A larger context window does not automatically guarantee perfect recall. Research has shown that models can struggle to use relevant information buried in the middle of a long input (the “lost in the middle” effect), and “needle in a haystack” tests, which hide a single fact at varying depths in filler text, have become a common benchmark for probing this weakness. Furthermore, the computational cost and latency of processing a million tokens are substantial: in standard self-attention, the work needed to compute attention scores grows quadratically with sequence length, and there is a direct trade-off between context size and response time. Making these models practical requires significant optimization, including techniques such as quantization and distillation, as well as specialized hardware and efficient inference engines.
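A needle-in-a-haystack sweep is simple to set up. The sketch below builds a filler document with a known fact inserted at a chosen relative depth, then checks whether a model's answer contains it. `ask_model` is a hypothetical caller-supplied function (prompt in, text out), and the needle and filler sentences are made up for illustration. The `attention_cost_ratio` helper shows the quadratic arithmetic for the n × n score matrix of standard dense attention; many long-context systems use optimizations that change the real constant factors, so treat it as an illustration rather than a latency prediction.

```python
import random
from typing import Callable


def build_haystack(needle: str, filler: list[str], n_sentences: int,
                   depth: float, seed: int = 0) -> str:
    """Insert needle at relative depth (0.0 = start, 1.0 = end) among filler."""
    rng = random.Random(seed)  # fixed seed keeps the haystack reproducible
    hay = [rng.choice(filler) for _ in range(n_sentences)]
    hay.insert(round(depth * n_sentences), needle)
    return " ".join(hay)


def attention_cost_ratio(n_long: int, n_short: int) -> float:
    """Relative size of the n x n attention score matrix in dense self-attention."""
    return (n_long / n_short) ** 2


def run_sweep(ask_model: Callable[[str], str], needle: str, answer: str,
              filler: list[str], depths: list[float],
              n_sentences: int = 1000) -> dict[float, bool]:
    """Map each depth to whether the model's reply contains the expected answer."""
    results = {}
    for d in depths:
        prompt = build_haystack(needle, filler, n_sentences, d)
        prompt += "\n\nWhat is the secret code?"
        results[d] = answer in ask_model(prompt)
    return results
```

By this arithmetic, going from a 128,000-token to a 1,000,000-token prompt multiplies the attention-score work by about 61 (7.8125 squared). A fuller evaluation would also vary `n_sentences` to chart recall across both depth and total length.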

Ethical, Privacy, and Security Considerations

The power to process vast amounts of information in context amplifies existing ethical concerns. An agent holding a user's entire email history or a company's complete confidential document archive represents a massive security and privacy risk, which makes privacy and safety safeguards more critical than ever; a single data breach could be catastrophic. Moreover, biases embedded in a large corpus can subtly shape an agent's reasoning, a significant challenge for fairness. As these agents become integrated into critical decision-making, robust oversight, transparent governance, and clear regulatory guidelines become paramount to ensure they are used safely and equitably.

Conclusion: The Agentic Future is Long-Form

The emergence of million-token context windows is a landmark in the story of artificial intelligence. It marks a fundamental evolution in GPT agents, transforming them from tools that execute fragmented instructions into coherent partners capable of tackling complex, long-form projects. It unlocks a new tier of applications in law, finance, software development, and scientific research, enabling a depth of analysis and stateful interaction that was previously impractical. Significant challenges around cost, performance, and safety remain, but the trajectory is clear. The future of AI is not just about more intelligent models, but about models with the memory and endurance to understand our world in its full, unabridged context. For developers, businesses, and society at large, the key takeaway is that the era of the long-form, context-aware AI agent has begun.