The Multimodal Revolution: How New GPT Vision APIs are Redefining AI Interaction and Content Creation

The artificial intelligence landscape has been dominated by the remarkable capabilities of large language models (LLMs), with systems like ChatGPT demonstrating an uncanny ability to understand and generate human-like text. For years, the focus has been on mastering language. However, the latest news on GPT models signals a monumental shift beyond text toward a far richer, more human-centric form of interaction: multimodality. We are entering an era in which AI can not only read and write but also see, interpret, and create visual content with astonishing fidelity. This fusion of language and vision is not an incremental update; it is a paradigm shift, powered by new architectures that are fundamentally changing how we develop applications, conduct business, and express creativity. The latest multimodal advances, particularly the emergence of powerful, unified text-and-image APIs, are unlocking possibilities previously confined to science fiction and setting the stage for the next wave of AI-driven innovation.

The Architectural Leap: Understanding the New Multimodal GPTs

The recent breakthroughs in multimodal AI represent a significant departure from previous-generation systems. Understanding this architectural evolution is key to grasping the magnitude of the current transformation and the trajectory hinted at in recent GPT-4 announcements and speculation about GPT-5.

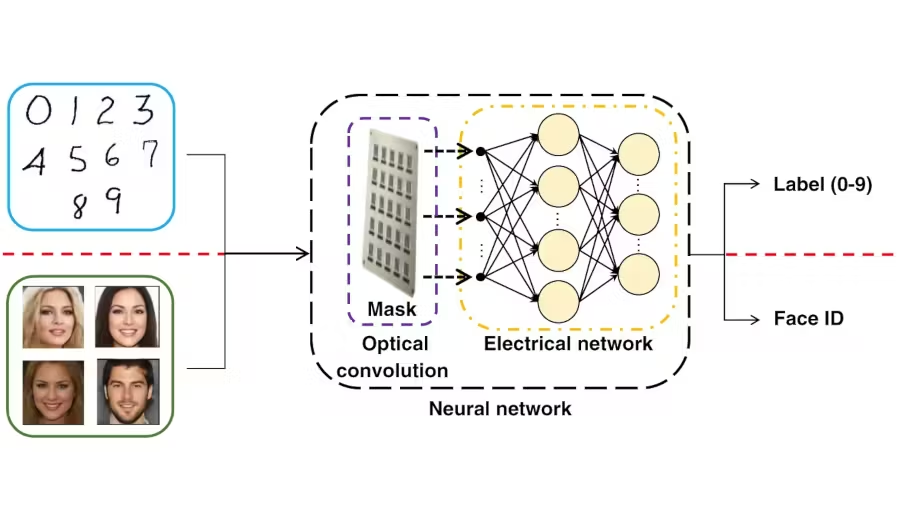

From Separate Silos to Unified Understanding

Historically, AI models handled different data types in isolated silos. A text-generating model like GPT-3.5 was architecturally distinct from an image-generating model like DALL-E 2. The two could be chained together, but they did not share a core understanding. Recent architecture news points toward unified models: systems described as processing, and in some cases generating, both text and visual data within the same “latent space,” a high-dimensional mathematical representation of concepts. In published multimodal research, this is achieved with techniques such as joint embedding, in which text tokens and image patches are projected into a shared space, and cross-attention, which lets the model correlate specific words in a prompt with specific regions or concepts in an image. The payoff of this unified approach is that the model does not merely translate text to pixels; it learns how visual and linguistic concepts relate, a core topic in ongoing GPT research.
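To make cross-attention concrete, here is a toy sketch of scaled dot-product cross-attention in plain Python. It shows the general mechanism from the research literature, not any particular GPT model’s implementation. Text tokens act as queries and image patches supply keys and values; the random matrices stand in for learned projection weights, and every dimension is an arbitrary assumption chosen for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_tokens, image_patches, w_q, w_k, w_v):
    """Let each text token (query) attend over all image patches (keys/values).

    w_q maps text features into a shared d-dimensional space; w_k and w_v map
    image-patch features into that same space.
    """
    q = matmul(text_tokens, w_q)    # (n_tokens, d)
    k = matmul(image_patches, w_k)  # (n_patches, d)
    v = matmul(image_patches, w_v)  # (n_patches, d)
    d = len(q[0])
    outputs, weights = [], []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        row = softmax(scores)  # attention of this token over every patch
        weights.append(row)
        # Weighted sum of patch values: the visual context gathered for this token.
        outputs.append([sum(wj * vj[c] for wj, vj in zip(row, v)) for c in range(d)])
    return outputs, weights


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
text = rand_matrix(3, 8, rng)      # 3 word tokens, 8-dim text features (assumed)
patches = rand_matrix(4, 12, rng)  # 4 image patches, 12-dim visual features (assumed)
out, attn = cross_attention(
    text, patches,
    rand_matrix(8, 16, rng), rand_matrix(12, 16, rng), rand_matrix(12, 16, rng),
)
print(len(out), len(out[0]))  # 3 16
```

Each row of `attn` sums to 1 and records how strongly one word attends to each image patch, which is the sense in which the model correlates words with image regions.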

Key Technical Specifications and Performance Benchmarks

While exact figures for proprietary models remain closely guarded, research papers and API documentation point to several key advancements. Training these models involves unprecedented scale, a constant theme in GPT scaling news. They are reportedly trained on massive, curated datasets containing billions of image-text pairs, far larger than the datasets used for earlier generations. This extensive training, combined with newer training techniques, is what enables their nuanced understanding.
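The article does not say which training objective these proprietary models use. One widely published way to learn a shared image-text space from paired data is the contrastive objective popularized by OpenAI’s CLIP: each image embedding should be most similar to its own caption and dissimilar to the other captions in the batch. The plain-Python sketch below assumes a fixed temperature of 0.07 (CLIP learns this value) and uses toy 3-dimensional embeddings in place of real encoder outputs.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax(xs):
    m = max(xs)
    log_sum = m + math.log(sum(math.exp(x - m) for x in xs))
    return [x - log_sum for x in xs]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over cosine similarities; pair i is the positive for row/column i."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: similarity between image i and caption j, sharpened by the temperature
    logits = [[sum(a * b for a, b in zip(im, tx)) / temperature for tx in txts] for im in imgs]
    image_to_text = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
captions = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0]]  # caption i describes image i
swapped = [captions[1], captions[0]]           # deliberately mismatched pairs

print(contrastive_loss(images, captions) < contrastive_loss(images, swapped))  # True
```

Minimizing a loss of this kind over billions of pairs pulls matching images and captions together in the shared space, which is one reason dataset scale matters so much.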

Performance is measured on a variety of tasks. According to recent benchmark results, these new models report state-of-the-art scores on tasks such as Visual Question Answering (VQA), where the AI answers questions about an image, and text-to-image generation, where alignment between image and prompt is often measured by CLIP score and image fidelity by Fréchet Inception Distance (FID). From a developer’s perspective, inference performance is equally critical. Despite the models’ size, progress in optimization, including techniques such as quantization and distillation, has kept latency and throughput manageable. For instance, a new-generation API might generate a 1024×1024 pixel image in under two seconds, a crucial threshold for real-time applications. This efficiency also reflects advances in specialized hardware and the underlying inference engines.
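CLIP score measures how well a generated image matches its prompt rather than image quality on its own. A minimal sketch of the usual computation: embed the image and the prompt with a CLIP model, take their cosine similarity, clip negative values to zero, and scale. The toy vectors below stand in for real CLIP embeddings, and the scaling constant varies between implementations (100 is a common choice).

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def clip_style_score(image_emb, prompt_emb, scale=100.0):
    """Scaled cosine similarity between image and prompt embeddings, floored at zero."""
    return scale * max(cosine_similarity(image_emb, prompt_emb), 0.0)


def mean_score(pairs, scale=100.0):
    """Average over (image_embedding, prompt_embedding) pairs, as a benchmark would report."""
    return sum(clip_style_score(img, txt, scale) for img, txt in pairs) / len(pairs)


print(clip_style_score([1.0, 0.0], [1.0, 0.0]))   # 100.0 (perfect alignment)
print(clip_style_score([1.0, 0.0], [0.0, 1.0]))   # 0.0 (unrelated)
print(clip_style_score([1.0, 0.0], [-1.0, 0.0]))  # 0.0 (negative similarity clipped)
```

Because the score depends only on embedding similarity, a benchmark typically pairs it with a separate fidelity metric rather than using it alone.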

Real-World Applications: Transforming Industries with Visual AI

The theoretical advancements in multimodal AI are impressive, but their true impact is realized when they are applied to real-world problems. The latest wave of GPT application news shows a rapid transition from research to production across numerous sectors, creating tangible value and disrupting traditional workflows.

Marketing and E-commerce: Hyper-Personalized Visuals

The impact on marketing is immediate and profound. According to recent marketing coverage, brands are moving beyond static product catalogs to dynamic, AI-generated visual experiences. Consider an online furniture store. Instead of a few standard photos of a sofa, a customer can enter a prompt like, “Show me this navy blue sectional sofa in a brightly lit, modern farmhouse living room with oak floors and a large window.” Given the product’s photo as a visual reference, a multimodal API can generate this specific scene on the fly. This capability allows for:

- Infinite A/B Testing: Marketers can generate hundreds of ad creative variations to test which visual elements resonate most with different demographics.

- Personalized Catalogs: E-commerce platforms can tailor product imagery to a user’s known style preferences, with the aim of increasing engagement and conversion rates.

- Rapid Campaign Prototyping: Marketing teams can visualize entire campaigns in minutes, iterating on concepts without the need for expensive photoshoots.

This represents a fundamental shift in how visual content is produced and consumed in the commercial world.
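The A/B testing workflow can be sketched as generating one prompt per combination of creative attributes and then assigning each user to a stable variant. The attribute lists, the prompt template, and the hash-based bucketing below are illustrative assumptions, not any platform’s method; each resulting prompt would be sent to an image-generation API and the variants compared on engagement.

```python
import hashlib
import itertools

# Hypothetical creative dimensions for the sofa example.
PRODUCT = "navy blue sectional sofa"
ROOMS = ["modern farmhouse living room", "minimalist loft", "coastal cottage"]
LIGHTING = ["bright morning light", "warm evening light"]
FLOORS = ["oak floors", "polished concrete floors"]


def build_variants(product, rooms, lighting, floors):
    """Return one prompt per attribute combination, keeping the attributes for later analysis."""
    variants = []
    for room, light, floor in itertools.product(rooms, lighting, floors):
        variants.append({
            "room": room,
            "lighting": light,
            "floor": floor,
            "prompt": f"Show this {product} in a {room} with {floor}, {light}",
        })
    return variants


def assign_variant(user_id, n_variants):
    """Hash the user ID so the same user always lands in the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % n_variants


variants = build_variants(PRODUCT, ROOMS, LIGHTING, FLOORS)
print(len(variants))  # 12
print(variants[0]["prompt"])
# Show this navy blue sectional sofa in a modern farmhouse living room with oak floors, bright morning light
```

Keeping the attributes alongside each prompt lets results be analyzed per dimension (for example, whether lighting matters more than flooring) instead of only per image.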

Content Creation and Media: A New Creative Partner

For creators, these tools are not replacements but powerful collaborators. Recent coverage of GPT in content creation highlights how authors, bloggers, and journalists can now generate closely matched editorial images for their articles in real time. A writer describing a futuristic cityscape can create that image to accompany the text, enhancing the reader’s immersion. This has major implications for the stock photography industry and gives individual creators visual capabilities once reserved for large studios. Artists, meanwhile, are using these models as a starting point for inspiration, rapidly exploring complex visual ideas before refining them with traditional tools. The same technology is also making waves in gaming, where it can generate unique textures, character concepts, and environmental assets on demand.

Specialized Fields: From Healthcare to Finance

Applications of GPT vision extend far beyond creative industries.

- Healthcare: A radiologist could use an AI assistant to analyze a CT scan and verbally ask, “Highlight all potential microfractures in this scan and generate a 3D model.” The AI could then produce an annotated image and a manipulable model to support diagnosis.

- Legal Tech: Lawyers can use multimodal AI to create visual timelines of events from dense legal documents, making complex cases easier for juries to understand.

- Finance: Analysts can generate charts and infographics from complex market data simply by describing the trends they want to visualize, a key area of development for AI assistants.

These specialized applications showcase the technology’s versatility and its potential to enhance precision and efficiency in high-stakes professions.

Developer Deep Dive: Integration, Best Practices, and Pitfalls

For developers, the release of robust multimodal APIs is a game-changer. Recent API releases emphasize ease of use and powerful customization, allowing for seamless integration into new and existing applications. However, harnessing their full potential requires understanding best practices and avoiding common pitfalls.

Working with the New GPT Vision APIs

Integrating these new capabilities is often surprisingly straightforward. A typical API endpoint accepts a JSON payload with a text prompt and various parameters that control the output. Developers are embedding these calls directly into backend services, web apps, and even mobile applications.

A hypothetical Python code snippet might look like this:

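```python
import os

import requests

# Read the key from the environment rather than hard-coding it in source.
API_KEY = os.environ["OPENAI_API_KEY"]
API_URL = "https://api.openai.com/v1/images/generations"

headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

data = {
    # Hypothetical model name; replace it with a model your account can use.
    # The "quality" and "style" values below are those documented for dall-e-3.
    "model": "gpt-image-pro",
    "prompt": "A photorealistic, cinematic shot of a vintage red sports car driving on a coastal road at sunset, dramatic lighting, high detail",
    "n": 1,
    "size": "1024x1024",
    "quality": "hd",
    "style": "vivid",
}

# json= serializes the payload; timeout keeps the call from hanging indefinitely.
response = requests.post(API_URL, headers=headers, json=data, timeout=120)
response.raise_for_status()  # surface HTTP errors (auth failures, rate limits, policy rejections)

image_url = response.json()["data"][0]["url"]
print(f"Generated Image URL: {image_url}")
```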

This simplicity is a key driver of adoption, a point highlighted in much of the ChatGPT coverage surrounding new feature releases.
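Backend integrations usually wrap calls like this in retry logic, because rate limits (HTTP 429) and transient server errors are routine. The following is a minimal sketch of exponential backoff with jitter. `TransientAPIError` and `with_retries` are hypothetical names, and a real client would raise the error when it sees a retryable status code such as 429 or a 5xx.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical exception for retryable failures (e.g. HTTP 429 or 5xx responses)."""


def with_retries(call, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Run call(), retrying transient failures with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle it
            # Cap the exponential delay, then wait a random fraction of it to avoid synchronized retries.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))


# Usage with a fake call that fails twice before succeeding.
attempts = {"count": 0}


def flaky_generate():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientAPIError("rate limited")
    return "https://example.com/generated.png"


result = with_retries(flaky_generate, sleep=lambda seconds: None)  # skip real waiting in the demo
print(result, attempts["count"])  # https://example.com/generated.png 3
```

Injecting `sleep` as a parameter keeps the function easy to test; in production the default `time.sleep` applies.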

Best Practices for Effective Prompt Engineering

The quality of the output depends heavily on the quality of the input. Effective prompt engineering is both an art and a science.

- Be Hyper-Specific: Instead of “a dog,” use “a close-up portrait of a golden retriever puppy, happy expression, sitting in a field of wildflowers, soft morning light.”

- Use Style Descriptors: Terms like “photorealistic,” “cinematic,” “impressionistic,” “vaporwave,” or “in the style of Van Gogh” guide the model’s aesthetic.

- Control Composition: Specify the shot type (“wide-angle,” “macro shot”) and the placement of objects (“a castle on a hill in the background”).

- Leverage Negative Prompts Where Supported: Some APIs, such as those built on Stable Diffusion, let you specify what to exclude (e.g., `negative_prompt: "blurry, text, watermark, ugly"`). Where available, this is a useful way to clean up results.
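These practices can be combined in a small helper that assembles a specific prompt from subject, composition, lighting, and style, and attaches exclusions only when the target API accepts them. `build_image_request` and its `supports_negative_prompt` flag are hypothetical; the `negative_prompt` field name follows the example in the list and matches the parameter used by Stable Diffusion pipelines in Hugging Face’s diffusers library.

```python
def build_image_request(subject, composition=None, lighting=None, style=None,
                        exclude=None, supports_negative_prompt=False):
    """Join the non-empty prompt parts in a fixed order; add exclusions only if supported."""
    parts = [p for p in (subject, composition, lighting, style) if p]
    request = {"prompt": ", ".join(parts)}
    if exclude and supports_negative_prompt:
        request["negative_prompt"] = ", ".join(exclude)
    # APIs without a negative-prompt field simply receive no exclusions.
    return request


request = build_image_request(
    "a close-up portrait of a golden retriever puppy, happy expression",
    composition="sitting in a field of wildflowers",
    lighting="soft morning light",
    style="photorealistic",
    exclude=["blurry", "text", "watermark"],
    supports_negative_prompt=True,
)
print(request["prompt"])
# a close-up portrait of a golden retriever puppy, happy expression, sitting in a field of wildflowers, soft morning light, photorealistic
print(request["negative_prompt"])  # blurry, text, watermark
```

Separating the parts this way makes it easy to vary one element (say, lighting) while holding the rest of the prompt fixed.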

Common Pitfalls and How to Avoid Them

While powerful, these models are not infallible. Developers should be aware of several potential issues, including the safety concerns discussed in GPT safety news.

- Anatomical and Structural Inconsistencies: Models can still struggle with details like hands or complex machinery. Iterative generation or inpainting can help correct these flaws.

- Prompt Ambiguity: A vague prompt will yield a generic or unexpected result. Always refine and add detail.

- Ignoring Ethical Guardrails: Requests for harmful, biased, or explicit content are typically blocked by safety filters, so understanding the usage policies is crucial. This is a central topic in news on GPT ethics, bias, and fairness.

- Cost and Latency: High-resolution image generation can be computationally expensive. For real-time deployments, developers should implement caching strategies and queueing systems and monitor API costs closely.
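Caching can be as simple as keying results on a hash of the exact request parameters, so repeated identical requests do not trigger a new, billed generation. The sketch below assumes a hypothetical `generate` function that calls the image API. Because generation is not deterministic, caching trades variety for cost, and in practice you would store the image bytes rather than a hosted URL, which may expire.

```python
import hashlib
import json


class ImageCache:
    """In-memory cache keyed by a hash of the request parameters."""

    def __init__(self, generate):
        self._generate = generate  # hypothetical function: params dict -> image (bytes or URL)
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(params):
        # sort_keys makes logically identical requests serialize, and therefore hash, identically.
        canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get(self, params):
        k = self.key(params)
        if k in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[k] = self._generate(params)  # only misses reach the paid API
        return self._store[k]


# Usage with a fake generator that records how often it is called.
calls = []


def fake_generate(params):
    calls.append(params)
    return f"image-{len(calls)}"


cache = ImageCache(fake_generate)
first = cache.get({"prompt": "vintage red sports car", "size": "1024x1024"})
second = cache.get({"size": "1024x1024", "prompt": "vintage red sports car"})  # same request, reordered
print(first, second, cache.hits, cache.misses)  # image-1 image-1 1 1
```

The hit and miss counters double as a cheap cost monitor: every miss corresponds to one billed generation.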

The Broader Context: Ethics, Competition, and the Future

The rapid advancement of multimodal AI doesn’t happen in a vacuum. It is surrounded by a complex ecosystem of competitors, ethical considerations, and future possibilities that will shape its development for years to come.

Navigating the Ethical Landscape

With great power comes great responsibility. The ability to generate realistic images from text prompts raises significant ethical questions, a constant theme in news on GPT regulation and privacy. The potential to create convincing misinformation and non-consensual deepfakes, and to perpetuate societal biases found in training data, is a major concern. Leading AI labs are actively working on mitigation strategies, including:

- Content Provenance: Embedding invisible watermarks or attaching provenance metadata based on standards such as C2PA to indicate that an image is AI-generated.

- Robust Safety Filters: Continuously updating models and API-level filters to prevent the generation of harmful content.

- Bias Auditing: Proactively analyzing models for biases and mitigating them with techniques such as fine-tuning.
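The core idea behind content provenance is binding a verifiable claim about an image’s origin to the exact image bytes, and that idea can be illustrated in a few lines. This is a deliberately simplified stand-in, not the C2PA format: C2PA embeds manifests signed with certificate-based signatures, whereas this sketch uses a shared-secret HMAC, and the key and generator label are placeholders.

```python
import hashlib
import hmac
import json


def _sign(claim, secret_key):
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(secret_key, payload, hashlib.sha256).hexdigest()


def make_manifest(image_bytes, generator, secret_key):
    """Record that an image is AI-generated, tied to a hash of its exact bytes, and sign the claim."""
    claim = {
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    return {"claim": claim, "signature": _sign(claim, secret_key)}


def verify_manifest(image_bytes, manifest, secret_key):
    """Valid only if the claim is untampered and still describes these exact bytes."""
    if not hmac.compare_digest(_sign(manifest["claim"], secret_key), manifest["signature"]):
        return False  # claim edited, or signed with a different key
    return manifest["claim"]["content_sha256"] == hashlib.sha256(image_bytes).hexdigest()


key = b"placeholder-secret"             # placeholder; real systems use managed keys
image = b"...generated image bytes..."  # stand-in for real PNG/JPEG data
manifest = make_manifest(image, "example-image-model", key)

print(verify_manifest(image, manifest, key))            # True
print(verify_manifest(image + b"edit", manifest, key))  # False: the bytes changed
```

Metadata like this can be stripped from a file entirely, which is one reason it is paired with invisible watermarks embedded in the pixels themselves.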

The Competitive Ecosystem

While OpenAI is a major player, the field is bustling with innovation. Powerful alternatives include Midjourney, known for its artistic flair, and Google’s Imagen and Parti models. A key divide in the ecosystem is between closed-source APIs and the vibrant open-source community, led by models like Stable Diffusion. Open-source models offer unparalleled flexibility and control for developers willing to manage their own infrastructure, while closed APIs provide ease of use, scalability, and integrated safety systems. This competitive tension between open and closed platforms is a powerful catalyst for innovation across the entire industry.

What’s Next? Towards True General Intelligence

This is just the beginning. Current trends point toward an even more integrated future. The next frontier involves adding more modalities: audio, video, and even 3D generation. We can anticipate AI assistants that watch a video, summarize its content, and then generate a short animated clip illustrating the key points. Work on GPT-based agents describes systems that not only perceive their digital environment through vision but also take actions, such as navigating a website to complete a task. Each step brings us closer to more capable, general-purpose AI, with the ultimate goal of systems that understand and interact with the world in all its rich, multimodal complexity. The future these developments point to is one where the boundary between human and machine creativity becomes increasingly blurred.

Conclusion

The advent of high-fidelity, API-driven multimodal AI marks a pivotal moment in the evolution of artificial intelligence. We have moved beyond the confines of text to a new frontier where AI can see, understand, and create visually, combining vision and language in a way that more closely resembles human perception. For developers, this opens up a vast new design space for building more intuitive and powerful applications. For businesses, it offers unprecedented tools for personalization, marketing, and process automation. For creators, it provides a revolutionary new medium for artistic expression. While navigating the significant ethical and safety challenges is paramount, the trajectory is clear. The fusion of language and vision is not just the latest trend in OpenAI GPT news; it is a foundational building block for the next generation of intelligent systems that will redefine our relationship with technology.